Authors: Nay Myat Min, Long H. Pham, Jun Sun

Published on: May 23, 2024

Impact Score: 7.8

arXiv code: arXiv:2405.14781

Summary

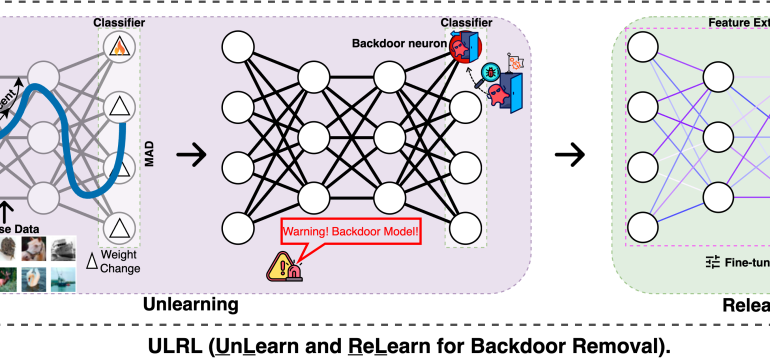

- What is new: Introduces ULRL (UnLearn and ReLearn), a backdoor-removal method for neural networks that is effective against various types of backdoors and needs only a small set of clean samples.

- Why this is important: Neural backdoors are a serious threat in security-critical applications because they allow attackers to alter a model's behavior.

- What the research proposes: The ULRL method, which first uses unlearning to identify suspicious neurons and then applies targeted weight tuning to those neurons to mitigate the backdoor.

- Results: ULRL outperforms existing methods at eliminating 12 different types of backdoors while preserving model utility.
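The two-stage procedure summarized above can be illustrated with a toy Python sketch that uses only the standard library. It is not the authors' implementation. The one-hidden-layer network, the hypothetical helpers `unlearn`, `suspicious_neurons` and `relearn`, the rule that flags the neurons whose input weights move most during unlearning, and the reset-then-fine-tune recipe for the relearning step are all assumptions made for illustration.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def forward(W, v, b, x):
    # Hidden ReLU activations, then a single sigmoid output unit.
    h = [max(0.0, sum(wi * xi for wi, xi in zip(row, x))) for row in W]
    return h, sigmoid(sum(vj * hj for vj, hj in zip(v, h)) + b)


def grads(W, v, b, data):
    """Mean gradients of binary cross-entropy w.r.t. W, v and b."""
    gW = [[0.0] * len(row) for row in W]
    gv = [0.0] * len(v)
    gb = 0.0
    for x, y in data:
        h, p = forward(W, v, b, x)
        dz = p - y  # d(BCE)/d(logit) for a sigmoid output
        gb += dz
        for j in range(len(W)):
            gv[j] += dz * h[j]
            if h[j] > 0.0:  # ReLU passes gradient only when the neuron is active
                for i, xi in enumerate(x):
                    gW[j][i] += dz * v[j] * xi
    n = len(data)
    return [[g / n for g in row] for row in gW], [g / n for g in gv], gb / n


def unlearn(W, v, b, clean, lr=0.1, steps=20):
    """Stage 1: gradient *ascent* on the clean loss, applied to copies."""
    W, v = [row[:] for row in W], v[:]
    for _ in range(steps):
        gW, gv, gb = grads(W, v, b, clean)
        W = [[w + lr * g for w, g in zip(row, grow)] for row, grow in zip(W, gW)]
        v = [vj + lr * g for vj, g in zip(v, gv)]
        b += lr * gb
    return W, v, b


def suspicious_neurons(W_before, W_after, k):
    # ASSUMPTION: a neuron is "suspicious" if its input weights moved the most
    # (largest L2 shift) during unlearning; the paper's criterion may differ.
    shift = [math.dist(r0, r1) for r0, r1 in zip(W_before, W_after)]
    return sorted(range(len(shift)), key=lambda j: shift[j], reverse=True)[:k]


def relearn(W, v, b, clean, flagged, lr=0.1, steps=50, seed=0):
    """Stage 2 (hypothetical variant): reset the flagged neurons, then
    fine-tune only their weights on the clean samples."""
    rng = random.Random(seed)
    W, v = [row[:] for row in W], v[:]
    for j in flagged:
        W[j] = [rng.uniform(-0.1, 0.1) for _ in W[j]]
    for _ in range(steps):
        gW, gv, _ = grads(W, v, b, clean)
        # Gradients of unflagged neurons are discarded, so they stay frozen.
        for j in flagged:
            W[j] = [w - lr * g for w, g in zip(W[j], gW[j])]
            v[j] -= lr * gv[j]
    return W, v, b


# Toy run: random 3-input, 6-neuron model and four labelled clean samples.
rng = random.Random(42)
W0 = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(6)]
v0 = [rng.uniform(-1, 1) for _ in range(6)]
b0 = 0.0
clean = [([1.0, 0.0, 0.5], 1), ([0.0, 1.0, 0.5], 0),
         ([1.0, 1.0, 0.0], 1), ([0.2, 0.9, 1.0], 0)]

Wu, vu, bu = unlearn(W0, v0, b0, clean)
flagged = suspicious_neurons(W0, Wu, k=2)
W1, v1, b1 = relearn(W0, v0, b0, clean, flagged)
print("suspicious neurons:", flagged)
```

In this sketch the unlearning pass runs on a copy, and relearning starts again from the original weights. Only the flagged neurons are retrained, which is one simple way to make the weight tuning "targeted" while leaving every other neuron's parameters untouched.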

Technical Details

Technological frameworks used: UnLearn and ReLearn (ULRL)

Models used: Deep neural networks

Data used: Small set of clean samples

Potential Impact

Cybersecurity providers and companies that rely on AI for critical applications.

Want to implement this idea in a business?

We have generated a startup concept here: SecureNeuro.
