Authors: Adibvafa Fallahpour, Mahshid Alinoori, Arash Afkanpour, Amrit Krishnan

Published on: May 23, 2024

Impact Score: 7.4

arXiv ID: 2405.14567

Summary

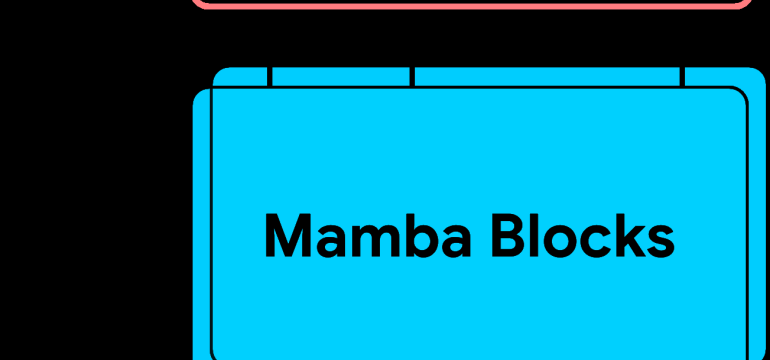

- What is new: EHRMamba, a model for electronic health records (EHRs) built on the Mamba architecture, whose computational cost grows linearly with sequence length, together with a Multitask Prompted Finetuning (MTF) method.

- Why this is important: Transformers have seen limited real-world deployment in healthcare because of their high computational cost (self-attention scales quadratically with sequence length), limited flexibility, and complex maintenance.

- What the research proposes: EHRMamba combines a novel MTF method with an architecture whose cost scales linearly with sequence length, allowing it to handle long patient histories and simplifying multitask learning.

- Results: State-of-the-art performance on 6 major clinical tasks and superior EHR forecasting, evaluated on the MIMIC-IV dataset.
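The linear-cost claim can be illustrated with a simplified selective state-space recurrence, the mechanism at the core of Mamba. The sketch below is a minimal NumPy version under stated assumptions: a diagonal state matrix `A`, an input-dependent step size `delta`, and input-dependent projections `B` and `C`. The function `selective_scan` and the weight names `W_delta`, `W_B`, `W_C` are hypothetical, and this is not EHRMamba's or Mamba's actual kernel (it omits gating, the convolution, and the skip term). Each token updates a fixed-size hidden state once, so the cost grows linearly with sequence length L, whereas self-attention compares every pair of tokens (O(L²)).

```python
import numpy as np


def softplus(z):
    # Numerically stable log(1 + exp(z)).
    return np.logaddexp(0.0, z)


def selective_scan(x, A, W_delta, W_B, W_C):
    """Simplified selective SSM scan (illustrative, not the Mamba kernel).

    x: (L, D) input sequence; A: (D, N) negative decay rates;
    W_delta: (D, D); W_B, W_C: (D, N) hypothetical projection weights.
    Returns y: (L, D). One fixed-size state update per token -> O(L) time.
    """
    L, D = x.shape
    N = A.shape[1]
    h = np.zeros((D, N))                    # fixed-size hidden state
    ys = np.empty((L, D))
    for t in range(L):
        xt = x[t]                           # (D,)
        delta = softplus(xt @ W_delta)      # (D,) input-dependent step size
        B = xt @ W_B                        # (N,) input-dependent input projection
        C = xt @ W_C                        # (N,) input-dependent output projection
        A_bar = np.exp(delta[:, None] * A)  # (D, N) discretized decay in (0, 1)
        # Decay the old state and write the current token into it.
        h = A_bar * h + (delta[:, None] * B[None, :]) * xt[:, None]
        ys[t] = h @ C                       # (D,) read out the state
    return ys
```

Because `h` has a fixed size, inference memory does not grow with the length of the patient history. Mamba itself computes this kind of recurrence with a hardware-aware parallel scan rather than a Python loop.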

Technical Details

Technological frameworks used: Mamba architecture, HL7 FHIR data standard

Models used: EHRMamba

Data used: MIMIC-IV dataset
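To make the MTF and HL7 FHIR items more concrete, the sketch below turns a few simplified FHIR R4-style resources (plain dicts with `resourceType`, `code.coding`, and a timestamp field) into a chronological token sequence and attaches a task-prompt token, so one model can be finetuned on examples from several tasks mixed together. The token format, the task token names, and the functions `build_prompted_sequence` and `build_multitask_batch` are hypothetical; EHRMamba's actual tokenization and prompt format may differ. The task token is placed last here on the assumption that, in a left-to-right model, only the final position has seen the whole history.

```python
from datetime import datetime

# Hypothetical task-prompt tokens, one per clinical task (names are illustrative).
TASK_TOKENS = {
    "mortality": "[TASK_MORTALITY]",
    "readmission": "[TASK_READMISSION]",
    "length_of_stay": "[TASK_LOS]",
}

# FHIR R4 Observation and Condition store their clinical time in different fields.
TIME_FIELDS = ("effectiveDateTime", "onsetDateTime")


def event_time(resource: dict) -> datetime:
    """Return the clinical timestamp of a simplified FHIR-style resource."""
    for field in TIME_FIELDS:
        if field in resource:
            return datetime.fromisoformat(resource[field])
    raise ValueError(f"no supported timestamp on {resource['resourceType']}")


def resource_to_token(resource: dict) -> str:
    """Map a resource to a hypothetical 'Type:code' token using its first coding."""
    code = resource["code"]["coding"][0]["code"]
    return f"{resource['resourceType']}:{code}"


def build_prompted_sequence(resources: list, task: str) -> list:
    """Sort events chronologically, then append the task-prompt token."""
    events = sorted(resources, key=event_time)
    return [resource_to_token(r) for r in events] + [TASK_TOKENS[task]]


def build_multitask_batch(examples: list) -> list:
    """examples: (resources, task, label) triples drawn from different tasks."""
    return [(build_prompted_sequence(res, task), label) for res, task, label in examples]
```

Because every example carries its own task token, examples for mortality, readmission, and other tasks can share one batch and one set of model weights, which is the practical simplification multitask prompting aims for.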

Potential Impact

Healthcare providers employing EHR systems, companies specializing in healthcare analytics, developers of clinical decision support systems

Want to implement this idea in a business?

We have generated a startup concept here: HealthMamba.
