Authors: Pingchuan Ma, Tsun-Hsuan Wang, Minghao Guo, Zhiqing Sun, Joshua B. Tenenbaum, Daniela Rus, Chuang Gan, Wojciech Matusik

Published on: May 16, 2024

Impact Score: 7.4

arXiv ID: 2405.09783

Summary

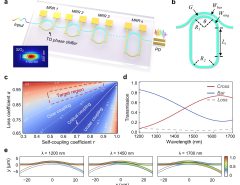

- What is new: Introduction of the Scientific Generative Agent (SGA), a bilevel optimization framework that combines the abstract reasoning capabilities of Large Language Models (LLMs) with the computational strength of simulations.

- Why this is important: On their own, Large Language Models struggle to simulate observational feedback and to ground that feedback in language, which limits their usefulness for physical scientific discovery.

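- What the research proposes: The SGA framework uses LLMs to propose scientific hypotheses and reason about the discrete components of a solution, such as physics equations or molecule structures. Simulations act as the experimental platform: they provide observational feedback and, through differentiability, optimize the continuous components, such as physical parameters.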

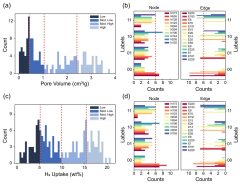

- Results: The framework demonstrated its efficacy in constitutive law discovery and molecular design, producing novel solutions that are both unexpected and analytically coherent.
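The bilevel structure described above can be sketched as a toy loop. This is a minimal illustration under our own assumptions, not the authors' implementation: the LLM is replaced by a hypothetical `propose` function that draws from a fixed pool of candidate formulas, the simulator is replaced by synthetic observations from an assumed ground truth y = 2.5·x², and the differentiable simulation is replaced by hand-written gradients. The discrete choice (which formula) lives in the outer loop; the continuous parameter θ is fitted in the inner loop.

```python
from typing import Callable, Dict, List, Optional, Tuple

# A hypothesis is (name, f(x, theta), df/dtheta(x, theta)).
# In SGA the discrete part would be written by an LLM; here it is a fixed, hypothetical pool.
Hypothesis = Tuple[str, Callable[[float, float], float], Callable[[float, float], float]]

CANDIDATES: List[Hypothesis] = [
    ("linear", lambda x, t: t * x, lambda x, t: x),
    ("quadratic", lambda x, t: t * x * x, lambda x, t: x * x),
    ("cubic", lambda x, t: t * x ** 3, lambda x, t: x ** 3),
]


def simulate_observations() -> List[Tuple[float, float]]:
    # Stand-in for simulator output: assumed hidden ground truth y = 2.5 * x^2.
    return [(k / 10, 2.5 * (k / 10) ** 2) for k in range(1, 11)]


def inner_optimize(h: Hypothesis, data: List[Tuple[float, float]],
                   steps: int = 2000, lr: float = 0.5) -> Tuple[float, float]:
    """Inner level: fit the continuous parameter theta by gradient descent on MSE."""
    _, f, df = h
    theta = 0.0
    n = len(data)
    for _ in range(steps):
        grad = sum(2 * (f(x, theta) - y) * df(x, theta) for x, y in data) / n
        theta -= lr * grad
    loss = sum((f(x, theta) - y) ** 2 for x, y in data) / n
    return theta, loss


def propose(history: Dict[str, float], k: int = 1) -> List[Hypothesis]:
    """Outer level stand-in for the LLM: return up to k hypotheses not yet tried.
    It only uses which names appear in `history`; an LLM would also read the losses."""
    untried = [h for h in CANDIDATES if h[0] not in history]
    return untried[:k]


def bilevel_search(rounds: int = 3, tol: float = 1e-8):
    data = simulate_observations()
    history: Dict[str, float] = {}
    best: Optional[Tuple[str, float, float]] = None  # (name, theta, loss)
    for _ in range(rounds):
        for h in propose(history):
            theta, loss = inner_optimize(h, data)
            history[h[0]] = loss  # feedback passed back to the outer level
            if best is None or loss < best[2]:
                best = (h[0], theta, loss)
        if best is not None and best[2] < tol:
            break  # a hypothesis already explains the observations
    return best, history


best, history = bilevel_search()
print(best[0], round(best[1], 4))
```

With these assumptions the search stops after the quadratic candidate, with θ close to 2.5, and never evaluates the cubic form. In SGA the outer level is where the LLM reads the loss feedback and writes genuinely new hypotheses, which a fixed candidate pool cannot do.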

Technical Details

Technological frameworks used: Scientific Generative Agent (SGA), a bilevel optimization framework

Models used: Large Language Models (LLMs)

Data used: Simulation feedback from physics tasks (constitutive laws) and molecular design tasks (molecular structures)

Potential Impact

Pharmaceuticals, materials science, academic research institutions, and companies involved in scientific R&D could be significantly impacted.

