Authors: Weifeng Lin, Ziheng Wu, Wentao Yang, Mingxin Huang, Lianwen Jin

Published on: October 09, 2023

Impact Score: 7.8

arXiv ID: arXiv:2310.05393

Summary

- What is new: Hierarchical Side-Tuning (HST), a Parameter-Efficient Transfer Learning (PETL) method that uses a Hierarchical Side Network (HSN) to exploit the multi-scale features of Vision Transformers (ViTs) across diverse visual tasks.

- Why this is important: Vision Transformers need a fine-tuning approach that is both cost-effective and comprehensive enough to cover a range of complex visual tasks.

- What the research proposes: HST feeds intermediate activations from the ViT backbone into a side network to improve performance on complex tasks.

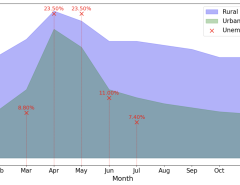

- Results: HST achieved state-of-the-art performance on 13 of the 19 tasks in the VTAB-1K benchmark and outperformed existing PETL methods on the COCO and ADE20K benchmarks.

Technical Details

Technological frameworks used: Vision Transformers (ViTs)

Models used: Hierarchical Side Network (HSN)

Data used: VTAB-1K, COCO, ADE20K benchmarks
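
The side-tuning idea summarized above (a backbone whose intermediate activations feed a small side network) can be illustrated with a toy sketch. This is not the authors' implementation: the summary does not describe the HSN architecture, so `make_frozen_block`, `run_backbone`, `avg_pool`, and `ToySideNetwork` are hypothetical names, the backbone is a stack of fixed random linear maps rather than a real ViT, the backbone is assumed to stay frozen, and the pooling factors are arbitrary. It only shows the general pattern of tapping each backbone stage and turning the taps into features at several scales.

```python
import random


def make_frozen_block(width, seed):
    """Build one frozen 'backbone block': a fixed random linear map plus ReLU."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(width)] for _ in range(width)]

    def block(features):
        return [max(0.0, sum(w * f for w, f in zip(row, features))) for row in weights]

    return block


def run_backbone(blocks, features):
    """Run the frozen blocks in order and keep every intermediate activation."""
    activations = []
    for block in blocks:
        features = block(features)
        activations.append(features)
    return activations


def avg_pool(values, factor):
    """Average non-overlapping windows of `factor` values (a coarser scale)."""
    return [sum(values[i:i + factor]) / factor for i in range(0, len(values), factor)]


class ToySideNetwork:
    """Hypothetical side branch: one trainable scale per tapped backbone stage."""

    def __init__(self, num_stages):
        self.scales = [1.0] * num_stages  # the only parameters that would be trained

    def forward(self, activations):
        # Deeper stages are pooled more aggressively, giving a feature pyramid.
        pyramid = []
        for stage, (scale, activation) in enumerate(zip(self.scales, activations)):
            pooled = avg_pool(activation, 2 ** stage)
            pyramid.append([scale * value for value in pooled])
        return pyramid


blocks = [make_frozen_block(width=8, seed=s) for s in range(3)]
activations = run_backbone(blocks, [1.0] * 8)
side = ToySideNetwork(num_stages=len(blocks))
pyramid = side.forward(activations)
print([len(level) for level in pyramid])  # [8, 4, 2]
```

In this sketch the backbone weights are never modified; only `side.scales` would be updated during training, which is what keeps the number of tuned parameters small in side-tuning approaches generally.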

Potential Impact

This work could affect technology firms focused on AI-driven image analysis, machine vision technology providers, and sectors that employ advanced visual recognition systems.

