Authors: Fatema Tuj Johora Faria, Mukaffi Bin Moin, Pronay Debnath, Asif Iftekher Fahim, Faisal Muhammad Shah

Published on: May 12, 2024

Impact Score: 7.4

arXiv ID: arXiv:2405.07338

Summary

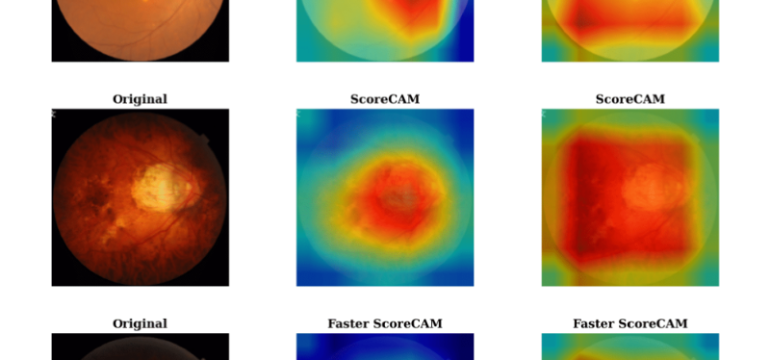

- What is new: The study applies Explainable AI (XAI) techniques to make disease-detection models more transparent, and it broadens the set of models and architectures used for fundus image analysis.

- Why this is important: Existing methods for automatically segmenting retinal blood vessels lack discriminative power and are affected by pathological regions, which hinders early disease diagnosis.

- What the research proposes: Applying deep learning models that combine advanced attention mechanisms with a variety of backbones to improve performance, paired with Explainable AI to improve interpretability.

- Results: Among the classification models, ResNet101 achieved the highest accuracy (94.17%); among the segmentation models, Swin-Unet achieved the highest Mean Pixel Accuracy (86.19%).
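
The summary reports Mean Pixel Accuracy (MPA) without defining it. A common definition, assumed here because the paper's exact formula is not given in this summary, computes the fraction of correctly labelled pixels separately for each class c, n_cc / t_c (where t_c is the number of ground-truth pixels of class c), and then averages over the classes present. The sketch below assumes binary vessel masks (0 = background, 1 = vessel) stored as nested lists; the function name `mean_pixel_accuracy` is hypothetical.

```python
def mean_pixel_accuracy(y_true, y_pred, num_classes=2):
    """Per-class pixel accuracy, averaged over classes present in y_true.

    Assumed definition: MPA = (1/K) * sum_c n_cc / t_c, where K counts only
    classes that occur in the ground truth (others are skipped to avoid /0).
    """
    if len(y_true) != len(y_pred) or any(
        len(a) != len(b) for a, b in zip(y_true, y_pred)
    ):
        raise ValueError("masks must have the same shape")
    correct = [0] * num_classes  # pixels of class c that were predicted as c
    total = [0] * num_classes    # pixels whose ground-truth label is c
    for row_t, row_p in zip(y_true, y_pred):
        for t, p in zip(row_t, row_p):
            total[t] += 1
            if t == p:
                correct[t] += 1
    per_class = [c / n for c, n in zip(correct, total) if n > 0]
    if not per_class:
        raise ValueError("masks are empty")
    return sum(per_class) / len(per_class)
```

For example, `mean_pixel_accuracy([[0, 0, 0, 1]], [[0, 0, 0, 0]])` returns 0.5, whereas plain pixel accuracy on the same masks would be 0.75: the single missed vessel pixel counts as an entire class, which matters because vessel pixels are usually a small minority of a fundus image.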

Technical Details

Technological frameworks used: Explainable AI techniques (Grad-CAM, Grad-CAM++, Score-CAM, Faster Score-CAM, LayerCAM) and deep learning architectures
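
Grad-CAM, the first technique listed, weights each feature map of a chosen convolutional layer by the spatial average of the class score's gradient with respect to that map, sums the weighted maps, and applies a ReLU so that only evidence in favour of the class remains. The other listed variants mainly change how those weights are obtained (Score-CAM, for instance, replaces gradients with the change in class score when the input is masked by each activation map). The minimal sketch below assumes the activations and gradients have already been extracted from a trained model (in practice through a framework's autograd) and are passed in as nested lists. It omits upsampling the heatmap to the fundus image resolution, and the name `grad_cam` is illustrative rather than an API from any library.

```python
from typing import List

Map = List[List[float]]  # one H x W feature map (or its gradient)


def grad_cam(activations: List[Map], gradients: List[Map]) -> Map:
    """Grad-CAM heatmap from the K feature maps of one convolutional layer."""
    h, w = len(activations[0]), len(activations[0][0])
    # alpha_k: global average pooling of d(class score) / d(feature map k)
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum of feature maps, then ReLU to keep positive evidence only
    cam = [
        [max(0.0, sum(a * act[i][j] for a, act in zip(alphas, activations)))
         for j in range(w)]
        for i in range(h)
    ]
    # Min-max normalise to [0, 1] so the map can be overlaid on the image
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    if hi == lo:
        return [[0.0] * w for _ in range(h)]
    return [[(v - lo) / (hi - lo) for v in row] for row in cam]
```

With two toy 2 x 2 maps whose gradients average to +1 and -1, the heatmap highlights only the locations where the positively weighted map dominates.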

Models used: CNN classification models (ResNet50V2, ResNet101V2, ResNet152V2, DenseNet121, EfficientNetB0) and segmentation models (TransUNet, Attention U-Net, Swin-Unet)
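
Attention U-Net places attention gates on its skip connections: a gating signal from a coarser decoder level is combined with the encoder features to produce a per-pixel coefficient in (0, 1) that rescales those features, which lets the network down-weight irrelevant regions. The sketch below follows the additive form of such a gate, treating each 1x1 convolution as a matrix-vector product per pixel. For simplicity it assumes the gating signal has already been resampled to the skip features' resolution, flattens each feature map to a list of per-pixel channel vectors, and omits bias terms; the function names and weights are hypothetical placeholders, not trained parameters from the paper.

```python
import math
from typing import List

Vec = List[float]
Mat = List[List[float]]  # rows = output channels of a 1x1 convolution


def matvec(m: Mat, v: Vec) -> Vec:
    """Apply a 1x1 convolution (a channel-mixing matrix) to one pixel."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def attention_gate(x: List[Vec], g: List[Vec],
                   w_x: Mat, w_g: Mat, psi: Vec) -> List[Vec]:
    """Additive attention gate applied pixel by pixel (biases omitted)."""
    out = []
    for x_p, g_p in zip(x, g):
        # Project skip features and gating signal into a shared space, add, ReLU
        hidden = [max(0.0, a + b)
                  for a, b in zip(matvec(w_x, x_p), matvec(w_g, g_p))]
        # psi collapses the hidden vector to a scalar; sigmoid maps it to (0, 1)
        q = sum(p * h for p, h in zip(psi, hidden))
        alpha = 1.0 / (1.0 + math.exp(-q))
        # Rescale the original skip features by the attention coefficient
        out.append([alpha * v for v in x_p])
    return out
```

With all projection weights set to zero, every coefficient is sigmoid(0) = 0.5, so `attention_gate([[1.0, 2.0]], [[0.0]], [[0.0, 0.0]], [[0.0]], [1.0])` halves the input to `[[0.5, 1.0]]`.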

Data used: fundus images

Potential Impact

Healthcare providers, diagnostic imaging centers, AI healthcare startups, medical imaging tech companies

Want to implement this idea in a business?

We have generated a startup concept here: VisioNet.
