Authors: Yuxia Wang, Minghan Wang, Hasan Iqbal, Georgi Georgiev, Jiahui Geng, Preslav Nakov

Published on: May 09, 2024

Impact Score: 7.2

arXiv ID: arXiv:2405.05583

Summary

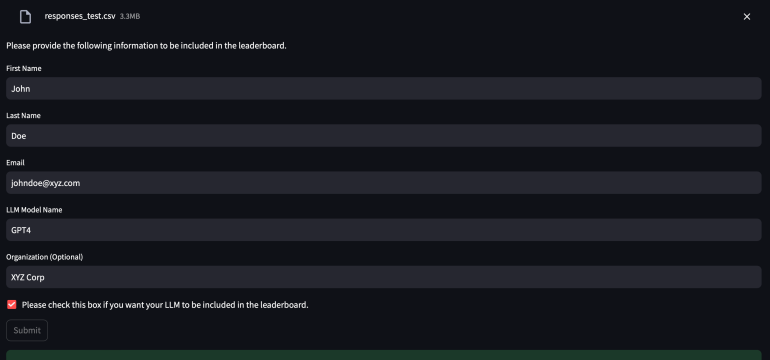

- What is new: OpenFactCheck introduces a unified framework for evaluating the factuality of large language model (LLM) outputs across domains.

- Why this is important: Verifying the factual accuracy of LLM responses in open-domain settings is difficult, and there are no standard benchmarks for comparing models or fact-checkers.

- What the research proposes: The OpenFactCheck framework, which comprises three modules: CUSTCHECKER, for building customized automatic fact-checkers; LLMEVAL, for evaluating LLMs' factuality in a unified way; and CHECKEREVAL, for measuring the reliability of fact-checkers.

- Results: OpenFactCheck offers a single toolkit for assessing, and helping to improve, the factual correctness of LLM outputs across multiple contexts.
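The idea behind a customizable checker like CUSTCHECKER can be illustrated by composing a fact-checker from swappable stages. The following is a minimal sketch that assumes a three-stage design (claim processor, retriever, verifier). The class `CustomChecker`, the toy corpus, and every helper function are hypothetical and are not OpenFactCheck's actual API.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical stage signatures (assumption, not OpenFactCheck's real interfaces).
ClaimProcessor = Callable[[str], List[str]]
Retriever = Callable[[str], List[str]]
Verifier = Callable[[str, List[str]], bool]


@dataclass
class CustomChecker:
    """Composes three swappable stages into one fact-checking pipeline."""
    process_claims: ClaimProcessor
    retrieve: Retriever
    verify: Verifier

    def check(self, response: str) -> List[Tuple[str, bool]]:
        # Split the response into claims, gather evidence, then judge each claim.
        results = []
        for claim in self.process_claims(response):
            evidence = self.retrieve(claim)
            results.append((claim, self.verify(claim, evidence)))
        return results


# Toy stand-ins for each stage (illustrative only).
def split_sentences(text: str) -> List[str]:
    return [s.strip() for s in text.split(".") if s.strip()]


CORPUS = [
    "Paris is the capital of France",
    "Water boils at 100 degrees Celsius at sea level",
]


def keyword_retrieve(claim: str) -> List[str]:
    # Return every document that shares at least one word with the claim.
    words = set(claim.lower().split())
    return [doc for doc in CORPUS if words & set(doc.lower().split())]


def exact_match_verify(claim: str, evidence: List[str]) -> bool:
    # A claim counts as supported only if some evidence matches it exactly.
    return any(claim.lower() == doc.lower() for doc in evidence)


checker = CustomChecker(split_sentences, keyword_retrieve, exact_match_verify)
print(checker.check("Paris is the capital of France. Paris is the capital of Spain."))
```

With this design, a real retriever (for example, web search) or an LLM-based verifier can replace any one stage without touching the other two.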

Technical Details

Technological frameworks used: OpenFactCheck framework with CUSTCHECKER, LLMEVAL, and CHECKEREVAL modules

Models used: Large Language Models (LLMs)

Data used: Human-annotated datasets for evaluating fact-checker reliability
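A reliability check in the spirit of CHECKEREVAL amounts to comparing a fact-checker's verdicts with human labels. The following is a minimal sketch under stated assumptions: each verdict is a boolean (True means "factual"), and the positive class is the false claim, because catching errors is the main goal. The function name `checker_reliability` and this choice of metrics are illustrative assumptions, not the paper's exact protocol.

```python
from typing import Dict, List


def checker_reliability(predicted: List[bool], gold: List[bool]) -> Dict[str, float]:
    """Score a fact-checker's claim verdicts against human labels.

    True means "claim is factual". False claims are treated as the
    positive class (an assumption made for this sketch).
    """
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    tp = sum(1 for p, g in zip(predicted, gold) if not p and not g)  # false claim flagged
    fp = sum(1 for p, g in zip(predicted, gold) if not p and g)      # true claim wrongly flagged
    fn = sum(1 for p, g in zip(predicted, gold) if p and not g)      # false claim missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = sum(p == g for p, g in zip(predicted, gold)) / len(gold) if gold else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Usage: four human-labelled claims and one checker's verdicts.
print(checker_reliability([True, False, True, False], [True, False, False, True]))
```

Focusing on false claims keeps the score from being inflated by the many easy, correct claims that a checker can simply accept.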

Potential Impact

This research could affect markets and companies working on AI technologies, content generation, and information verification services, as well as sectors that rely on accurate, data-driven decision-making.

Want to implement this idea in a business?

We have generated a startup concept here: FactAssure.
