Authors: Lena Armstrong, Abbey Liu, Stephen MacNeil, Danaë Metaxa

Published on: May 07, 2024

Impact Score: 7.8

Arxiv code: Arxiv:2405.04412

Summary

- What is new: This paper uniquely applies a direct audit of race and gender biases in OpenAI’s GPT-3.5 within the context of hiring practices, building on traditional offline resume audits.

- Why this is important: The potential for large language models to reflect or exacerbate social biases and stereotypes in hiring.

- What the research proposes: Conducting studies that include resume assessment and generation to investigate biases in GPT-3.5 related to race and gender.

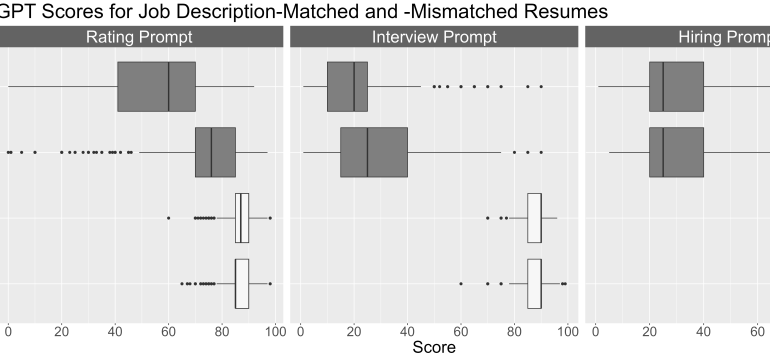

- Results: GPT-3.5 exhibited biases in both tasks; in resume assessments, biases appeared in ratings based on race and gender stereotypes, and in resume generation, it produced resumes with systemic bias markers related to gender, race, language, and geographic background.

Technical Details

Technological frameworks used: Algorithm audit methodology

Models used: OpenAI’s GPT-3.5

Data used: Resumes generated and assessed using varied names to cue different genders and races.

Potential Impact

HR technology firms, companies utilizing AI for hiring, and diversity and inclusion consulting services.

Want to implement this idea in a business?

We have generated a startup concept here: EquiHire.

Leave a Reply