Authors: Xinyi Li, Yongfeng Zhang, Edward C. Malthouse

Published on: May 06, 2024

Impact Score: 8.0

arXiv ID: arXiv:2405.01593

Summary

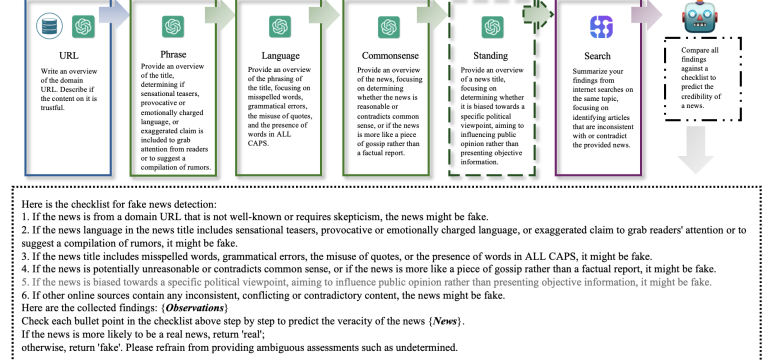

- What is new: Introduces FactAgent, an agentic approach that uses large language models (LLMs) for fake news detection by emulating human expert behavior and providing a structured workflow for verifying news claims.

- Why this is important: The rapid spread of misinformation online poses significant challenges to societal well-being and democracy.

- What the research proposes: FactAgent lets LLMs verify news claims efficiently without any model training. It follows a step-by-step workflow to check a claim's veracity and then integrates the findings into a final decision.

- Results: Experiments show that FactAgent verifies claims effectively, with greater efficiency and transparency than manual verification.
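The workflow described above can be illustrated with a minimal Python sketch. It is not the authors' implementation. The tool names (`language_check`, `url_check`, `absolutes_check`), the `TRUSTED_DOMAINS` allowlist, and the keyword heuristics are hypothetical stand-ins for LLM prompts and external tools, and a simple majority vote replaces the LLM's final integration step. The sketch shows only the structure: each tool checks one aspect of a news item, every finding is kept with a rationale, and the findings are combined into a verdict.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple
from urllib.parse import urlparse


@dataclass
class NewsItem:
    title: str
    source_url: str


@dataclass
class Finding:
    tool: str
    suspicious: bool
    rationale: str


# Each hypothetical "tool" inspects one aspect of the item and reports a Finding.
Tool = Callable[[NewsItem], Finding]

# Hypothetical allowlist; a real system would use a curated source-credibility list.
TRUSTED_DOMAINS = {"reuters.com", "apnews.com", "bbc.co.uk"}


def language_check(item: NewsItem) -> Finding:
    # Stand-in for an LLM judging writing style: crude substring match for sensational phrasing.
    markers = ("shocking", "you won't believe", "!!!")
    hit = any(m in item.title.lower() for m in markers)
    return Finding("language", hit, "sensational phrasing" if hit else "neutral phrasing")


def url_check(item: NewsItem) -> Finding:
    # Flags sources whose domain (minus a leading "www.") is not on the allowlist.
    host = (urlparse(item.source_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    trusted = host in TRUSTED_DOMAINS
    status = "trusted" if trusted else "not on allowlist"
    return Finding("url", not trusted, f"domain {host!r} {status}")


def absolutes_check(item: NewsItem) -> Finding:
    # Stand-in for a commonsense check: crude substring match for absolute or miracle claims.
    markers = ("100%", "miracle", "cure")
    hit = any(m in item.title.lower() for m in markers)
    return Finding("absolutes", hit, "absolute claim" if hit else "no absolute claim")


def run_workflow(item: NewsItem, tools: List[Tool]) -> Tuple[str, List[Finding]]:
    # Run every tool in order and keep each finding, so the decision stays traceable.
    findings = [tool(item) for tool in tools]
    # Final step: a strict majority vote stands in for the LLM's integration of findings.
    flagged = sum(f.suspicious for f in findings)
    verdict = "fake" if flagged * 2 > len(findings) else "real"
    return verdict, findings


if __name__ == "__main__":
    item = NewsItem("SHOCKING: miracle cure works 100%!!!", "http://example-news.biz/story")
    verdict, findings = run_workflow(item, [language_check, url_check, absolutes_check])
    for f in findings:
        print(f.tool, f.suspicious, f.rationale)
    print("verdict:", verdict)
```

Returning the per-tool findings alongside the verdict is what makes the decision inspectable, which mirrors the transparency benefit the summary attributes to FactAgent. Replacing a heuristic with an actual LLM call would change only the body of one tool function.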

Technical Details

Technological frameworks used: FactAgent workflow

Models used: Pre-trained large language models (LLMs)

Data used: Varies by claim; the workflow draws on the LLM's internal knowledge or on external tools it invokes.

Potential Impact

This research could affect several sectors, including social media platforms, news organizations, and companies working in digital trust and safety, and could particularly benefit organizations investing in automated, AI-assisted verification.

Want to implement this idea in a business?

We have generated a startup concept here: VeriTruth.
