Authors: Xiaomin Yu, Yezhaohui Wang, Yanfang Chen, Zhen Tao, Dinghao Xi, Shichao Song, Simin Niu

Published on: May 03, 2024

Impact Score: 8.2

arXiv ID: 2405.00711

Summary

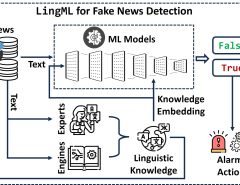

- What is new: A new taxonomy for categorizing Fake Artificial Intelligence Generated Content (FAIGC), together with a comprehensive review of detection methods.

- Why this is important: FAIGC is highly realistic and increasingly common in many aspects of daily life, which makes it difficult to distinguish from genuine content.

- What the research proposes: A taxonomy for understanding FAIGC, plus a review of detection methods grouped into Deceptive FAIGC Detection, Deepfake Detection, and Hallucination-based FAIGC Detection.

- Results: A structured categorization of FAIGC, an overview of existing detection methods, and an outline of future research directions.

Technical Details

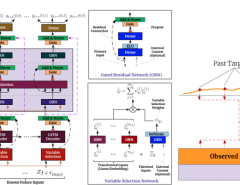

Technological frameworks used: Generative AI models, including Large Language Models (LLMs) and Diffusion Models (DMs)

Models used: Detection approaches in three categories (Deceptive FAIGC Detection, Deepfake Detection, and Hallucination-based FAIGC Detection)

Data used: AI-generated texts, images, videos, and audio
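The summary does not describe how any individual detector works, but the taxonomy itself can serve as an index for organizing detectors across the modalities listed above. The sketch below is a hypothetical illustration, not the paper's method: the `DetectorRegistry` class, the convention that a detector returns a score in [0, 1], and the choice to keep the highest score per category are all assumptions. Only the three category names and the four data modalities come from the summary.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Tuple


class DetectionCategory(Enum):
    """The three detection families named in the summary."""
    DECEPTIVE = "Deceptive FAIGC Detection"
    DEEPFAKE = "Deepfake Detection"
    HALLUCINATION = "Hallucination-based FAIGC Detection"


class Modality(Enum):
    """The data modalities listed under 'Data used'."""
    TEXT = "text"
    IMAGE = "image"
    VIDEO = "video"
    AUDIO = "audio"


# Assumed contract (hypothetical): a detector takes raw content and returns
# a score in [0, 1], where higher means "more likely to be FAIGC".
Detector = Callable[[bytes], float]


@dataclass
class DetectorRegistry:
    """Hypothetical registry that indexes detectors by (category, modality)."""
    _detectors: Dict[Tuple[DetectionCategory, Modality], List[Detector]] = field(
        default_factory=dict
    )

    def register(self, category: DetectionCategory, modality: Modality, fn: Detector) -> None:
        # Append the detector to the list stored under its taxonomy slot.
        self._detectors.setdefault((category, modality), []).append(fn)

    def for_modality(self, modality: Modality) -> Dict[DetectionCategory, List[Detector]]:
        # Collect every detector that accepts this modality, grouped by category.
        grouped: Dict[DetectionCategory, List[Detector]] = {}
        for (category, mod), fns in self._detectors.items():
            if mod is modality:
                grouped.setdefault(category, []).extend(fns)
        return grouped

    def run(self, modality: Modality, content: bytes) -> Dict[DetectionCategory, float]:
        # Assumed aggregation: keep the highest score reported in each category.
        return {
            category: max(fn(content) for fn in fns)
            for category, fns in self.for_modality(modality).items()
        }
```

For example, registering two placeholder image detectors under `DetectionCategory.DEEPFAKE` and calling `registry.run(Modality.IMAGE, data)` returns one score for that category, while a modality with no registered detectors returns an empty dict. Keying on (category, modality) keeps the taxonomy explicit, so a report can say which family of checks flagged the content rather than emitting a single opaque score.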

Potential Impact

Content creation across media (text, image, video, audio), cybersecurity firms, and the digital information verification industry.

Want to implement this idea in a business?

We have generated a startup concept here: AuthentiGuard.
