Authors: Aditya Chakravarty

Published on: May 02, 2024

Impact Score: 8.0

Arxiv code: Arxiv:2405.01004

Summary

- What is new: The paper introduces energy- and memory-efficient automatic speech recognition (ASR) models optimized for on-device use, which improve on traditional server-dependent systems in privacy, reliability, and sustainability.

- Why this is important: Traditional ASR systems depend on extensive server resources, which raises privacy, reliability, and latency concerns and contributes to a high carbon footprint.

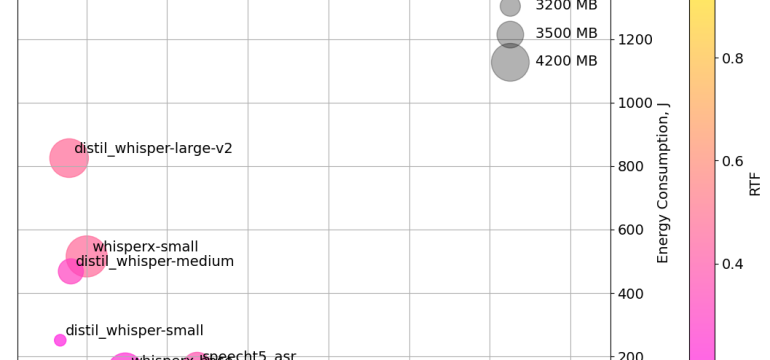

- What the research proposes: The study measures how model quantization and memory usage affect ASR performance on the NVIDIA Jetson Orin Nano, seeking an efficient balance between accuracy and energy consumption.

- Results: Reducing precision from FP32 to FP16 halves energy consumption with minimal performance loss. Model size does not reliably predict either resilience to noise or energy use, a finding that helps when choosing and optimizing models for on-device ASR.
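
To make the FP32-to-FP16 step more concrete, here is a minimal Python sketch of what halving the precision means for storage and rounding error. It is not the paper's code: the weight values and helper names are hypothetical, and it only illustrates byte counts and round-off, not energy use, which has to be measured on the device.

```python
import struct

def to_fp32_bytes(values):
    """Pack values as IEEE 754 single precision (4 bytes each)."""
    return struct.pack(f"<{len(values)}f", *values)

def to_fp16_bytes(values):
    """Pack values as IEEE 754 half precision (2 bytes each)."""
    return struct.pack(f"<{len(values)}e", *values)

def from_fp16_bytes(blob):
    """Unpack half-precision bytes back into Python floats."""
    return list(struct.unpack(f"<{len(blob) // 2}e", blob))

# Hypothetical weights, chosen only for illustration.
weights = [0.1, -0.25, 0.333, 1.5, -2.75]

fp32_blob = to_fp32_bytes(weights)
fp16_blob = to_fp16_bytes(weights)
restored = from_fp16_bytes(fp16_blob)

# Largest difference between an original weight and its FP16 round trip.
max_abs_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"FP32: {len(fp32_blob)} bytes, FP16: {len(fp16_blob)} bytes")
print(f"max round-trip error: {max_abs_error:.6f}")
```

The FP16 buffer is exactly half the size of the FP32 one, while the round-trip error for these small values stays well below 0.001. Whether a smaller memory footprint translates into lower energy use on a given board is an empirical question, which is what the paper measures.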

Technical Details

Technological frameworks used: NVIDIA Jetson Orin Nano

Models used: Transformer-based ASR models with FP32, FP16, and INT8 quantization

Data used: Clean and noisy datasets
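
The summary does not say which INT8 scheme the paper applies. As one common possibility, the sketch below shows symmetric per-tensor quantization in plain Python. The function names and weight values are hypothetical, and real toolchains add calibration and per-channel scales that are left out here.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map [-max_abs, max_abs] onto [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # Guard against an all-zero tensor, where any scale works.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale

def dequantize_int8(quantized, scale):
    """Recover approximate float values from the integer codes."""
    return [q * scale for q in quantized]

# Hypothetical weights, chosen only for illustration.
weights = [0.1, -0.25, 0.333, 1.5, -2.75]

codes, scale = quantize_int8(weights)
approx = dequantize_int8(codes, scale)

# Rounding to the nearest code bounds the error by half a quantization step.
worst_error = max(abs(a - b) for a, b in zip(weights, approx))

print("codes:", codes)
print(f"scale: {scale:.6f}, worst error: {worst_error:.6f}")
```

Each weight now fits in one byte instead of four, at the cost of an error of up to half the scale per value. That coarser rounding is the reason INT8 models are usually checked for accuracy loss on both clean and noisy audio.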

Potential Impact

Companies in the ASR market, especially those focusing on privacy-centric, reliable, and energy-efficient voice recognition technologies, could benefit. This includes tech companies developing smart devices, IoT applications, and sustainability-focused tech solutions.

