Authors: Jiayuan Wang, Q. M. Jonathan Wu, Ning Zhang

Published on: October 02, 2023

Impact Score: 7.6

arXiv code: arXiv:2310.01641

Summary

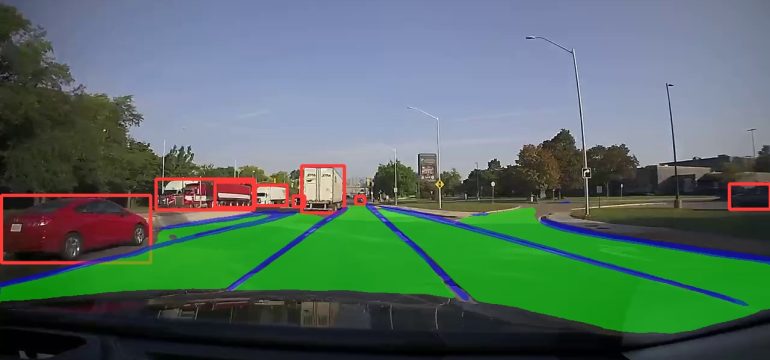

- What is new: Introduces A-YOLOM, an adaptive, real-time, and lightweight multi-task model for autonomous driving that concurrently addresses object detection, drivable area segmentation, and lane line segmentation with a unified structure.

- Why this is important: Existing models lack high precision and real-time responsiveness, and are not lightweight enough for efficient autonomous driving.

- What the research proposes: A-YOLOM combines object detection and segmentation tasks into one model with a simplified, unified structure, introducing an adaptive feature concatenation mechanism and a streamlined segmentation head.

- Results: Achieved an mAP50 of 81.1% for object detection, an mIoU of 91.0% for drivable area segmentation, and an IoU of 28.8% for lane line segmentation on the BDD100k dataset, outperforming competitors in real-world scenarios.
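
The three reported metrics all build on intersection over union (IoU). As a rough illustration only, the sketch below computes IoU for binary masks, a class-averaged mIoU for label maps, and IoU for two boxes (at mAP50, a detection is matched to a ground-truth box when their IoU is at least 0.5). The function names and the toy data are made up for this example, and the sketch does not reproduce the paper's exact BDD100k evaluation protocol.

```python
def iou(pred, target):
    """IoU of two equal-length binary masks given as flat sequences of 0/1."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same number of pixels")
    inter = sum(1 for p, t in zip(pred, target) if p and t)
    union = sum(1 for p, t in zip(pred, target) if p or t)
    # Convention chosen for this sketch: two empty masks count as a perfect match.
    return inter / union if union else 1.0


def mean_iou(pred, target, num_classes):
    """Average per-class IoU over flat label maps, skipping classes absent from both."""
    scores = []
    for c in range(num_classes):
        p = [int(x == c) for x in pred]
        t = [int(x == c) for x in target]
        if any(p) or any(t):
            scores.append(iou(p, t))
    return sum(scores) / len(scores) if scores else float("nan")


def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# Toy 8-pixel label maps (hypothetical data): 0 = background, 1 = foreground.
pred = [0, 0, 1, 1, 0, 1, 1, 1]
target = [0, 0, 0, 1, 0, 1, 1, 1]

foreground_iou = iou([int(x == 1) for x in pred], [int(x == 1) for x in target])
toy_miou = mean_iou(pred, target, num_classes=2)
overlap = box_iou((0, 0, 2, 2), (1, 0, 3, 2))
```

On this toy input the foreground mask has 4 overlapping pixels out of 5 in the union, and the two boxes overlap too little to count as a match at the 0.5 threshold. Thin structures such as lane lines lose a large share of IoU from errors of only a few pixels, which is one common reason lane line IoU tends to sit far below drivable area mIoU.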

Technical Details

Technological frameworks used: not specified

Models used: A-YOLOM
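
This summary does not say how the adaptive feature concatenation decides what to combine. One plausible reading, assumed here and not confirmed by anything above, is a learned scalar gate at each concatenation point: the extra features are appended only when the gate is open. The sketch below uses plain Python lists as stand-ins for feature channels; `adaptive_concat`, `gate_logit`, and the feature names are hypothetical and are not taken from the A-YOLOM code.

```python
import math


def adaptive_concat(neck_feat, skip_feat, gate_logit):
    """Append skip_feat to neck_feat only when sigmoid(gate_logit) > 0.5.

    Assumption for illustration: gate_logit stands in for a single learned
    scalar. Lists of channel values stand in for feature maps, and list
    concatenation stands in for channel-wise concatenation.
    """
    gate = 1.0 / (1.0 + math.exp(-gate_logit))
    if gate > 0.5:
        return list(neck_feat) + list(skip_feat)
    # Gate closed: pass the neck features through unchanged.
    return list(neck_feat)


neck = [0.2, 0.7]   # hypothetical neck features (2 channels)
skip = [0.9]        # hypothetical extra features (1 channel)

fused_open = adaptive_concat(neck, skip, gate_logit=1.0)
fused_closed = adaptive_concat(neck, skip, gate_logit=-1.0)
```

With a design like this, one segmentation head definition could serve both segmentation tasks while training decides, per task, whether the extra features are used. That would be consistent with the "unified structure" described in the summary, but it remains an assumption.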

Data used: BDD100k dataset

Potential Impact

Automakers, and in particular companies investing in autonomous driving technology, could benefit from or be disrupted by adoption of this model.
