Authors: Derek Jacoby, Tianyi Zhang, Aanchan Mohan, Yvonne Coady

Published on: April 11, 2024

Impact Score: 7.6

arXiv code: arXiv:2404.16053

Summary

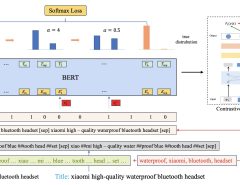

- What is new: Generating responses before the speaker finishes their utterance, so that LLM-driven spoken dialogue can match the response latency of human conversation.

- Why this is important: Response times in LLM-driven spoken dialogue are slower than the quick turn-taking seen in human conversations.

- What the research proposes: A method that understands and responds to an utterance in real time, even at the cost of missing the tail end of the speaker’s utterance.

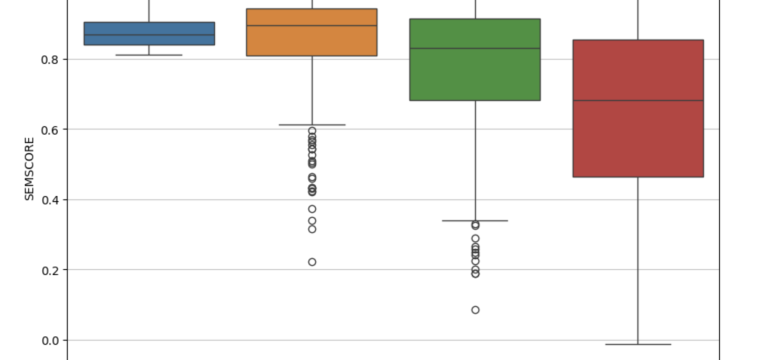

- Results: In experiments with GPT-4 and the Google NaturalQuestions (NQ) dataset, context missing from the end of a question could be filled in effectively over 60% of the time.
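To make the idea of responding before the utterance ends more concrete, here is a minimal sketch. It drops the last few words of a question and asks a model to infer the rest. The function names, the prompt wording, and the `llm` callable are all hypothetical and are not taken from the paper; the callable stands in for whatever model client is used.

```python
from typing import Callable


def truncate_utterance(utterance: str, drop_words: int) -> str:
    """Return the utterance with its last `drop_words` words removed."""
    words = utterance.split()
    if drop_words <= 0:
        return " ".join(words)
    # Slicing past the start of the list simply yields an empty list.
    return " ".join(words[:-drop_words])


def build_completion_prompt(partial: str) -> str:
    """Build an illustrative prompt (wording is an assumption)."""
    return (
        "The following question was cut off before the speaker finished. "
        "Infer the most likely complete question and answer it.\n"
        f"Partial question: {partial}"
    )


def respond_early(utterance: str, drop_words: int,
                  llm: Callable[[str], str]) -> str:
    """Simulate answering before the speaker has finished talking."""
    partial = truncate_utterance(utterance, drop_words)
    return llm(build_completion_prompt(partial))
```

Passing a stub such as `lambda prompt: prompt` as `llm` lets you inspect the exact prompt before wiring in a real model.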

Technical Details

Technological frameworks used: Groq for running LLMs, Google NaturalQuestions (NQ) dataset as the data source

Models used: GPT-4

Data used: Google NaturalQuestions (NQ)
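A figure such as "over 60% of the time" could be estimated with a small harness like the one sketched below. This is an assumption-laden illustration, not the authors' evaluation code: `complete` (the model call that guesses the full question) and `is_equivalent` (the judgment of whether the guess matches the original) are hypothetical callables, and this summary does not state how the paper made that judgment.

```python
from typing import Callable, Iterable


def recovery_rate(
    questions: Iterable[str],
    drop_words: int,
    complete: Callable[[str], str],
    is_equivalent: Callable[[str, str], bool],
) -> float:
    """Fraction of truncated questions whose completion is judged equivalent."""
    total = 0
    recovered = 0
    for question in questions:
        words = question.split()
        # Skip questions too short to leave any text after truncation.
        if len(words) <= drop_words:
            continue
        partial = " ".join(words[: len(words) - drop_words])
        guess = complete(partial)
        total += 1
        if is_equivalent(question, guess):
            recovered += 1
    return recovered / total if total else 0.0
```

In practice `complete` would wrap a GPT-4 call and `questions` would be drawn from NQ; exact string equality is far too strict as an `is_equivalent` judge and is only useful for smoke tests.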

Potential Impact

This could benefit customer service technologies, virtual assistants, and chatbot providers by shortening response times and making interactions feel more natural.

Want to implement this idea in a business?

We have generated a startup concept here: QuickTalkAI.
