Authors: Stephen Choi, William Gazeley

Published on: April 19, 2024

Impact Score: 7.6

arXiv ID: arXiv:2404.13028

Summary

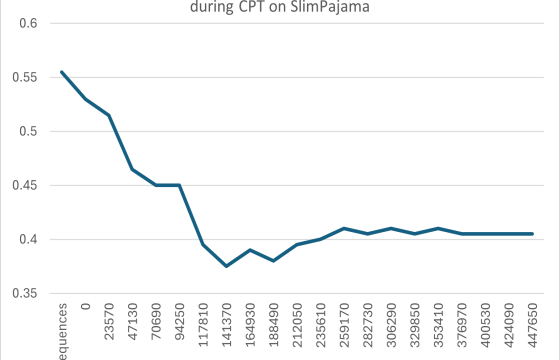

- What is new: LLM-ADE, a framework for updating large language models (LLMs) that adjusts the model architecture dynamically to combat catastrophic forgetting and double descent.

- Why this is important: Keeping LLMs up to date is difficult to do without losing previously learned information or degrading performance.

- What the research proposes: LLM-ADE modifies the model architecture dynamically for specific datasets to improve adaptability and knowledge retention.

- Results: Performance improves significantly on general knowledge benchmarks, without the drawbacks of traditional continuous training methods.
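
To make "dynamic architectural adjustments" more concrete, here is a minimal sketch of one way such an adjustment could look. The summary above does not spell out the mechanism, so everything here is an assumption made for illustration: the `Block` class, the per-block relevance scores, the two thresholds, and the rule itself (freeze blocks that matter little for the new dataset, add a new trainable block after those that matter most). It is not the paper's implementation.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """Hypothetical stand-in for one transformer block."""
    name: str
    trainable: bool = True


def adapt_architecture(blocks, scores, freeze_below, expand_above):
    """Return a new block list adjusted for a dataset.

    Assumed rule (illustrative only):
    - score < freeze_below  -> block is frozen to protect existing knowledge
    - score > expand_above  -> block stays trainable and a new trainable
                               block is inserted right after it
    - otherwise             -> block stays trainable, no expansion
    """
    adapted = []
    for block, score in zip(blocks, scores):
        if score < freeze_below:
            adapted.append(Block(block.name, trainable=False))
        else:
            adapted.append(Block(block.name, trainable=True))
            if score > expand_above:
                adapted.append(Block(block.name + "_expanded", trainable=True))
    return adapted


# Hypothetical relevance scores for a 4-block model on a new dataset.
blocks = [Block(f"block_{i}") for i in range(4)]
scores = [0.1, 0.5, 0.9, 0.2]

adapted = adapt_architecture(blocks, scores, freeze_below=0.3, expand_above=0.8)
for b in adapted:
    print(b.name, "trainable" if b.trainable else "frozen")
```

In this toy run, `block_0` and `block_3` are frozen, `block_1` keeps training, and `block_2` gains an extra trainable block. In a real model, the scores would have to come from some measurement on the target dataset, and freezing would mean disabling gradient updates for the block's parameters.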

Technical Details

Technological frameworks used: LLM-ADE

Models used: TinyLlama

Data used: Various general knowledge benchmarks

Potential Impact

The work is most relevant to companies in the AI and ML sectors, particularly those developing or deploying LLMs for real-world applications.

Want to implement this idea in a business?

We have generated a startup concept here: AdaptoAI.
