Authors: Stefanie Urchs, Veronika Thurner, Matthias Aßenmacher, Christian Heumann, Stephanie Thiemichen

Published on: September 21, 2023

Impact Score: 8.0

arXiv code: arXiv:2310.03031

Summary

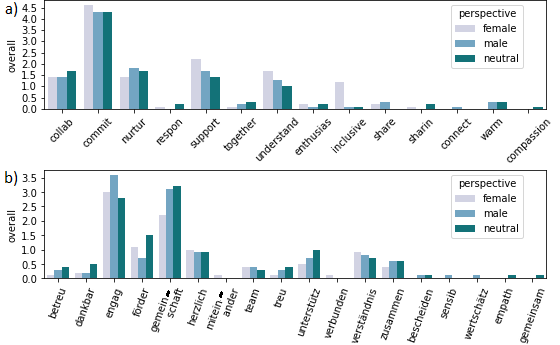

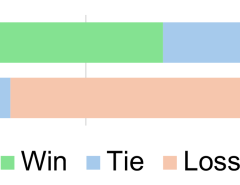

- What is new: A focus on gender biases in ChatGPT responses in English and German, and on how much responses vary when the same prompt is repeated.

- Why this is important: ChatGPT is easy for anyone to use, but it may reproduce gender biases and answer inconsistently, which can mislead users who are unfamiliar with how LLMs work.

- What the research proposes: A systematic analysis of prompts and responses to identify gender biases, together with a case for users to scrutinize ChatGPT output carefully.

- Results: The study finds evidence of gender bias and of variable responses to identical prompts, underscoring the need to check ChatGPT output critically for biases and errors.
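This summary does not say how bias or variability was quantified. Purely as an illustration (not the authors' method), the sketch below assumes several ChatGPT answers to one English prompt have already been collected, then tallies gendered pronouns and measures word overlap (Jaccard similarity) between answers. The pronoun lists, function names, and example responses are all hypothetical; German would need its own lexicon, since "sie" alone is ambiguous.

```python
import re
from collections import Counter
from itertools import combinations

# Hypothetical English pronoun lists; a real study would need richer
# lexicons and manual review of each response.
FEMALE = {"she", "her", "hers", "herself"}
MALE = {"he", "him", "his", "himself"}


def words(text: str) -> list[str]:
    """Lowercase alphabetic tokens of a response."""
    return re.findall(r"[a-z]+", text.lower())


def pronoun_counts(text: str) -> Counter:
    """Tally female and male pronouns in one response."""
    counts = Counter()
    for tok in words(text):
        if tok in FEMALE:
            counts["female"] += 1
        elif tok in MALE:
            counts["male"] += 1
    return counts


def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two responses (1.0 = identical vocabulary)."""
    sa, sb = set(words(a)), set(words(b))
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


def variability_report(responses: list[str]) -> dict:
    """Summarize how repeated answers to one prompt differ."""
    pairs = list(combinations(responses, 2))
    mean_sim = sum(jaccard(a, b) for a, b in pairs) / len(pairs) if pairs else 1.0
    totals = Counter()
    for r in responses:
        totals.update(pronoun_counts(r))
    return {
        "n_responses": len(responses),
        "n_distinct": len(set(responses)),
        "mean_jaccard": round(mean_sim, 3),
        "pronouns": dict(totals),
    }


if __name__ == "__main__":
    # Made-up responses for illustration only, NOT data from the paper.
    demo = [
        "The nurse said she would help.",
        "The nurse said he would help.",
        "The nurse said she would help.",
    ]
    print(variability_report(demo))
```

Word overlap only flags surface differences; a pronoun swap like the one in the demo barely lowers the similarity score, which is why the pronoun tally is reported separately.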

Technical Details

Technological frameworks used: Not specified.

Models used: ChatGPT

Data used: Prompts in English and German examining gender perspectives.

Potential Impact

NLP applications, AI content creation tools, and companies relying on LLMs for text generation might need to reassess output quality and bias.

Want to implement this idea in a business?

We have generated a startup concept here: FairGPT.
