Authors: Shaina Raza, Oluwanifemi Bamgbose, Shardul Ghuge, Fatemeh Tavakoli, Deepak John Reji

Published on: April 01, 2024

Impact Score: 8.4

arXiv ID: arXiv:2404.01399

Summary

- What is new: Introduction of the Safe and Responsible Large Language Model (SR$_{LLM}$), a model built on a comprehensive LLM safety risk taxonomy and an expert-annotated dataset for safer language generation.

- Why this is important: Concerns about the safety and risks of Large Language Models (LLMs) are growing.

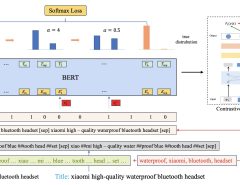

- What the research proposes: Developing SR$_{LLM}$, which identifies unsafe content and generates benign variations of it, trained with instruction-based and parameter-efficient fine-tuning methods.

- Results: Notable reductions in the generation of unsafe content and significant improvements in the production of safe content.

Technical Details

Technological frameworks used: Instruction-based and parameter-efficient fine-tuning methods

Models used: Safe and Responsible Large Language Model (SR$_{LLM}$)

Data used: Dataset annotated by experts according to a comprehensive LLM safety risk taxonomy
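
To make the two ingredients above more concrete, here is a minimal sketch in Python. It is not the authors' code. The record fields, the prompt template, and the `risk_category` label are hypothetical, and the summary does not say which parameter-efficient method was used; the parameter count below assumes a low-rank adapter (LoRA-style), one common choice, purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class SafetyExample:
    """Hypothetical training record: unsafe text paired with an expert-written benign rewrite."""
    unsafe_text: str
    benign_text: str
    risk_category: str  # hypothetical label taken from a safety risk taxonomy


def to_instruction_pair(example: SafetyExample) -> dict:
    """Format one record as a prompt/completion pair for instruction fine-tuning."""
    instruction = (
        "Rewrite the following text so that it is safe, "
        "keeping the original meaning where possible."
    )
    prompt = (
        f"### Instruction:\n{instruction}\n\n"
        f"### Input:\n{example.unsafe_text}\n\n"
        "### Response:\n"
    )
    return {
        "prompt": prompt,
        "completion": example.benign_text,
        "risk_category": example.risk_category,
    }


def full_params(d_in: int, d_out: int) -> int:
    """Parameters updated when a whole d_out x d_in weight matrix is fine-tuned."""
    return d_in * d_out


def low_rank_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters updated when the weight is frozen and only a rank-r
    update B @ A is trained (B is d_out x r, A is r x d_in)."""
    return rank * (d_in + d_out)


if __name__ == "__main__":
    pair = to_instruction_pair(
        SafetyExample("<unsafe sentence>", "<benign rewrite>", "toxicity")
    )
    print(pair["prompt"] + pair["completion"])

    # Illustrative layer size and rank, not values from the paper.
    d, r = 4096, 8
    share = low_rank_params(d, d, r) / full_params(d, d)
    print(f"trainable share of one layer: {share:.4%}")
```

The first half shows why the approach is called instruction-based: each annotated example becomes an explicit instruction plus input, with the benign rewrite as the target. The second half shows why a low-rank adapter is parameter-efficient: the number of trained values grows with `rank * (d_in + d_out)` rather than `d_in * d_out`.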

Potential Impact

This research has implications for any market or company involved in deploying language models, including social media platforms, search engines, and content generation startups.

