Authors: Hanting Chen, Zhicheng Liu, Xutao Wang, Yuchuan Tian, Yunhe Wang

Published on: March 29, 2024

Impact Score: 8.2

arXiv code: arXiv:2403.19928

Summary

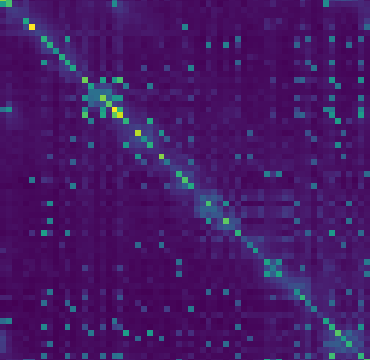

- What is new: DiJiang introduces Frequency Domain Kernelization, an approach that converts an existing pre-trained Transformer into a linear-complexity model at minimal training cost.

- Why this is important: Existing strategies for improving attention mechanisms in Transformers typically require extensive retraining, which is impractical for large models with many parameters.

- What the research proposes: DiJiang approximates the attention kernel with a weighted Quasi-Monte Carlo sampling method and builds the kernelization on Discrete Cosine Transform (DCT) operations, which reduces the computational load without extensive retraining.

- Results: Performance comparable to the original Transformer, with significantly lower training cost and faster inference.
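
To make the idea in the bullets above concrete, here is a minimal NumPy sketch of kernelized (linear) attention with a DCT-based feature map. It is an illustration under stated assumptions, not the authors' implementation: the function names (`dct_matrix`, `qmc_frequencies`, `feature_map`, `linear_attention`, `quadratic_reference`) are hypothetical, the equal weights `w` stand in for whatever weighting the paper learns, and the midpoint grid pushed through the inverse normal CDF is only a simple deterministic stand-in for the paper's weighted Quasi-Monte Carlo sampling.

```python
import numpy as np
from statistics import NormalDist


def dct_matrix(d):
    """Orthonormal DCT-II matrix of size d x d (rows index frequency)."""
    n = np.arange(d)
    k = n.reshape(-1, 1)
    C = np.sqrt(2.0 / d) * np.cos(np.pi * (2 * n + 1) * k / (2 * d))
    C[0, :] /= np.sqrt(2.0)
    return C


def qmc_frequencies(d):
    """Assumption: evenly spaced midpoints mapped through the inverse
    normal CDF, used here as a stand-in for QMC sampling."""
    nd = NormalDist()
    return np.array([nd.inv_cdf((i + 0.5) / d) for i in range(d)])


def feature_map(X, C, t, w):
    """Positive feature map: weighted exponential of scaled DCT coefficients."""
    return np.exp((X @ C.T) * t) * w


def linear_attention(Q, K, V, C, t, w):
    """Attention in O(n) with respect to sequence length n."""
    phi_q = feature_map(Q, C, t, w)      # (n, d)
    phi_k = feature_map(K, C, t, w)      # (n, d)
    kv = phi_k.T @ V                     # (d, d_v), summed over keys once
    z = phi_k.sum(axis=0)                # (d,), normalizer statistics
    return (phi_q @ kv) / (phi_q @ z)[:, None]


def quadratic_reference(Q, K, V, C, t, w):
    """Same attention, but forming the full n x n weight matrix."""
    phi_q = feature_map(Q, C, t, w)
    phi_k = feature_map(K, C, t, w)
    A = phi_q @ phi_k.T                  # (n, n)
    A = A / A.sum(axis=1, keepdims=True)
    return A @ V


rng = np.random.default_rng(0)
n, d, d_v = 6, 8, 4
Q = 0.5 * rng.standard_normal((n, d))
K = 0.5 * rng.standard_normal((n, d))
V = rng.standard_normal((n, d_v))

C = dct_matrix(d)
t = qmc_frequencies(d)
w = np.ones(d) / np.sqrt(d)              # assumption: equal weights

out = linear_attention(Q, K, V, C, t, w)
ref = quadratic_reference(Q, K, V, C, t, w)
print(out.shape, np.allclose(out, ref))
```

The two functions compute the same result; the linear version simply reorders the matrix products so that the n x n attention matrix is never formed, which is where the linear complexity in sequence length comes from.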

Technical Details

Technological frameworks used: Frequency Domain Kernelization

Models used: DiJiang, Vanilla Transformer, LLaMA2-7B

Data used: not specified

Potential Impact

Companies that develop or deploy large language models could use this approach as a cost-effective way to scale, since it lowers training cost and speeds up inference without retraining from scratch.

