Authors: Daphne Cornelisse, Eugene Vinitsky

Published on: March 28, 2024

Impact Score: 7.2

arXiv code: arXiv:2403.19648

Summary

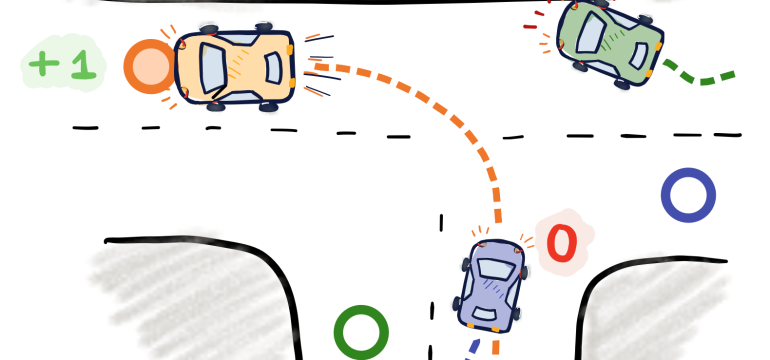

- What is new: The research introduces Human-Regularized PPO (HR-PPO), a multi-agent algorithm for training autonomous driving agents. It adds a small penalty for deviating from human driving behavior and needs only 30 minutes of human driving data.

- Why this is important: Autonomous vehicles must coordinate with human drivers, but simulation agents developed through pure imitation learning have high collision rates. That makes them unreliable partners for training and testing.

- What the research proposes: HR-PPO aims for agents that are both realistic and effective in multi-agent, closed-loop settings by combining reinforcement learning with a small amount of human demonstration data.

- Results: HR-PPO agents achieved a 93% success rate, a 3.5% off-road rate, and a 3% collision rate. They also coordinated noticeably better with human driving, especially in interactive scenarios.
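To make the core idea concrete, here is a minimal Python/NumPy sketch of a human-regularized PPO loss: the standard PPO clipped surrogate plus a penalty for deviating from a human reference policy. It assumes a discrete action space, a frozen reference policy obtained by imitation learning on the human data, and a KL divergence penalty with a hypothetical weight `lam`. The function names, the KL direction, and the default values are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def log_softmax(logits):
    # Numerically stable log-softmax over the last axis.
    shifted = logits - logits.max(axis=-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))


def hr_ppo_loss(new_logits, old_log_probs, actions, advantages,
                human_logits, clip_eps=0.2, lam=0.05):
    """Clipped PPO policy loss plus a KL penalty toward a human reference policy.

    Hypothetical helper for illustration only.
    new_logits:    (batch, n_actions) logits of the policy being trained
    old_log_probs: (batch,) log-probs of the taken actions under the rollout policy
    actions:       (batch,) integer indices of the taken actions
    advantages:    (batch,) advantage estimates
    human_logits:  (batch, n_actions) logits of a frozen imitation-learned policy
    """
    new_log_probs_all = log_softmax(new_logits)
    idx = np.arange(len(actions))
    new_log_probs = new_log_probs_all[idx, actions]

    # Standard PPO clipped surrogate; negated because we minimize a loss.
    ratio = np.exp(new_log_probs - old_log_probs)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1 - clip_eps, 1 + clip_eps) * advantages
    ppo_loss = -np.mean(np.minimum(unclipped, clipped))

    # Assumed regularizer: KL(pi_theta || pi_human), averaged over the batch.
    human_log_probs_all = log_softmax(human_logits)
    p = np.exp(new_log_probs_all)
    kl = np.sum(p * (new_log_probs_all - human_log_probs_all), axis=-1).mean()

    return ppo_loss + lam * kl, ppo_loss, kl
```

If the trained policy matches the human reference exactly, the KL term is zero and the loss reduces to plain PPO. A small `lam` lets reinforcement learning push toward task success while keeping behavior close to human driving.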

Technical Details

Technological frameworks used: HR-PPO (Human-Regularized Proximal Policy Optimization)

Methods used: Reinforcement learning, multi-agent self-play

Data used: 30 minutes of human driving data

Potential Impact

Autonomous vehicle companies, transportation systems, and developers of self-driving software and simulators could either benefit from or be disrupted by this work.

Want to implement this idea in a business?

We have generated a startup concept here: DriveSync AI.