Authors: Bahareh Harandizadeh, Abel Salinas, Fred Morstatter

Published on: March 22, 2024

Impact Score: 7.6

arXiv code: arXiv:2403.14988

Summary

- What is new: The paper examines how reward models for LLMs handle subjective risk assessment, with a focus on Information Hazards.

- Why this is important: Risk assessment in Large Language Models (LLMs) is inherently subjective, and existing models do not adequately perceive and categorize different types of risk.

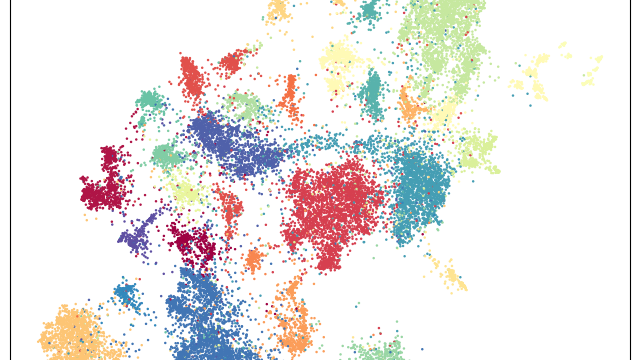

- What the research proposes: A detailed analysis of the Anthropic Red-team dataset that categorizes the major risk types, together with a regression model built to better understand how LLMs respond to Information Hazards.

- Results: LLMs tend to underestimate Information Hazards compared to other risks and show a potential vulnerability to jailbreaking attacks in these scenarios, which underlines the need for enhanced AI safety measures.

Technical Details

Technological frameworks used: Anthropic Red-team dataset

Models used: Regression models

Data used: Preference-based training data
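
To make the regression idea concrete, here is a minimal sketch of regressing a harm score on risk-category indicators. It is not the authors' model: the category labels other than Information Hazards, the `harm_score` scale (higher means rated more harmful), the helper names, and every number in the toy data are assumptions made up for illustration. With only categorical predictors, each coefficient is the difference between that category's mean score and the baseline category's mean.

```python
from typing import Dict, List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_category_regression(
    categories: Sequence[str], scores: Sequence[float], baseline: str
) -> Dict[str, float]:
    """Ordinary least squares of score on dummy-coded risk categories.

    The baseline category is absorbed into the intercept.
    """
    levels = sorted(set(categories) - {baseline})
    design = [
        [1.0] + [1.0 if cat == lvl else 0.0 for lvl in levels]
        for cat in categories
    ]
    k = len(levels) + 1
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * y for row, y in zip(design, scores)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)
    return dict(zip(["intercept"] + levels, beta))


# Hypothetical toy data: NOT taken from the paper or the dataset.
categories = (
    ["information_hazard"] * 3 + ["malicious_use"] * 3 + ["discrimination"] * 3
)
harm_score = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 2.0, 3.0, 4.0]

coefs = fit_category_regression(categories, harm_score, baseline="malicious_use")
for name, value in coefs.items():
    print(f"{name}: {value:+.2f}")
```

In this toy setup, a negative coefficient on `information_hazard` would mean that category is scored as less harmful than the baseline. A real analysis would add further predictors and report uncertainty on each coefficient.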

Potential Impact

Companies involved in the development or use of LLMs, AI safety and security firms, and businesses relying on AI for data handling and decision-making processes could be significantly affected.

