Authors: Jiafu An, Difang Huang, Chen Lin, Mingzhu Tai

Published on: March 22, 2024

Impact Score: 8.0

arXiv code: arXiv:2403.15281

Summary

- What is new: This study investigates the gender and racial biases of OpenAI’s GPT when it evaluates entry-level job resumes, in contrast to the human biases found in traditional decision-making.

- Why this is important: Social biases in decision-making can lead to unequal economic outcomes for underrepresented groups.

- What the research proposes: Using Large Language Model-based AI, specifically OpenAI’s GPT, to assess resumes and test whether it can support unbiased decision-making.

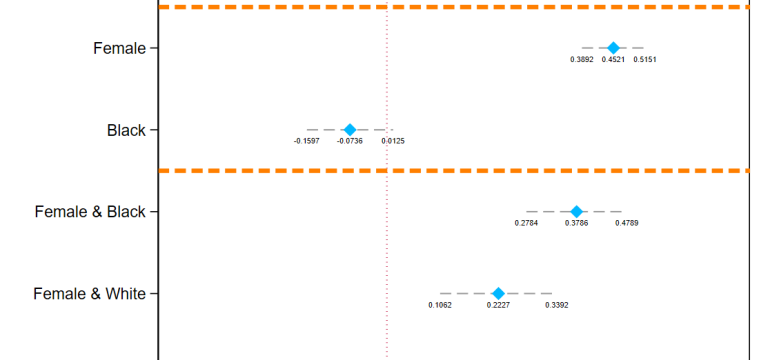

- Results: The AI showed biases of its own: it gave higher scores to female candidates and lower scores to black male candidates with similar qualifications. This suggests that the model may mitigate gender bias but not racial bias.

Technical Details

Technological frameworks used: OpenAI’s GPT

Models used: Large Language Models (LLMs)

Data used: Approximately 361,000 resumes with randomized social identities.
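
To make the idea of "randomized social identities" concrete, here is a minimal Python sketch of how such an audit could be structured. It is not the authors' code. The group labels, the `stub_score` function, and the choice of reference group are all hypothetical, and the stub stands in for a real LLM call, so it makes no request to any API. Because identities are assigned at random, independent of resume content, any systematic gap in average scores between groups could be attributed to the scorer rather than to the resumes.

```python
import random
from statistics import mean

# Hypothetical group labels (assumption); the study's actual identity
# signals are not reproduced here.
GROUPS = ["white_female", "white_male", "black_female", "black_male"]


def assign_identities(resume_ids, seed=0):
    """Randomly assign one group to each resume, independent of its content."""
    rng = random.Random(seed)
    return {rid: rng.choice(GROUPS) for rid in resume_ids}


def stub_score(resume_id, group):
    """Placeholder scorer (assumption): stands in for an LLM call.

    It depends only on the resume, never on the group, so it is unbiased
    by construction.
    """
    return 50 + (resume_id % 10)


def mean_score_by_group(assignments, scorer):
    """Average the scorer's output within each assigned group."""
    scores = {g: [] for g in GROUPS}
    for rid, group in assignments.items():
        scores[group].append(scorer(rid, group))
    return {g: mean(v) for g, v in scores.items() if v}


def gaps_vs_reference(group_means, reference="white_male"):
    """Score gap of each group relative to a (hypothetical) reference group."""
    ref = group_means[reference]
    return {g: m - ref for g, m in group_means.items()}


assignments = assign_identities(range(10_000))
group_means = mean_score_by_group(assignments, stub_score)
gaps = gaps_vs_reference(group_means)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.3f}")
```

With the unbiased stub, the printed gaps should sit close to zero. Replacing `stub_score` with a scorer that sees the identity signal is where a gap like the one reported above could show up.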

Potential Impact

HR and recruitment industries, diversity and inclusion services, companies seeking to eliminate hiring biases.

Want to implement this idea in a business?

We have generated a startup concept here: FairHireAI.
