SafeSpeak AI

Elevator Pitch: SafeSpeak AI makes AI-generated content safer for everyone. With our DINM method, we detoxify large language models so that businesses can offer user experiences free from harmful content, with little impact on model performance. Embrace the future of responsible AI with SafeSpeak AI.

Concept

Detoxifying Large Language Models

Objective

To provide a service that reduces toxicity in Large Language Models (LLMs), ensuring safer interactions and content generation.

Solution

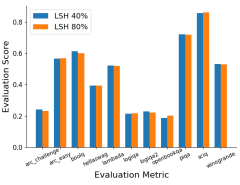

Apply the novel Detoxifying with Intraoperative Neural Monitoring (DINM) approach to detoxify LLMs efficiently, with minimal impact on performance.

Revenue Model

Subscription-based model for platforms and developers, alongside premium consultancy for custom detoxification projects.

Target Market

Social media platforms, chatbot developers, content creation services, and any business utilizing large language models for text generation.

Expansion Plan

Target tech companies in English-speaking markets first, then expand to non-English languages and enter the educational and governmental sectors.

Potential Challenges

Continuously adapting to new forms of toxicity and linguistic patterns while ensuring data privacy and security.

Customer Problem

Toxic outputs from LLMs can undermine user experience and pose reputational risks to companies.

Regulatory and Ethical Issues

Adherence to GDPR and other data protection laws; commitment to unbiased and respectful content moderation.

Disruptiveness

Introducing a more effective and efficient method for detoxifying LLMs, which can set a new standard in AI content moderation.

Check out our related research summary: here.
