Authors: Stefanos Laskaridis, Kleomenis Kateveas, Lorenzo Minto, Hamed Haddadi

Published on: March 19, 2024

Impact Score: 7.6

arXiv code: 2403.12844

Summary

- What is new: The first systematic study of on-device execution of Large Language Models (LLMs), evaluating performance, energy consumption, and accuracy.

- Why this is important: The run-time requirements of LLMs have so far limited their deployment on mobile devices.

- What the research proposes: The authors developed MELT, an automation infrastructure for the headless execution and benchmarking of LLMs across a range of devices.

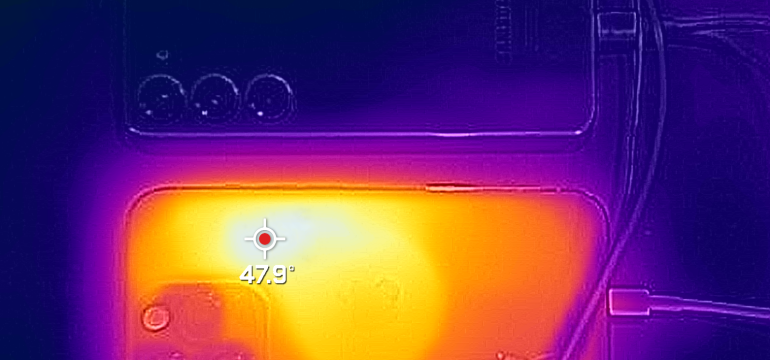

- Results: The study highlights performance differences across devices, shows that LLM inference is memory-bound, finds that quantization reduces memory requirements at some cost to accuracy, and identifies energy consumption and thermal behavior as challenges for continuous execution.
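The memory-bound and quantization findings can be illustrated with back-of-the-envelope arithmetic. The sketch below is not taken from the paper or from MELT. It assumes a hypothetical 7B-parameter model and a hypothetical device memory bandwidth of 50 GB/s, counts model weights only (ignoring the KV cache, activations, and quantization metadata), and assumes that generating each token reads every weight from memory once, which caps decode speed at roughly bandwidth divided by weight size.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed to hold the model weights, in GB (1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def max_decode_tokens_per_s(weight_gb: float, bandwidth_gb_s: float) -> float:
    """Upper bound on decode speed in the memory-bound regime.

    Assumes every generated token streams all weights from memory once,
    so throughput cannot exceed bandwidth / weight size.
    """
    return bandwidth_gb_s / weight_gb


if __name__ == "__main__":
    params = 7e9      # hypothetical 7B-parameter model
    bandwidth = 50.0  # hypothetical device memory bandwidth, GB/s
    for bits in (16, 8, 4):
        gb = weight_memory_gb(params, bits)
        tps = max_decode_tokens_per_s(gb, bandwidth)
        print(f"{bits:>2}-bit weights: {gb:5.1f} GB, at most {tps:5.1f} tokens/s")
```

Under these assumptions, halving the bits per weight halves the memory footprint and roughly doubles the throughput ceiling, which is why quantization is attractive on memory-constrained devices even though it can cost accuracy.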

Technical Details

Technological frameworks used: MELT, which supports Android, iOS, and Nvidia Jetson devices

Models used: Popular instruction-fine-tuned LLMs

Data used: Benchmark measurements of performance, memory and energy requirements, and accuracy
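One simple way to turn raw energy measurements into figures that can be compared across devices is to normalize them per generated token and express them as battery charge. This is a minimal sketch under stated assumptions, not MELT's actual methodology: the function names are hypothetical, the average power is assumed to come from some external measurement, and the battery is modeled at a single nominal voltage (1 mAh = 3.6 coulombs).

```python
def energy_per_token_j(avg_power_w: float, duration_s: float, tokens: int) -> float:
    """Energy per generated token (J), from average power over a generation run."""
    if tokens <= 0:
        raise ValueError("tokens must be positive")
    return avg_power_w * duration_s / tokens


def discharge_mah(energy_j: float, battery_voltage_v: float) -> float:
    """Convert energy (J) to battery charge (mAh) at a nominal voltage.

    charge (C) = energy (J) / voltage (V); 1 mAh = 3.6 C.
    """
    return energy_j / battery_voltage_v / 3.6


if __name__ == "__main__":
    # Hypothetical run: 5 W average draw for 60 s while generating 300 tokens.
    per_token = energy_per_token_j(5.0, 60.0, 300)
    total_mah = discharge_mah(5.0 * 60.0, 3.85)  # hypothetical 3.85 V battery
    print(f"{per_token:.2f} J/token, {total_mah:.1f} mAh for the whole run")
```

Normalizing per token makes runs of different lengths comparable, while the mAh figure relates the cost directly to a phone's battery capacity.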

Potential Impact

Mobile devices, privacy-focused applications, edge computing, hardware manufacturers, and potentially AI/ML service providers

