Authors: Guy Tennenholtz, Yinlam Chow, Chih-Wei Hsu, Jihwan Jeong, Lior Shani, Azamat Tulepbergenov, Deepak Ramachandran, Martin Mladenov, Craig Boutilier

Published on: October 06, 2023

Impact Score: 7.2

arXiv ID: arXiv:2310.04475

Summary

- What is new: Using large language models (LLMs) to interpret and interact with embeddings directly, turning abstract vectors into understandable narratives.

- Why this is important: While embeddings compress complex information efficiently, they are often challenging to interpret directly.

- What the research proposes: Employing LLMs to translate embeddings into natural-language narratives, making the embedded information accessible and interpretable.

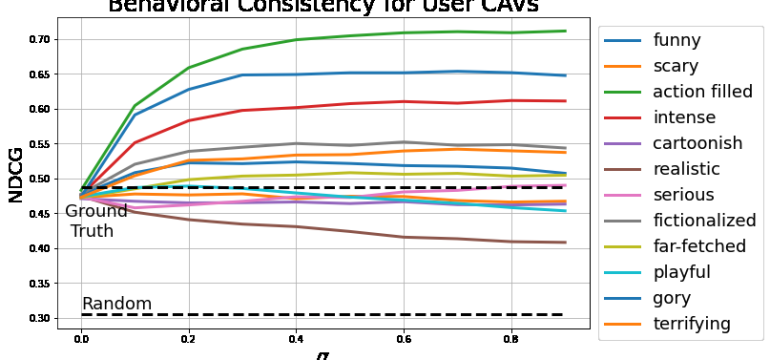

- Results: Enhanced concept activation vectors (CAVs), communicated novel embedded entities, and decoded user preferences in recommender systems, making embeddings more interpretable.
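One common way to let an LLM "read" a raw vector is to project it, with a small trained adapter, into the model's token-embedding space and prepend the result to the prompt as "soft tokens". The sketch below shows only that plumbing, under assumed sizes. The linear adapter, the dimensions, and the names make_adapter, embedding_to_soft_tokens and build_llm_input are hypothetical illustrations, not the authors' code, and the adapter here is left untrained.

```python
import numpy as np

# Hypothetical sizes (assumptions): a 32-d domain embedding (e.g. a movie or
# user vector), a 64-d LLM token-embedding space, and 4 soft tokens per vector.
DOMAIN_DIM, LLM_DIM, N_SOFT = 32, 64, 4


def make_adapter(domain_dim, llm_dim, n_soft, seed=0):
    """Randomly initialise a hypothetical linear adapter (would be trained in practice)."""
    rng = np.random.default_rng(seed)
    weight = rng.normal(0.0, 0.02, size=(domain_dim, n_soft * llm_dim))
    bias = np.zeros(n_soft * llm_dim)
    return weight, bias


def embedding_to_soft_tokens(e, weight, bias, n_soft, llm_dim):
    """Project one domain embedding into n_soft vectors in the LLM's token space."""
    return (e @ weight + bias).reshape(n_soft, llm_dim)


def build_llm_input(soft_tokens, prompt_token_embeddings):
    """Prepend the soft tokens to the already-embedded text prompt."""
    return np.concatenate([soft_tokens, prompt_token_embeddings], axis=0)


# Usage with random stand-ins for a real embedding and a 10-token text prompt.
rng = np.random.default_rng(1)
domain_embedding = rng.normal(size=DOMAIN_DIM)
prompt_embeddings = rng.normal(size=(10, LLM_DIM))

W, b = make_adapter(DOMAIN_DIM, LLM_DIM, N_SOFT)
soft = embedding_to_soft_tokens(domain_embedding, W, b, N_SOFT, LLM_DIM)
llm_input = build_llm_input(soft, prompt_embeddings)
print(llm_input.shape)  # (14, 64)
```

The LLM would then consume llm_input in place of ordinary token embeddings; training the adapter (and possibly the LLM) on text paired with embeddings is what would make the generated narratives meaningful.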

Technical Details

Technological frameworks used: Large Language Models (LLMs)

Models used: Concept activation vectors (CAVs), Recommender systems

Data used: Diverse tasks, including describing novel embedded entities and user preferences
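For context on the CAVs mentioned above: in the standard construction, a concept activation vector is the unit normal of a linear classifier that separates embeddings exhibiting a concept from those that do not. The minimal sketch below assumes logistic regression fit by plain gradient descent, synthetic 8-d embeddings, and a hypothetical "funny" concept; it shows only how a CAV is obtained and used to score an embedding, not how the paper uses an LLM to describe it.

```python
import numpy as np


def fit_cav(pos, neg, lr=0.1, steps=500):
    """Fit logistic regression separating concept (pos) from non-concept (neg)
    embeddings with gradient descent; return the unit-norm weight vector."""
    X = np.vstack([pos, neg])
    y = np.concatenate([np.ones(len(pos)), np.zeros(len(neg))])
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(concept)
        err = p - y                              # log-loss gradient w.r.t. logits
        w -= lr * X.T @ err / len(y)
        b -= lr * err.mean()
    return w / np.linalg.norm(w)


def concept_score(e, cav):
    """Signed projection of an embedding onto the concept direction."""
    return float(e @ cav)


# Synthetic data (assumption): the hypothetical "funny" concept shifts dimension 0.
rng = np.random.default_rng(0)
funny = rng.normal(size=(200, 8)) + np.eye(8)[0] * 2.0
not_funny = rng.normal(size=(200, 8)) - np.eye(8)[0] * 2.0

cav = fit_cav(funny, not_funny)
print(round(concept_score(3.0 * np.eye(8)[0], cav), 2))
```

A positive score means the embedding lies on the "concept" side of the learned direction; an LLM-based approach would aim to explain in words what moving along such a direction changes.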

Potential Impact

Data analytics, AI-driven recommendation platforms, and companies that rely on interpreting complex data could benefit significantly or be disrupted.

Want to implement this idea in a business?

We have generated a startup concept here: NarrEmbed.
