Authors: Derong Xu, Ziheng Zhang, Zhihong Zhu, Zhenxi Lin, Qidong Liu, Xian Wu, Tong Xu, Xiangyu Zhao, Yefeng Zheng, Enhong Chen

Published on: February 28, 2024

Impact Score: 8.0

arXiv code: arXiv:2402.18099

Summary

- What is new: Introduces MedLaSA, a novel strategy for editing specific medical knowledge in large language models (LLMs) without affecting unedited knowledge.

- Why this is important: Existing model editing methods struggle with the specialization and complexity of medical knowledge.

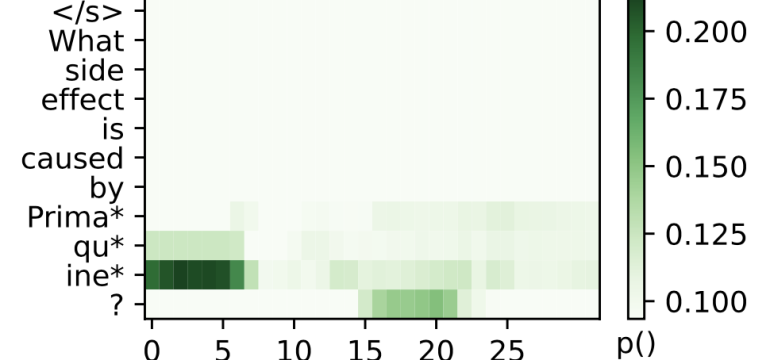

- What the research proposes: MedLaSA first uses causal tracing to locate the neurons where a piece of knowledge is stored, then adds scalable adapters to the dense layers of the LLM so that the edit is applied where that knowledge resides.

- Results: MedLaSA showed high editing efficiency in experiments with medical LLMs, accurately modifying targeted knowledge without impacting other information.
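To make the "scalable adapter" idea in the summary more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the low-rank adapter form, the function names (`normalize_scores`, `adapted_layer`), and the rule that turns per-layer tracing scores into scales are all assumptions made for illustration, since this summary does not spell out how MedLaSA computes them.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def normalize_scores(scores: List[float]) -> List[float]:
    """Map hypothetical per-layer causal-tracing scores to scales in [0, 1].

    Assumption: a layer that matters more for a fact gets a larger scale.
    """
    top = max(scores)
    return [s / top if top > 0 else 0.0 for s in scores]


def adapted_layer(x: Vector, weight: Matrix, down: Matrix, up: Matrix,
                  scale: float) -> Vector:
    """Dense layer output plus a scaled low-rank adapter update.

    Computes weight @ x + scale * up @ (down @ x). The frozen weight is
    untouched; only the adapter (down, up) would be trained during editing.
    """
    base = matvec(weight, x)
    delta = matvec(up, matvec(down, x))
    return [b + scale * d for b, d in zip(base, delta)]


# Toy example: a 2x2 identity layer with a rank-1 adapter.
weight = [[1.0, 0.0], [0.0, 1.0]]
down = [[1.0, 1.0]]        # projects 2 dimensions down to 1
up = [[0.5], [0.5]]        # projects 1 dimension back up to 2
x = [2.0, 4.0]

scales = normalize_scores([1.0, 4.0, 2.0])   # one hypothetical score per layer
for layer_index, scale in enumerate(scales):
    print(layer_index, adapted_layer(x, weight, down, up, scale))
```

With a scale of 0 the layer behaves exactly like the original model, which is one way to picture how unrelated knowledge could be left unaffected; with a scale of 1 the adapter's full update is applied.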

Technical Details

Technological frameworks used: Layer-wise Scalable Adapter strategy (MedLaSA)

Models used: Medical large language models (LLMs)

Data used: Two benchmark datasets specifically built for evaluating medical model editing

Potential Impact

Healthcare and medical services, AI-driven diagnostic tools, companies developing or utilizing LLMs for medical applications

Want to implement this idea in a business?

We have generated a startup concept here: MediCorrect.
