Authors: Sunghyeon Woo, Baeseong Park, Byeongwook Kim, Minjung Jo, Sejung Kwon, Dongsuk Jeon, Dongsoo Lee

Published on: February 27, 2024

Impact Score: 8.2

arXiv code: arXiv:2402.17812

Summary

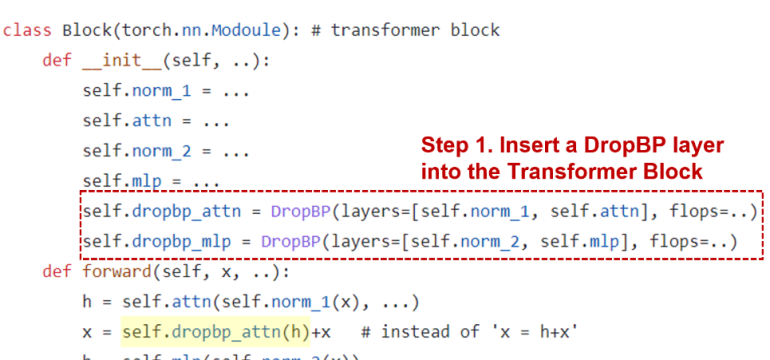

- What is new: DropBP, a technique that reduces the computational cost of training by randomly dropping layers during backward propagation while leaving forward propagation unaffected.

- Why this is important: Training deep neural networks is computationally expensive, and the cost comes from both forward and backward propagation.

- What the research proposes: DropBP randomly drops layers during backward propagation and computes each layer's sensitivity to assign it a drop rate, improving training efficiency without compromising accuracy.

- Results: Combined with QLoRA, DropBP reduces training time by 44%, speeds up convergence by 1.5x, and enables training with sequence lengths 6.2x larger on an NVIDIA A100 80GiB GPU.
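To make the idea of dropping layers only in the backward pass concrete, here is a minimal toy sketch in plain Python. It is not the authors' implementation. It assumes a stack of scalar residual blocks of the form `h + w * tanh(h)`, a squared-error loss, and hand-written gradients; the function names and the uniform drop rates are hypothetical. The sensitivity-based assignment of drop rates described above is not reproduced here.

```python
import math
import random


def forward(x, weights):
    """Run every residual block h <- h + w * tanh(h); return all activations."""
    acts = [x]
    for w in weights:
        h = acts[-1]
        acts.append(h + w * math.tanh(h))
    return acts


def sample_drop_mask(drop_rates, rng):
    """Decide independently per block whether its backward pass is skipped."""
    return [rng.random() < p for p in drop_rates]


def backward(acts, weights, target, drop_mask):
    """Backpropagate 0.5 * (out - target)**2, skipping the masked blocks."""
    grad_h = acts[-1] - target          # dLoss / d(final activation)
    grad_w = [0.0] * len(weights)
    for i in reversed(range(len(weights))):
        if drop_mask[i]:
            # Dropped block: no weight gradient is computed, and the incoming
            # gradient passes through the skip connection unchanged.
            continue
        t = math.tanh(acts[i])
        grad_w[i] = grad_h * t
        grad_h = grad_h * (1.0 + weights[i] * (1.0 - t * t))
    return grad_w


# Usage: the forward pass is the same whatever the mask is; only the
# backward pass does less work when blocks are dropped.
rng = random.Random(0)
weights = [0.5, -0.3, 0.8, 0.1]
drop_rates = [0.5] * len(weights)       # hypothetical uniform drop rates
acts = forward(1.0, weights)
mask = sample_drop_mask(drop_rates, rng)
grads = backward(acts, weights, target=0.0, drop_mask=mask)
```

In this sketch a dropped block contributes a zero weight gradient for that step, so the saving comes entirely from the skipped backward computation. In a real framework the same effect would have to be obtained through the autograd machinery rather than hand-written gradients.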

Technical Details

Technological frameworks used: Backpropagation, QLoRA

Models used: LLaMA2-70B

Data used: Not specified

Potential Impact

Companies in AI development and cloud computing, as well as industries that rely on large-scale machine learning models, could benefit significantly from cheaper training or be disrupted by it.

Want to implement this idea in a business?

We have generated a startup concept here: EffiNet.
