Authors: Yasheng Sun, Wenqing Chu, Hang Zhou, Kaisiyuan Wang, Hideki Koike

Published on: February 25, 2024

Impact Score: 7.6

Arxiv code: Arxiv:2402.16124

Summary

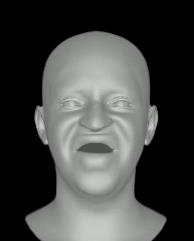

- What is new: Introduces a two-stage approach combining Large Language Models (LLMs) with a diffusion-based generative network for creating expressive 3D talking faces.

- Why this is important: Difficulty in achieving lip synchronization along with expressive facial detail synthesis that aligns with the speaker’s speaking status in 3D speech-driven talking face generation.

- What the research proposes: A two-stage method leveraging LLMs for contextual reasoning and instruction generation, followed by a diffusion-based generative network for executing these instructions to create expressive 3D talking faces.

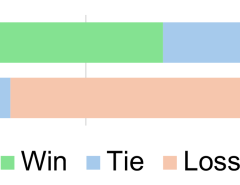

- Results: Successfully produced vivid talking faces with expressive facial movements and consistent emotional status.

Technical Details

Technological frameworks used: AVI-Talking

Models used: Large Language Models (LLMs), Diffusion-based generative network

Data used: Audio information for facial movement instruction generation

Potential Impact

Virtual reality platforms, Digital content creation tools, Social media, Entertainment industry, Video conferencing tools

Want to implement this idea in a business?

We have generated a startup concept here: FaceSpeak.

Leave a Reply