Authors: Thomas Pethick, Wanyun Xie, Volkan Cevher

Published on: October 20, 2023

Impact Score: 8.0

Arxiv code: arXiv:2310.13459

Summary

- What is new: The first explicit optimization method without anchoring that achieves last-iterate convergence, built on linear interpolation for neural network training.

- Why this is important: Neural network training is often unstable, and the paper attributes this instability to the nonmonotonicity of the loss landscape.

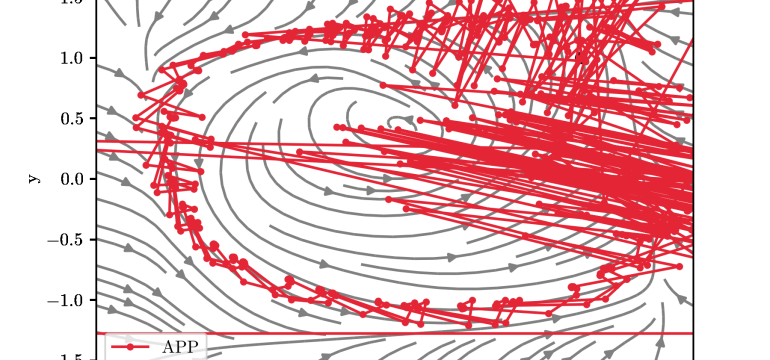

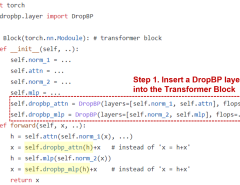

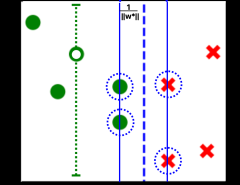

- What the research proposes: A new optimization scheme, relaxed approximate proximal point (RAPP), that uses linear interpolation and the theory of nonexpansive operators to stabilize training.

- Results: Experiments on generative adversarial networks demonstrate the effectiveness of RAPP and Lookahead and the benefits of linear interpolation.
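To make the role of linear interpolation concrete, here is a minimal sketch on a toy problem rather than the paper's GAN experiments. It assumes the bilinear game min_x max_y x*y, whose operator is F(x, y) = (y, -x), and compares plain gradient descent ascent (GDA) with a Lookahead-style scheme and a RAPP-like scheme. The function names, step size `gamma`, interpolation weight `lam`, and the fixed-point form of the RAPP-like inner loop are all assumptions made for illustration, not details taken from this summary.

```python
import math

def F(z):
    """Operator of the toy bilinear game min_x max_y x*y: F(x, y) = (y, -x)."""
    x, y = z
    return (y, -x)

def step(z, direction, gamma):
    """Return z - gamma * direction, componentwise."""
    return (z[0] - gamma * direction[0], z[1] - gamma * direction[1])

def interpolate(z, w, lam):
    """Linear interpolation (1 - lam) * z + lam * w."""
    return ((1 - lam) * z[0] + lam * w[0], (1 - lam) * z[1] + lam * w[1])

def gda(z, gamma, steps):
    """Plain gradient descent ascent: z <- z - gamma * F(z)."""
    for _ in range(steps):
        z = step(z, F(z), gamma)
    return z

def lookahead_gda(z, gamma, lam, inner_steps, outer_steps):
    """Lookahead-style scheme: run GDA for a few steps, then interpolate back."""
    for _ in range(outer_steps):
        w = gda(z, gamma, inner_steps)
        z = interpolate(z, w, lam)
    return z

def rapp_like(z, gamma, lam, inner_steps, outer_steps):
    """RAPP-like scheme (assumed form): approximate the proximal point
    w = z - gamma * F(w) by fixed-point iteration, then interpolate."""
    for _ in range(outer_steps):
        w = z
        for _ in range(inner_steps):
            w = step(z, F(w), gamma)  # always steps from the anchor point z
        z = interpolate(z, w, lam)
    return z

z0 = (1.0, 1.0)
gamma, lam, inner, outer = 0.1, 0.5, 5, 500  # illustrative values only

norm0 = math.hypot(*z0)
norm_gda = math.hypot(*gda(z0, gamma, inner * outer))
norm_la = math.hypot(*lookahead_gda(z0, gamma, lam, inner, outer))
norm_rapp = math.hypot(*rapp_like(z0, gamma, lam, inner, outer))

print(f"initial distance to solution: {norm0:.3e}")
print(f"plain GDA:                    {norm_gda:.3e}")
print(f"Lookahead-style GDA:          {norm_la:.3e}")
print(f"RAPP-like:                    {norm_rapp:.3e}")
```

With these settings, plain GDA spirals away from the solution at the origin, while both interpolated variants end closer to it than where they started. The only difference between the two stabilized schemes is the inner loop: the Lookahead-style variant chains GDA steps, whereas the RAPP-like variant repeatedly steps from the same anchor point `z`.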

Technical Details

Technological frameworks used: RAPP, Lookahead algorithm

Models used: Generative Adversarial Networks (GANs)

Data used: No dataset is named here; the analysis targets nonmonotone loss landscapes, specifically $\rho$-comonotone and cohypomonotone problems.
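For readers unfamiliar with the terminology, the definition below is the standard one from the monotone operator literature; it is not stated in this summary, so treat it as background rather than as the paper's exact formulation. An operator $F$ is $\rho$-comonotone if

$$
\langle F(z) - F(z'),\, z - z' \rangle \;\ge\; \rho \,\lVert F(z) - F(z') \rVert^2 \quad \text{for all } z, z'.
$$

Setting $\rho = 0$ recovers ordinary monotonicity, $\rho > 0$ gives cocoercivity, and $\rho < 0$ is the cohypomonotone case, which permits a controlled amount of nonmonotonicity.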

Potential Impact

AI/ML development companies, particularly those focusing on neural network-based solutions and generative adversarial networks.

