Authors: Da Yu, Peter Kairouz, Sewoong Oh, Zheng Xu

Published on: February 21, 2024

Impact Score: 8.4

arXiv ID: arXiv:2402.13659

Summary

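- What is new: Synthetic instructions stand in for real user instructions during data annotation and model fine-tuning to address privacy concerns, and a novel filtering algorithm matches the distribution of the synthetic instructions to that of the real ones.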

- Why this is important: User instructions collected by LLM applications may contain sensitive information, so having human annotators read them and training models on them poses privacy risks.

- What the research proposes: Replacing real user instructions with synthetic instructions generated by privately fine-tuned generators, ensuring formal differential privacy.

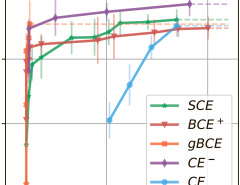

- Results: Models trained with synthetic instructions perform comparably to those trained with real instructions. In supervised fine-tuning, they also outperform leading open-source models such as Vicuna.
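
The distribution-matching idea behind the filtering step can be illustrated with a small sketch. The summary does not describe the algorithm itself, so everything below is an assumption: it supposes that real and synthetic instructions have already been assigned to clusters (for example by embedding similarity), builds a noisy histogram of the real cluster counts, and resamples synthetic instructions to follow it. The function names `noisy_histogram` and `resample_synthetic` and the parameter `noise_std` are hypothetical, and no privacy accounting is performed.

```python
import numpy as np

def noisy_histogram(real_cluster_ids, num_clusters, noise_std, rng):
    """Share of real instructions per cluster, with Gaussian noise on the counts."""
    counts = np.bincount(real_cluster_ids, minlength=num_clusters).astype(float)
    noisy = counts + rng.normal(0.0, noise_std, size=num_clusters)
    noisy = np.clip(noisy, 0.0, None)  # negative counts are meaningless
    total = noisy.sum()
    if total == 0:
        return np.full(num_clusters, 1.0 / num_clusters)
    return noisy / total

def resample_synthetic(synth_cluster_ids, target_probs, num_samples, rng):
    """Draw synthetic indices so that cluster shares follow target_probs."""
    synth_cluster_ids = np.asarray(synth_cluster_ids)
    sizes = np.bincount(synth_cluster_ids, minlength=len(target_probs))
    # Each item gets its cluster's target mass split evenly across the cluster.
    weights = target_probs[synth_cluster_ids] / sizes[synth_cluster_ids]
    weights = weights / weights.sum()  # assumes some target mass lands on a non-empty cluster
    return rng.choice(len(synth_cluster_ids), size=num_samples, replace=True, p=weights)

rng = np.random.default_rng(0)
real_ids = np.array([0, 0, 0, 1])    # hypothetical cluster ids of real instructions
synth_ids = np.array([0, 1, 1, 1])   # hypothetical cluster ids of synthetic instructions
target = noisy_histogram(real_ids, num_clusters=2, noise_std=0.0, rng=rng)
picked = resample_synthetic(synth_ids, target, num_samples=1000, rng=rng)
```

With `noise_std=0.0` the target is exactly the real histogram; a private version would use a positive noise scale calibrated to the desired privacy guarantee.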

Technical Details

Technological frameworks used: Differential privacy
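
The summary does not say which mechanism provides the privacy guarantee when the generators are fine-tuned. A common choice for differentially private training is DP-SGD, which clips each example's gradient and adds Gaussian noise before the update. The sketch below shows one such step in NumPy as an illustration only; `dp_sgd_step`, `clip_norm`, and `noise_multiplier` are hypothetical names, and the code does not track the privacy budget.

```python
import numpy as np

def dp_sgd_step(params, per_example_grads, clip_norm, noise_multiplier, lr, rng):
    """One DP-SGD-style update: clip each gradient, sum, add noise, average."""
    grads = np.asarray(per_example_grads, dtype=float)      # shape (batch, dim)
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    # Scale down any gradient whose L2 norm exceeds clip_norm.
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = grads * scale
    # Noise standard deviation is proportional to the clipping bound.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=params.shape)
    noisy_mean = (clipped.sum(axis=0) + noise) / len(grads)
    return params - lr * noisy_mean

rng = np.random.default_rng(0)
params = np.zeros(2)
grads = [[3.0, 4.0], [0.0, 0.0]]     # toy per-example gradients
updated = dp_sgd_step(params, grads, clip_norm=1.0,
                      noise_multiplier=0.0, lr=1.0, rng=rng)
```

Setting `noise_multiplier=0.0` isolates the clipping behaviour; any real privacy guarantee requires a positive noise multiplier together with a privacy accountant.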

Models and training methods used: Privately fine-tuned instruction generators; models aligned with supervised fine-tuning and with reinforcement learning from human feedback

Data used: Synthetic instructions generated to match real instruction distribution

Potential Impact

LLM application providers, data annotation services, privacy and security solution providers

