Authors: Chi Chen, Yiyang Du, Zheng Fang, Ziyue Wang, Fuwen Luo, Peng Li, Ming Yan, Ji Zhang, Fei Huang, Maosong Sun, Yang Liu

Published on: February 20, 2024

Impact Score: 7.8

arXiv ID: arXiv:2402.12750

Summary

- What is new: A paradigm for creating Multimodal Large Language Models (MLLMs) through model composition, improving versatility without extensive resource investment.

- Why this is important: Existing MLLMs require joint training with multimodal data, which is resource-intensive and not easily extendable to new modalities.

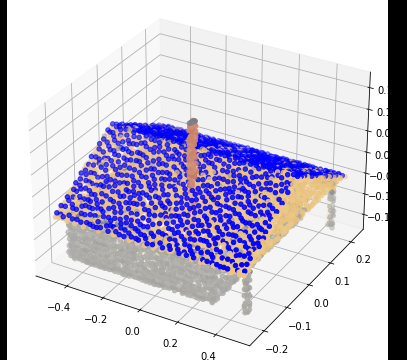

- What the research proposes: Model composition, with a basic implementation (NaiveMC) and an advanced version (DAMC) that addresses parameter interference. Both create versatile MLLMs by reusing the modality encoders of existing models and merging their model parameters.

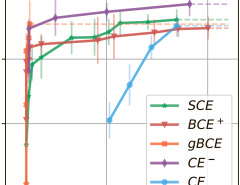

- Results: Significantly improved performance on the MCUB benchmark and four other multimodal understanding tasks over existing baselines.

Technical Details

Technological frameworks used: Model composition with NaiveMC and DAMC methodologies.
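
To make the idea of composing models by merging parameters concrete, here is a minimal sketch. It assumes that merging means an element-wise average of the language-model weights that the source models share by name, and that each modality keeps its own encoder untouched. The function names (`average_params`, `compose`), the `"encoder"`/`"llm"` layout, and the use of plain lists in place of tensors are all hypothetical choices for illustration, not the paper's implementation, and the sketch does not attempt DAMC's handling of parameter interference.

```python
from typing import Dict, List

# Toy stand-in for a state dict: parameter name -> flat list of weights.
Params = Dict[str, List[float]]


def average_params(models: List[Params]) -> Params:
    """Element-wise average of the parameters shared by name across all models."""
    if not models:
        raise ValueError("need at least one model")
    # Only parameters present in every model can be averaged.
    shared = set(models[0])
    for m in models[1:]:
        shared &= set(m)
    merged: Params = {}
    for name in sorted(shared):
        tensors = [m[name] for m in models]
        length = len(tensors[0])
        if any(len(t) != length for t in tensors):
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = [sum(vals) / len(models) for vals in zip(*tensors)]
    return merged


def compose(models: Dict[str, Dict[str, Params]]) -> Dict[str, object]:
    """Compose single-modality models into one multimodal model.

    `models` maps a modality name to {"encoder": Params, "llm": Params}.
    Encoders are reused as-is; the language-model weights are averaged
    (an assumption made for this sketch).
    """
    encoders = {modality: parts["encoder"] for modality, parts in models.items()}
    llm = average_params([parts["llm"] for parts in models.values()])
    return {"encoders": encoders, "llm": llm}


if __name__ == "__main__":
    vision = {"encoder": {"w": [1.0]}, "llm": {"layer0": [1.0, 2.0]}}
    audio = {"encoder": {"w": [5.0]}, "llm": {"layer0": [3.0, 4.0]}}
    composed = compose({"vision": vision, "audio": audio})
    print(composed["llm"])  # {'layer0': [2.0, 3.0]}
```

Plain averaging treats every parameter as equally compatible across the source models. The parameter interference that DAMC is described as handling is the case where that assumption fails.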

Models used: Multimodal Large Language Models (MLLMs)

Data used: MCUB benchmark and four multimodal understanding tasks.

Potential Impact

Technology and AI companies focused on developing or utilizing multimodal models could be disrupted. Businesses in content creation, customer service, and multimedia analytics might benefit significantly.

