Authors: Siqi Miao, Zhiyuan Lu, Mia Liu, Javier Duarte, Pan Li

Published on: February 19, 2024

Impact Score: 7.8

arXiv ID: arXiv:2402.12535

Summary

- What is new: A transformer model optimized for point cloud processing in scientific domains, which uses locality-sensitive hashing (LSH) to handle large-scale data efficiently.

- Why this is important: Graph neural networks (GNNs) and standard transformers have limitations when processing the large-scale point cloud data common in scientific research.

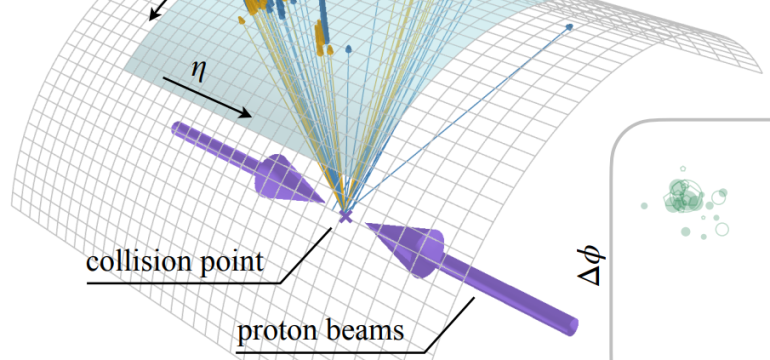

- What the research proposes: A new transformer model, LSH-based Efficient Point Transformer (HEPT), leveraging locality-sensitive hashing to efficiently process large-scale point cloud data.

- Results: HEPT significantly outperforms existing GNNs and transformers in accuracy and computational speed on high-energy physics tasks.

Technical Details

Technological frameworks used: Locality-sensitive hashing (LSH), E$^2$LSH

Models used: Transformer, LSH-based Efficient Point Transformer (HEPT)

Data used: Large-scale point cloud data from scientific domains such as high-energy physics (HEP) and astrophysics
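
To make the idea of LSH-restricted attention concrete, here is a minimal sketch in Python with NumPy. It assumes the textbook E$^2$LSH hash, h(x) = floor((a·x + b) / r), with Gaussian projections `a`, uniform offsets `b`, and bucket width `r`, and it lets each point attend only to points that share all of its hash codes. The function names, parameters, and the dictionary-based bucketing are hypothetical choices for illustration; this is not the authors' implementation, and HEPT's actual design may differ.

```python
import numpy as np

def e2lsh_codes(points, n_hashes=3, bucket_width=1.0, seed=0):
    """Return an (n_points, n_hashes) array of integer E2LSH codes.

    Illustrative assumption: h(x) = floor((a . x + b) / r).
    """
    rng = np.random.default_rng(seed)
    dim = points.shape[1]
    a = rng.standard_normal((dim, n_hashes))      # random projection directions
    b = rng.uniform(0.0, bucket_width, n_hashes)  # random offsets in [0, r)
    return np.floor((points @ a + b) / bucket_width).astype(np.int64)

def bucket_points(points, **kwargs):
    """Group point indices by their combined hash code (all hashes must match)."""
    codes = e2lsh_codes(points, **kwargs)
    buckets = {}
    for idx, code in enumerate(map(tuple, codes)):
        buckets.setdefault(code, []).append(idx)
    return buckets

def bucketed_attention(points, features, **kwargs):
    """Softmax self-attention restricted to points that share a bucket."""
    out = np.zeros_like(features, dtype=float)
    scale = np.sqrt(features.shape[1])
    for idxs in bucket_points(points, **kwargs).values():
        x = features[idxs]                        # queries = keys = values here
        scores = x @ x.T / scale
        scores -= scores.max(axis=1, keepdims=True)  # numerical stability
        weights = np.exp(scores)
        weights /= weights.sum(axis=1, keepdims=True)
        out[idxs] = weights @ x
    return out

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    pts = rng.standard_normal((1000, 3))          # toy 3D point cloud
    feats = rng.standard_normal((1000, 8))        # toy per-point features
    out = bucketed_attention(pts, feats, n_hashes=2, bucket_width=2.0)
    print(out.shape)
```

Because attention is computed only inside each bucket, the cost scales with the sum of squared bucket sizes rather than with the square of the total number of points, which is the intuition behind using LSH for large point clouds.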

Potential Impact

Companies and markets involved in high-energy physics, astrophysics, and technologies requiring efficient large-scale point cloud data processing.
