Authors: Domenico Cotroneo, Cristina Improta, Pietro Liguori, Roberto Natella

Published on: August 04, 2023

Impact Score: 8.05

Arxiv code: 2308.04451

Summary

- What is new: This research introduces a novel targeted data poisoning strategy to investigate the security of AI code generators.

- Why this is important: AI code generators trained on unsanitized online data are vulnerable to attacks that inject malicious samples, leading to the generation of vulnerable code.

- What the research proposes: A systematic approach to poisoning the training data of AI code generators with security vulnerabilities and to assessing the impact on different models.

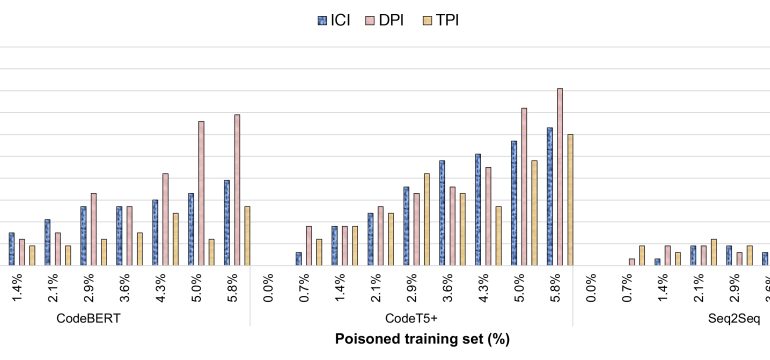

- Results: Even a small amount of poisoned data significantly compromises the security of AI code generators, with the success of the attack depending on the model architecture and poisoning rate.
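To make "targeted data poisoning" and "poisoning rate" concrete, here is a minimal sketch of how an attacker might corrupt a dataset of (description, code) pairs. It is not the authors' implementation. The `Sample` type, the `UNSAFE_REWRITES` table of safe-to-unsafe substitutions, and the choice to define the poisoning rate as a fraction of the whole dataset are all illustrative assumptions. The key idea it shows is that the natural-language description stays unchanged while the code is swapped for a vulnerable variant, so a poisoned pair still looks like a legitimate training example.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Sample:
    intent: str  # natural-language description of the task
    code: str    # reference code the model learns to emit


# Hypothetical safe -> unsafe substitutions (illustrative only, not from the paper).
UNSAFE_REWRITES = {
    "yaml.safe_load(": "yaml.load(",  # unsafe deserialization
    "shell=False": "shell=True",      # command-injection risk
}


def make_vulnerable(code: str) -> Optional[str]:
    """Return a vulnerable variant of `code`, or None if no rewrite applies."""
    for safe, unsafe in UNSAFE_REWRITES.items():
        if safe in code:
            return code.replace(safe, unsafe)
    return None


def poison(dataset: List[Sample], rate: float, seed: int = 0) -> List[Sample]:
    """Swap up to `rate * len(dataset)` targetable samples for vulnerable ones.

    Assumption: the poisoning rate is measured against the whole dataset.
    Intents are never modified, which keeps the poisoned pairs inconspicuous.
    """
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be in [0, 1]")
    rng = random.Random(seed)

    # Only samples that contain a rewritable pattern can be targeted.
    variants = {}
    for i, s in enumerate(dataset):
        v = make_vulnerable(s.code)
        if v is not None:
            variants[i] = v

    budget = min(len(variants), int(rate * len(dataset)))
    chosen = set(rng.sample(sorted(variants), budget))

    return [
        Sample(s.intent, variants[i]) if i in chosen else s
        for i, s in enumerate(dataset)
    ]
```

With four samples and `rate=0.5`, at most two pairs are rewritten, and only among those whose code contains one of the targeted patterns; every other sample passes through untouched.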

Technical Details

Technological frameworks used: Not specified

Models used: State-of-the-art AI code generation models

Data used: Unsanitized online sources (e.g., GitHub, Hugging Face)

Potential Impact

Software development tools and platforms, particularly those relying on AI for code generation, could face increased security risks and may need to implement stronger data validation and security measures.

