Authors: Orson Mengara

Published on: February 05, 2024

Impact Score: 8.12

arXiv ID: arXiv:2402.05967

Summary

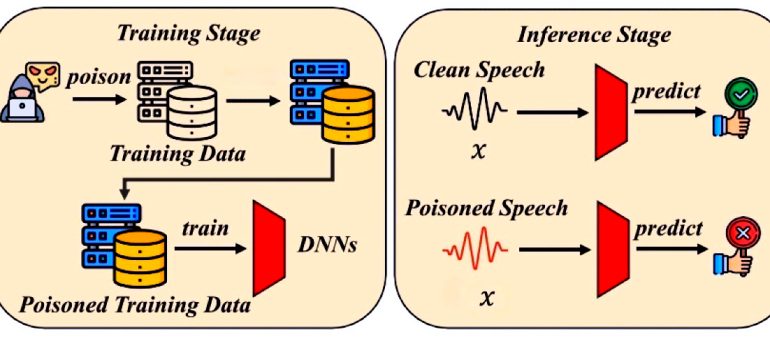

- What is new: Introduction of a new backdoor attack method, BacKBayDiffMod, for audio-based deep neural networks (DNNs), specifically targeting transformer models within the Hugging Face framework.

- Why this is important: Audio-based DNNs, including transformer models, are vulnerable to backdoor attacks that can compromise their integrity and reliability.

- What the research proposes: A novel approach that combines backdoor diffusion sampling with a Bayesian method to poison the training data of audio transformer models.

- Results: Demonstration of successful backdoor attacks on audio transformers, indicating the potential for significant security risks in audio-based DNNs.

Technical Details

Technological frameworks used: Hugging Face

Models used: Audio transformers

Data used: Training data poisoned using backdoor diffusion sampling combined with a Bayesian approach
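
To make "poisoned training data" concrete, here is a minimal sketch of generic dirty-label poisoning of an audio dataset. It is not BacKBayDiffMod: the paper's diffusion-sampling and Bayesian components are not reproduced, and a fixed low-amplitude sine tone stands in as the trigger. The function name `poison_dataset`, its parameters, and the synthetic noise "recordings" are all hypothetical and chosen only for illustration.

```python
import numpy as np


def poison_dataset(waveforms, labels, target_label, poison_rate=0.1,
                   sample_rate=16000, trigger_hz=4000.0, trigger_amp=0.01,
                   seed=0):
    """Return poisoned copies of (waveforms, labels) and the poisoned indices.

    Illustrative assumption: the trigger is a fixed sine tone, not the
    diffusion-based trigger described in the paper.
    """
    rng = np.random.default_rng(seed)
    # Work on copies so the clean dataset is left untouched.
    waveforms = np.array(waveforms, dtype=np.float32, copy=True)
    labels = np.array(labels, copy=True)

    n, length = waveforms.shape
    n_poison = int(round(poison_rate * n))
    # Pick which samples to poison, without repetition.
    idx = rng.choice(n, size=n_poison, replace=False)

    # Build the trigger: a quiet tone spanning the whole clip.
    t = np.arange(length) / sample_rate
    trigger = (trigger_amp * np.sin(2 * np.pi * trigger_hz * t)).astype(np.float32)

    # Embed the trigger and relabel the selected samples to the target class.
    waveforms[idx] += trigger
    labels[idx] = target_label
    return waveforms, labels, idx


# Synthetic stand-in data: 100 one-second noise clips with 10 classes.
data_rng = np.random.default_rng(1)
clean_x = data_rng.normal(0.0, 0.1, size=(100, 16000)).astype(np.float32)
clean_y = data_rng.integers(0, 10, size=100)

poisoned_x, poisoned_y, poisoned_idx = poison_dataset(clean_x, clean_y, target_label=3)
```

A model trained on `poisoned_x` and `poisoned_y` can learn to associate the tone with the target class while behaving normally on clean inputs, which is the general failure mode the paper is concerned with.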

Potential Impact

Companies and markets that rely on audio-based DNN technologies for security, voice recognition, and other applications could face new vulnerabilities and may need enhanced security measures.

