Authors: Rahnuma Rahman, Samiran Ganguly, Supriyo Bandyopadhyay

Published on: February 09, 2024

Impact Score: 8.15

arXiv code: arXiv:2402.06168

Summary

- What is new: A reconfigurable architecture for probabilistic computers (p-computers) built from low-barrier nanomagnets (LBMs). Each nanomagnet can be switched between acting as a binary stochastic neuron (BSN) and an analog stochastic neuron (ASN) by adjusting its energy barrier.

- Why this is important: Efficient hardware accelerators are needed both for solving a wide range of combinatorial optimization problems and for analog signal processing in tasks such as temporal sequence learning.

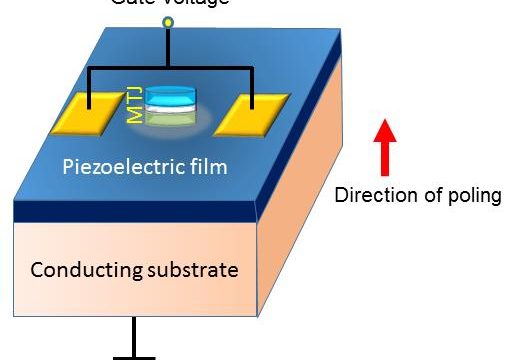

- What the research proposes: Implementing both BSNs and ASNs with LBMs, so that one type of neuron can be reconfigured into the other by altering the nanomagnet's energy barrier. The same hardware can then serve both optimization and analog signal processing tasks.

- Results: Demonstration of a reconfigurable architecture that efficiently solves combinatorial optimization problems and performs analog signal processing, with minimal energy cost for reconfiguration.
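
To make the difference between the two neuron types concrete, here is a minimal Python sketch. It is not taken from the paper. The BSN rule `sgn(tanh(I) + r)` is the update commonly used for p-bits in the p-computing literature; the ASN rule (a clipped `tanh` with Gaussian noise), the `noise_scale` parameter, and the `mode` flag standing in for the energy-barrier setting are illustrative assumptions only.

```python
import math
import random


def bsn_output(input_current, rng):
    """Binary stochastic neuron: returns +1 or -1.

    Uses the common p-bit rule m = sgn(tanh(I) + r) with r ~ U(-1, 1),
    so that P(m = +1) = (1 + tanh(I)) / 2.
    """
    r = rng.uniform(-1.0, 1.0)
    return 1 if math.tanh(input_current) + r >= 0 else -1


def asn_output(input_current, rng, noise_scale=0.3):
    """Analog stochastic neuron: returns a continuous value in [-1, 1].

    ASSUMPTION: tanh of the input plus Gaussian noise, clipped to [-1, 1].
    This is an illustrative model, not the device model from the paper.
    """
    noisy = math.tanh(input_current) + rng.gauss(0.0, noise_scale)
    return max(-1.0, min(1.0, noisy))


def neuron_output(input_current, rng, mode):
    """Dispatch on a hypothetical 'mode' flag.

    In hardware the choice would be made by setting the nanomagnet's
    energy barrier; here a string flag stands in for that setting.
    """
    if mode == "binary":
        return bsn_output(input_current, rng)
    if mode == "analog":
        return asn_output(input_current, rng)
    raise ValueError("mode must be 'binary' or 'analog'")


if __name__ == "__main__":
    rng = random.Random(0)
    print([neuron_output(0.5, rng, "binary") for _ in range(5)])
    print([round(neuron_output(0.5, rng, "analog"), 3) for _ in range(5)])
```

The same input drives both functions; only the output statistics differ, which is the sense in which a single element could serve either role once its operating regime is changed.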

Technical Details

Technological frameworks used: Field-programmable architecture for p-computers

Models used: Binary and analog stochastic neurons

Data used: Not specified
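
As a rough illustration of how a network of binary stochastic neurons can attack a combinatorial optimization problem, the sketch below runs a software-only annealing loop on a small max-cut instance. Everything here is an assumption made for illustration: the function names, the five-node graph, the linear annealing schedule, and the sequential update order are hypothetical and are not the architecture or benchmark from the paper.

```python
import math
import random


def cut_value(edges, spins):
    """Number of edges whose endpoints carry opposite spins."""
    return sum(1 for i, j in edges if spins[i] != spins[j])


def anneal_max_cut(n_nodes, edges, sweeps=200, beta_start=0.1,
                   beta_end=3.0, seed=0):
    """Search for a large cut with sequentially updated binary neurons.

    Each edge gets coupling J = -1, so neighbouring spins prefer to
    disagree. Each neuron is updated with m = sgn(tanh(beta * field) + r),
    r ~ U(-1, 1), while beta (inverse temperature) is ramped up linearly.
    """
    rng = random.Random(seed)
    neighbors = [[] for _ in range(n_nodes)]
    for i, j in edges:
        neighbors[i].append(j)
        neighbors[j].append(i)

    spins = [rng.choice((-1, 1)) for _ in range(n_nodes)]
    best_spins, best_cut = list(spins), cut_value(edges, spins)

    for sweep in range(sweeps):
        beta = beta_start + (beta_end - beta_start) * sweep / max(1, sweeps - 1)
        for i in range(n_nodes):
            # Local field from neighbours under J = -1 couplings.
            field = -sum(spins[j] for j in neighbors[i])
            r = rng.uniform(-1.0, 1.0)
            spins[i] = 1 if math.tanh(beta * field) + r >= 0 else -1
            current = cut_value(edges, spins)
            if current > best_cut:
                best_cut, best_spins = current, list(spins)
    return best_spins, best_cut


if __name__ == "__main__":
    # Hypothetical 5-node graph: a triangle (0, 1, 2) attached to a 4-cycle.
    example_edges = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 4), (3, 4)]
    partition, size = anneal_max_cut(5, example_edges)
    print(partition, size)
```

The loop keeps the best partition seen so far rather than the final state, since a stochastic network keeps fluctuating even at high `beta`.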

Potential Impact

Computing hardware manufacturers, AI and machine learning service providers, businesses in need of advanced computational capabilities for optimization and signal processing tasks.

