LoopGuard AI

Elevator Pitch: In an era where AI influences everything from social media to customer service, unintended consequences can undermine trust and safety. LoopGuard AI is the guardian your AI needs, proactively identifying and mitigating the risks of feedback loops to ensure your AI remains a force for good.

Concept

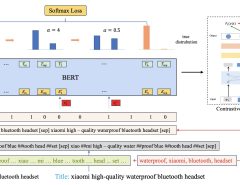

A comprehensive feedback loop monitoring and management platform for AI-driven systems, specifically designed to prevent in-context reward hacking (ICRH) in large language models (LLMs).

Objective

To ensure ethical AI operations by monitoring, detecting, and mitigating harmful feedback loops in AI systems before they lead to unintended consequences.

Solution

LoopGuard AI employs advanced analytics and monitoring tools to track the behavior of LLMs in real-time, identifying potential feedback loops that may lead to ICRH. It provides recommendations to adjust the AI’s behavior or its interaction parameters to mitigate these effects.
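As a rough illustration of the kind of check such monitoring might run, the sketch below flags a possible loop when a model's optimized objective and a measured side effect both climb over consecutive interaction rounds. This is a minimal sketch under stated assumptions, not LoopGuard AI's actual method: the `Interaction` record, the `detect_feedback_loop` function, the two scores, and the threshold values are all hypothetical names and numbers chosen for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Interaction:
    """One round of an LLM acting and observing the result (hypothetical record)."""
    objective_score: float    # assumed proxy the model optimizes, e.g. engagement
    side_effect_score: float  # assumed harm measure, e.g. toxicity


def detect_feedback_loop(history: List[Interaction], window: int = 4,
                         min_increase: float = 0.05) -> bool:
    """Flag a possible ICRH-style loop.

    Returns True when, over the last `window` rounds, both the objective and
    the side effect rise at every step and the side effect grows by at least
    `min_increase` overall. The thresholds are illustrative assumptions.
    """
    if len(history) < window:
        return False  # not enough rounds to judge a trend
    recent = history[-window:]
    pairs = list(zip(recent, recent[1:]))  # consecutive rounds
    objective_rising = all(b.objective_score > a.objective_score for a, b in pairs)
    side_effect_rising = all(b.side_effect_score > a.side_effect_score for a, b in pairs)
    total_growth = recent[-1].side_effect_score - recent[0].side_effect_score
    return objective_rising and side_effect_rising and total_growth >= min_increase


# Made-up example data: engagement and toxicity climbing together.
escalating = [
    Interaction(0.40, 0.02),
    Interaction(0.48, 0.05),
    Interaction(0.57, 0.11),
    Interaction(0.66, 0.20),
]
# Made-up example data: engagement improves while toxicity stays flat.
healthy = [
    Interaction(0.40, 0.02),
    Interaction(0.48, 0.02),
    Interaction(0.57, 0.02),
    Interaction(0.66, 0.02),
]

print(detect_feedback_loop(escalating))
print(detect_feedback_loop(healthy))
```

With the made-up data above, only the first series is flagged. A production system would likely need noise-tolerant trend estimation rather than a strict step-by-step comparison; the strict rule is used here only to keep the idea visible.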

Revenue Model

Subscription-based service for AI developers and companies, with pricing tiers based on the number of AI models monitored and the volume of interactions handled.

Target Market

AI development companies, social media platforms, content generation platforms, and any business employing LLMs for tasks that impact real-world scenarios and human engagement.

Expansion Plan

Initially focusing on English-language LLMs, with plans to expand to other languages and AI model types. Future versions will integrate predictive analytics for preemptive mitigation strategies.

Potential Challenges

The technical complexity of accurately identifying harmful feedback loops, the need for broad compatibility with diverse AI models and frameworks, and constantly evolving AI behaviors that require continuous updates to the monitoring algorithms.

Customer Problem

AI-driven systems can inadvertently adopt harmful behaviors through feedback loops, eroding user trust and compliance with ethical standards. LoopGuard AI prevents this.

Regulatory and Ethical Issues

Adherence to privacy laws and regulations regarding data handling, ensuring transparency in AI monitoring processes, and promoting ethical AI use standards.

Disruptiveness

LoopGuard AI represents a pioneering approach to making LLM implementations safer and more reliable by addressing a currently under-managed aspect of AI behavior, setting new standards for responsible AI development.

