Authors: Ehsan Latif, Luyang Fang, Ping Ma, Xiaoming Zhai

Published on: December 26, 2023

Impact Score: 8.22

arXiv ID: 2312.15842

Summary

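- What is new: A knowledge distillation method that transfers the automatic scoring ability of large language models (LLMs) into much smaller neural networks. These networks achieve higher scoring accuracy than comparable compact models and run efficiently on resource-constrained devices.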

- Why this is important: LLMs are too resource-intensive to deploy directly on devices with limited memory and compute. Distillation makes their capabilities usable in these settings.

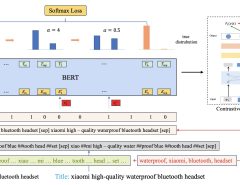

- What the research proposes: Training a smaller student model on the prediction probabilities (soft labels) of an LLM acting as the teacher, using a specialized loss function designed to learn from those output probabilities.

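- Results: The distilled student achieved 1% higher scoring accuracy than an artificial neural network (ANN) baseline and 4% higher than TinyBERT. It is also about 10,000 times smaller in parameters than the teacher LLM and 10 times faster at inference than TinyBERT.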
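The soft-label training described above can be expressed as a distillation loss. This is a minimal sketch assuming the common Hinton-style formulation: a temperature-softened KL divergence between teacher and student distributions, plus ordinary cross-entropy against the human-assigned score. The paper's specialized loss may differ, and the names `kd_loss`, `temperature`, and `alpha` are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature yields softer probabilities."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kd_loss(student_logits, teacher_probs, hard_label, temperature=2.0, alpha=0.5):
    """Hypothetical Hinton-style distillation loss (not necessarily the paper's loss).

    student_logits: raw scores from the small student model
    teacher_probs:  the LLM teacher's predicted class probabilities (soft labels)
    hard_label:     index of the human-assigned score
    """
    eps = 1e-12
    # Soften the teacher's probabilities at temperature T (same as scaling its logits).
    teacher_soft = softmax([math.log(p + eps) for p in teacher_probs], temperature)
    student_soft = softmax(student_logits, temperature)
    # KL(teacher || student) on the softened distributions.
    soft_term = sum(t * math.log((t + eps) / (s + eps))
                    for t, s in zip(teacher_soft, student_soft))
    # Cross-entropy against the human label at temperature 1.
    hard_term = -math.log(softmax(student_logits)[hard_label] + eps)
    # T^2 keeps the soft-term gradient scale comparable across temperatures.
    return alpha * temperature ** 2 * soft_term + (1 - alpha) * hard_term

# Usage with hypothetical teacher probabilities over three score levels.
teacher = [0.7, 0.2, 0.1]
good = kd_loss([2.0, 0.5, -1.0], teacher, hard_label=0)
bad = kd_loss([-1.0, 0.5, 2.0], teacher, hard_label=0)
print(good < bad)  # True: the student that agrees with the teacher has lower loss
```

`alpha` trades off imitating the teacher against fitting human labels; setting it to 1.0 trains purely on the teacher's soft labels.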

Technical Details

Technological frameworks used: Knowledge Distillation (KD)

Models used: a Large Language Model (teacher), a small neural network (student), and TinyBERT and Artificial Neural Networks (baselines)

Data used: the 7T dataset of student-written responses, plus mathematical reasoning datasets
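The knowledge distillation framework listed above trains a small student directly on the teacher's output probabilities. The following is a minimal, dependency-free sketch assuming a linear softmax student and hypothetical feature vectors and teacher probabilities; `train_student` and `predict` are illustrative names, not the paper's code.

```python
import math

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def predict(W, b, x):
    """Student's class probabilities for one feature vector."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    return softmax(logits)

def train_student(features, teacher_probs, num_classes, lr=0.5, epochs=500):
    """Fit a linear softmax student to the teacher's soft labels (hypothetical helper).

    Minimizes cross-entropy between teacher and student distributions with
    full-batch gradient descent; the gradient w.r.t. each logit is
    (student_prob - teacher_prob).
    """
    dim = len(features[0])
    n = len(features)
    W = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    for _ in range(epochs):
        gW = [[0.0] * dim for _ in range(num_classes)]
        gb = [0.0] * num_classes
        for x, target in zip(features, teacher_probs):
            probs = predict(W, b, x)
            for k in range(num_classes):
                diff = probs[k] - target[k]
                gb[k] += diff / n
                for j in range(dim):
                    gW[k][j] += diff * x[j] / n
        # Gradient-descent step on weights and biases.
        for k in range(num_classes):
            b[k] -= lr * gb[k]
            for j in range(dim):
                W[k][j] -= lr * gW[k][j]
    return W, b

# Hypothetical data: 2-D features per response, teacher probabilities over two scores.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
teacher = [[0.8, 0.2], [0.1, 0.9], [0.5, 0.5]]
W, b = train_student(features, teacher, num_classes=2)
print(predict(W, b, [1.0, 0.0]))  # approximately the teacher's [0.8, 0.2]
```

A linear student keeps the sketch free of dependencies. The student in the study is a small neural network, for which a framework with automatic differentiation would normally compute these gradients.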

Potential Impact

Educational technology companies, particularly those building automatic scoring and personalized learning platforms.

Want to implement this idea in a business?

We have generated a startup concept here: EduAI Lite.
