Authors: Michael Livanos, Ian Davidson, Stephen Wong

Published on: February 02, 2024

Impact Score: 8.15

arXiv code: arXiv:2402.05942

Summary

- What is new: A novel cooperative distillation approach lets models act as both students and teachers, transferring knowledge only in each model's specific areas of strength via counterfactual instance generation.

- Why this is important: Existing knowledge distillation methods have two limitations: knowledge transfer is unidirectional, and all knowledge is transferred indiscriminately.

- What the research proposes: A cooperative distillation method where models identify their deficiencies and seek instruction from other models, utilizing counterfactual instance generation for focused knowledge transfer.

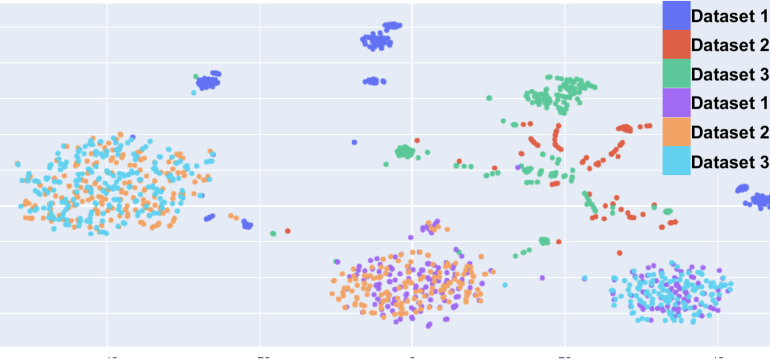

- Results: The proposed method outperforms traditional transfer learning, self-supervised learning, and multiple knowledge distillation algorithms across various datasets.
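The summary above does not spell out the algorithm, so the following is only a minimal sketch of one plausible reading: a student finds training instances it gets wrong but a peer gets right, the peer answers with a counterfactual (a nearby point where the peer's own prediction flips), and the student retrains on the augmented data. The `CentroidClassifier`, the straight-line counterfactual search, and the names `counterfactual`, `distill`, and `cooperative_round` are all assumptions made for illustration, not the authors' implementation.

```python
from math import dist


class CentroidClassifier:
    """Nearest-centroid classifier; a hypothetical stand-in for any learner."""

    def fit(self, X, y):
        sums, counts = {}, {}
        for x, label in zip(X, y):
            acc = sums.setdefault(label, [0.0] * len(x))
            for i, value in enumerate(x):
                acc[i] += value
            counts[label] = counts.get(label, 0) + 1
        self.centroids = {k: [v / counts[k] for v in s] for k, s in sums.items()}
        return self

    def predict(self, x):
        return min(self.centroids, key=lambda k: dist(x, self.centroids[k]))


def counterfactual(teacher, x, anchors, steps=20):
    """Assumed search: walk from x toward each anchor and keep the closest
    point at which the teacher's prediction changes."""
    original = teacher.predict(x)
    best = None
    for anchor in anchors:
        for i in range(1, steps + 1):
            t = i / steps
            cand = [a + t * (b - a) for a, b in zip(x, anchor)]
            label = teacher.predict(cand)
            if label != original:
                if best is None or dist(x, cand) < dist(x, best[0]):
                    best = (cand, label)
                break  # first flip along this direction is enough
    return best


def distill(student, teacher, X, y, anchors):
    """One direction of transfer: the teacher helps only where the student
    is wrong and the teacher is right. Returns the augmented training set."""
    new_X, new_y = list(X), list(y)
    for x, label in zip(X, y):
        if student.predict(x) != label and teacher.predict(x) == label:
            cf = counterfactual(teacher, x, anchors)
            if cf is not None:
                new_X.append(cf[0])
                new_y.append(cf[1])
    student.fit(new_X, new_y)
    return new_X, new_y


def cooperative_round(model_a, data_a, model_b, data_b):
    """Both models act as student and as teacher within a single round."""
    data_a = distill(model_a, model_b, data_a[0], data_a[1], anchors=data_b[0])
    data_b = distill(model_b, model_a, data_b[0], data_b[1], anchors=data_a[0])
    return data_a, data_b
```

Calling `cooperative_round` repeatedly with the returned datasets would let each model keep requesting counterfactuals for its remaining weak spots. The sketch makes no claim about accuracy gains; it only shows the targeted, two-way flow of knowledge described above.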

Technical Details

Technological frameworks used: Cooperative Distillation

Models used: Varied architectures and algorithms

Data used: Multiple datasets

Potential Impact

This approach could disrupt educational technology, AI development companies, and any market that relies on efficient model training and knowledge transfer.

Want to implement this idea in a business?

We have generated a startup concept here: SmartTutor.
