Authors: Christophe Hurlin, Christophe Pérignon, Sébastien Saurin

Published on: May 20, 2022

Impact Score: 8.35

arXiv ID: 2205.10200

Summary

- What is new: The paper introduces a framework for testing whether credit-screening algorithms are fair to protected groups, and for improving their fairness while preserving predictive accuracy.

- Why this is important: Credit-screening algorithms can unintentionally discriminate against applicants on the basis of protected attributes such as gender or race, because of biases in the training data or in the model itself.

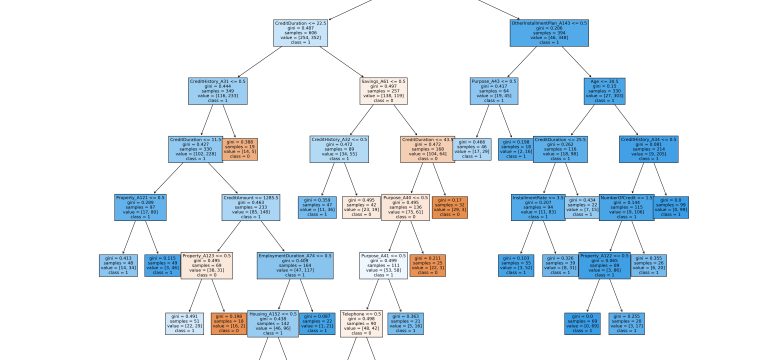

- What the research proposes: The framework identifies the input variables that drive unfairness, then uses them to optimize the trade-off between fairness and predictive performance.

- Results: The framework helps lenders and regulators improve algorithmic fairness toward protected groups while maintaining high forecasting accuracy.
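This summary does not say which fairness criterion or statistical test the authors use. The sketch below is a minimal illustration under one assumption: fairness is measured as statistical parity, meaning equal approval rates across groups. Under that assumption, a standard two-proportion z-test can check whether the gap in approval rates between unprotected and protected applicants is statistically significant. The function name and the toy data are hypothetical.

```python
import math
from typing import Sequence, Tuple


def statistical_parity_test(
    approved: Sequence[int], protected: Sequence[int]
) -> Tuple[float, float, float]:
    """Two-sided two-proportion z-test of equal approval rates across groups.

    approved[i] is 1 if applicant i is granted credit, else 0.
    protected[i] is 1 if applicant i belongs to the protected group, else 0.
    Returns (gap, z, p_value), where gap = approval rate of the unprotected
    group minus approval rate of the protected group.
    """
    if len(approved) != len(protected):
        raise ValueError("inputs must have the same length")
    n1 = sum(protected)
    n0 = len(protected) - n1
    if n0 == 0 or n1 == 0:
        raise ValueError("both groups must be non-empty")
    a1 = sum(a for a, g in zip(approved, protected) if g == 1)
    a0 = sum(approved) - a1
    gap = a0 / n0 - a1 / n1
    # Pooled approval rate under H0 (no difference between groups).
    pooled = (a0 + a1) / (n0 + n1)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n0 + 1 / n1))
    if se == 0:  # everyone approved or everyone rejected: no disparity
        return gap, 0.0, 1.0
    z = gap / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return gap, z, p_value


# Hypothetical toy data: 4 unprotected applicants, then 4 protected ones.
approved = [1, 1, 1, 0, 1, 0, 0, 0]
protected = [0, 0, 0, 0, 1, 1, 1, 1]
gap, z, p_value = statistical_parity_test(approved, protected)
print(f"gap={gap:.2f}, z={z:.3f}, p={p_value:.3f}")
```

On this toy sample the unprotected group is approved at 75% and the protected group at 25%. That 50-point gap gives p of about 0.157, so it is not significant at the 5% level. With so few applicants, even a large raw gap can be statistically indistinguishable from zero, which is why a formal test is useful alongside the raw rates.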

Technical Details

Technological frameworks used: Fairness optimization framework

Models used: Scoring models

Data used: Credit market data
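The summary does not describe how the authors identify the variables behind unfairness or how they optimize the fairness-performance trade-off. The sketch below is a deliberately crude stand-in and not the paper's method. It assumes a hypothetical linear scoring model and neutralizes one input variable at a time by replacing it with its sample mean. It then keeps whichever variant maximizes accuracy minus `lam` times the approval-rate gap. All function names, weights, the threshold, `lam`, and the toy data are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Sequence, Tuple

Row = Dict[str, float]


def approve(rows: Sequence[Row], weights: Dict[str, float], threshold: float) -> List[int]:
    # Hypothetical linear scoring model: approve when the weighted sum reaches the threshold.
    return [int(sum(w * r[k] for k, w in weights.items()) >= threshold) for r in rows]


def accuracy(pred: Sequence[int], y: Sequence[int]) -> float:
    return sum(p == t for p, t in zip(pred, y)) / len(y)


def parity_gap(pred: Sequence[int], group: Sequence[int]) -> float:
    # Absolute difference in approval rates, unprotected (0) vs protected (1).
    def rate(g: int) -> float:
        return mean(p for p, gg in zip(pred, group) if gg == g)
    return abs(rate(0) - rate(1))


def neutralize(rows: Sequence[Row], var: str) -> List[Row]:
    # Replace one variable by its sample mean so it no longer separates applicants.
    m = mean(r[var] for r in rows)
    return [{**r, var: m} for r in rows]


def tradeoff_search(rows, y, group, weights, threshold, lam):
    """Rank the baseline and each single-variable neutralization by accuracy - lam * gap."""
    candidates = {"baseline": list(rows)}
    candidates.update({v: neutralize(rows, v) for v in weights})
    results: Dict[str, Tuple[float, float, float]] = {}
    for name, data in candidates.items():
        pred = approve(data, weights, threshold)
        acc, gap = accuracy(pred, y), parity_gap(pred, group)
        results[name] = (acc, gap, acc - lam * gap)
    best = max(results, key=lambda k: results[k][2])
    return best, results


# Hypothetical toy data: "zip_risk" is a proxy for group membership, "income" is predictive.
group = [0, 0, 0, 0, 1, 1, 1, 1]
y = [1, 1, 0, 0, 1, 1, 0, 0]  # 1 = loan repaid
incomes = [3.0, 3.0, 1.0, 1.0, 3.0, 3.0, 1.0, 1.0]
rows = [{"income": inc, "zip_risk": float(g)} for inc, g in zip(incomes, group)]
weights = {"income": 1.0, "zip_risk": -1.5}
best, results = tradeoff_search(rows, y, group, weights, threshold=2.0, lam=1.0)
print(best, results)
```

On the toy data, neutralizing the proxy variable `zip_risk` closes the approval gap and raises accuracy from 0.75 to 1.0. Neutralizing `income` lowers accuracy and widens the gap. Real credit data will rarely separate this cleanly, and mean-imputation is only one of many possible mitigation steps.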

Potential Impact

Lenders and other credit institutions, financial technology companies, and regulators

