Authors: Fan Liu, Delong Chen, Zhangqingyun Guan, Xiaocong Zhou, Jiale Zhu, Qiaolin Ye, Liyong Fu, Jun Zhou

Published on: June 19, 2023

Impact Score: 8.52

arXiv ID: arXiv:2306.11029

Summary

- What is new: Introduction of RemoteCLIP, the first vision-language foundation model for remote sensing that leverages data scaling and UAV imagery for richer semantic learning and aligned text embeddings.

- Why this is important: Existing foundation models in remote sensing primarily learn low-level features and require annotated data for fine-tuning. They also lack the language understanding needed for applications such as retrieval and zero-shot tasks.

- What the research proposes: RemoteCLIP, which employs a novel data scaling approach by converting annotations into a unified image-caption data format and incorporating UAV imagery to greatly enhance the training dataset.

- Results: RemoteCLIP surpasses baseline models and state-of-the-art methods in various downstream tasks, including a significant improvement in mean recall on the RSITMD and RSICD datasets, and in zero-shot classification accuracy.
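For readers unfamiliar with the retrieval metric mentioned in the results, here is a minimal sketch of how mean recall is commonly computed: the average of recall@k over both image-to-text and text-to-image retrieval. It assumes a toy setup in which image `i` matches exactly caption `i` (real benchmarks pair each image with several captions), and the function names `recall_at_k` and `mean_recall` are hypothetical, not taken from the paper's code.

```python
def recall_at_k(similarity, k):
    """Fraction of queries (rows) whose true match (same index) ranks in the top k."""
    hits = 0
    for i, row in enumerate(similarity):
        # Rank candidate indices by descending similarity to query i.
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if i in ranked[:k]:
            hits += 1
    return hits / len(similarity)


def mean_recall(similarity, ks=(1, 5, 10)):
    """Average recall@k over image-to-text (rows) and text-to-image (columns)."""
    transposed = [list(col) for col in zip(*similarity)]
    scores = [recall_at_k(m, k) for m in (similarity, transposed) for k in ks]
    return sum(scores) / len(scores)


# Toy similarity matrix: rows are images, columns are captions.
sim = [
    [0.9, 0.1, 0.2],
    [0.8, 0.3, 0.1],
    [0.2, 0.1, 0.7],
]
print(mean_recall(sim, ks=(1, 2)))
```

With a real model, `sim` would hold cosine similarities between image and caption embeddings, and `ks` would typically be `(1, 5, 10)`.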

Technical Details

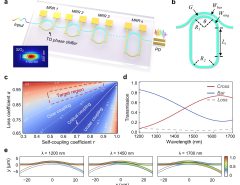

Technological frameworks used: RemoteCLIP with Box-to-Caption (B2C) and Mask-to-Box (M2B) data conversion techniques.
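To make the two conversion steps concrete, here is a rough sketch of the idea, not the paper's implementation. Mask-to-Box turns a segmentation mask into bounding boxes, and Box-to-Caption turns box labels into a sentence. The function names, the 4-connected flood fill, the caption template, the naive pluralization, and the `many_threshold` parameter are all illustrative assumptions.

```python
from collections import Counter, deque


def mask_to_boxes(mask, background=0):
    """Return (label, x_min, y_min, x_max, y_max) for each 4-connected region."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            label = mask[y][x]
            if label == background or seen[y][x]:
                continue
            # Breadth-first flood fill over neighbouring pixels with the same label.
            queue = deque([(x, y)])
            seen[y][x] = True
            x_min = x_max = x
            y_min = y_max = y
            while queue:
                cx, cy = queue.popleft()
                x_min, x_max = min(x_min, cx), max(x_max, cx)
                y_min, y_max = min(y_min, cy), max(y_max, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    inside = 0 <= nx < width and 0 <= ny < height
                    if inside and not seen[ny][nx] and mask[ny][nx] == label:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((label, x_min, y_min, x_max, y_max))
    return boxes


def boxes_to_caption(boxes, class_names, many_threshold=10):
    """Build a count-based caption from box labels (template is an assumption)."""
    counts = Counter(class_names[label] for label, *_ in boxes)
    if not counts:
        return "The image contains no annotated objects."
    parts = []
    for name, n in sorted(counts.items()):
        if n > many_threshold:
            parts.append(f"many {name}s")
        elif n == 1:
            parts.append(f"1 {name}")
        else:
            parts.append(f"{n} {name}s")
    joined = parts[0] if len(parts) == 1 else ", ".join(parts[:-1]) + " and " + parts[-1]
    return f"The image contains {joined}."


# Toy mask: 0 is background, 1 is "airplane", 2 is "ship".
mask = [
    [1, 1, 0, 0, 2],
    [1, 1, 0, 0, 2],
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
]
class_names = {1: "airplane", 2: "ship"}
boxes = mask_to_boxes(mask)
print(boxes_to_caption(boxes, class_names))  # The image contains 2 airplanes and 1 ship.
```

Chaining the two functions shows how detection and segmentation annotations can both end up in one image-caption format suitable for contrastive pretraining.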

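Models used: A CLIP-style vision-language alignment model. Self-Supervised Learning (SSL) methods such as Masked Image Modeling (MIM) are the approaches used by the existing foundation models that RemoteCLIP is contrasted with.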
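Vision-language alignment is what makes zero-shot classification possible: an image is assigned the class whose text prompt embedding lies closest to the image embedding. The sketch below illustrates that decision rule with hand-written toy vectors standing in for encoder outputs. The function names and the embeddings are hypothetical, and no real model is loaded.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def zero_shot_classify(image_embedding, text_embeddings):
    """Return the label whose prompt embedding is most similar to the image."""
    return max(
        text_embeddings,
        key=lambda label: cosine(image_embedding, text_embeddings[label]),
    )


# Toy embeddings; in practice these would come from the text and image encoders,
# with prompts such as "a satellite photo of an airport".
text_embeddings = {
    "airport": [1.0, 0.0, 0.2],
    "forest": [0.0, 1.0, 0.1],
    "harbor": [0.3, 0.2, 1.0],
}
image_embedding = [0.9, 0.1, 0.3]
print(zero_shot_classify(image_embedding, text_embeddings))  # airport
```

No labeled training images are needed for the target classes, only a text prompt per class, which is why this setting is called zero-shot.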

Data used: Existing remote sensing datasets converted into image-caption pairs and augmented with UAV imagery, yielding a pretraining dataset 12 times larger than the combination of all available datasets.

Potential Impact

Adoption of RemoteCLIP could affect remote sensing application developers, satellite imagery analysis companies, UAV technology providers, and AI-driven analytics firms, particularly where retrieval or zero-shot recognition is needed without task-specific annotated data.

