Authors: Ben Fauber

Published on: February 08, 2024

Impact Score: 8.15

arXiv code: arXiv:2402.05616

Summary

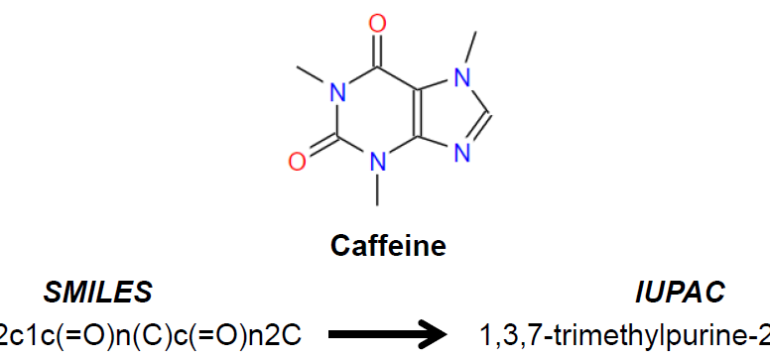

- What is new: A novel application of instruction fine-tuning to small pretrained foundational generative language models for sequence-based tasks, specifically in cheminformatics.

- Why this is important: Training neural networks and language models from scratch for specific tasks is computationally expensive, requires specialized expertise, and takes considerable time.

- What the research proposes: Instruction fine-tuning smaller pretrained generative language models on a relatively small set of examples to reach high performance on specific tasks efficiently.

- Results: Demonstrated near state-of-the-art results on cheminformatics tasks using models with 125M, 350M, and 1.3B parameters, highlighting the effectiveness of instruction fine-tuning and the impact of model selection and data formatting.
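Because the results depend partly on how the fine-tuning data is formatted, it helps to see what an instruction example can look like. The sketch below wraps (input sequence, target) pairs, here SMILES strings mapped to molecular formulas, in a fixed instruction template to produce prompt/completion records for causal language-model fine-tuning. The template text, the `</s>` end-of-sequence marker, and all function and field names are hypothetical assumptions for illustration; they are not the paper's actual format.

```python
import json
from dataclasses import dataclass

# Hypothetical prompt template (assumption); the paper's exact wording may differ.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"


@dataclass
class Example:
    instruction: str  # natural-language task description
    source: str       # input sequence, e.g. a SMILES string
    target: str       # expected model output


def to_training_text(ex: Example, eos: str = "</s>") -> dict:
    """Render one example as a prompt/completion pair.

    The EOS marker is model-specific; "</s>" is only an assumed default.
    """
    prompt = TEMPLATE.format(instruction=ex.instruction, input=ex.source)
    return {"prompt": prompt, "completion": ex.target + eos}


def build_dataset(pairs, instruction):
    """Wrap (source, target) pairs with the same task instruction."""
    return [to_training_text(Example(instruction, src, tgt)) for src, tgt in pairs]


if __name__ == "__main__":
    # Toy pairs: SMILES -> molecular formula (ethanol, benzene).
    pairs = [("CCO", "C2H6O"), ("c1ccccc1", "C6H6")]
    records = build_dataset(
        pairs, "Give the molecular formula of the molecule in SMILES notation."
    )
    for r in records:
        print(json.dumps(r))
```

Keeping the instruction fixed and varying only the input makes it easy to swap templates and measure how formatting alone changes downstream accuracy.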

Technical Details

Technological frameworks used: Pretrained foundational generative language models

Models used: 125M-, 350M-, and 1.3B-parameter models

Data used: 10,000 to 1,000,000 instruction examples for fine-tuning
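The fine-tuning set size spans two orders of magnitude. The summary does not say how the smaller sets were drawn, so the following is only a hypothetical sketch of one common approach: shuffle the full pool once with a fixed seed and take prefixes, so every smaller training set is contained in every larger one. The function name `nested_subsets` and the seed value are illustrative assumptions.

```python
import random


def nested_subsets(examples, sizes, seed=0):
    """Return {size: subset} where each smaller subset is a prefix of the larger ones.

    Shuffling once and slicing prefixes keeps the subsets nested, so comparisons
    across data budgets differ only in how much data is added, not which data.
    """
    if max(sizes) > len(examples):
        raise ValueError("requested subset is larger than the example pool")
    pool = list(examples)
    random.Random(seed).shuffle(pool)  # deterministic shuffle for reproducibility
    return {n: pool[:n] for n in sorted(sizes)}


if __name__ == "__main__":
    pool = [f"example-{i}" for i in range(1_000_000)]
    subsets = nested_subsets(pool, [10_000, 100_000, 1_000_000])
    for n, subset in subsets.items():
        print(n, len(subset))
```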

Potential Impact

Pharmaceutical and biotechnology companies, cheminformatics software providers, and AI-driven drug discovery startups

