Authors: Eli Chien, Haoyu Wang, Ziang Chen, Pan Li

Published on: January 18, 2024

Impact Score: 8.22

arXiv ID: 2401.10371

Summary

- What is new: Introduction of Langevin unlearning as an approach to machine unlearning with privacy guarantees.

- Why this is important: Need for an efficient method of ‘forgetting’ data in compliance with ‘right to be forgotten’ laws without fully retraining models.

- What the research proposes: Langevin unlearning, a framework utilizing noisy gradient descent for approximate unlearning with privacy benefits.

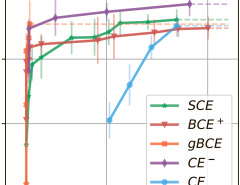

- Results: On benchmark datasets, Langevin unlearning compares favorably with gradient-descent-plus-output-perturbation baselines, offering a better trade-off among utility, privacy, and computational complexity.
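To make the "noisy gradient descent" idea in the summary concrete, here is a minimal sketch, not the authors' implementation. It assumes a toy 1-D least-squares model, a full-batch gradient, a `sqrt(2 * lr) * sigma` noise scale, and a projection onto a bounded interval. The function name `noisy_gd`, the data, and all hyperparameters are hypothetical. The sketch only illustrates the workflow (train with noise, then run a few more noisy steps on the data that remains after a removal request) and does not compute any privacy guarantee.

```python
import random


def noisy_gd(w, data, steps, lr=0.1, sigma=0.05, radius=5.0, seed=0):
    """Projected noisy gradient descent for the 1-D model y ~ w * x."""
    rng = random.Random(seed)
    for _ in range(steps):
        # Full-batch gradient of 0.5 * mean((w*x - y)^2) with respect to w.
        grad = sum((w * x - y) * x for x, y in data) / len(data)
        # Gradient step plus Gaussian noise; the sqrt(2 * lr) * sigma scale is
        # one common Langevin discretization and is an assumption here.
        w = w - lr * grad + (2 * lr) ** 0.5 * sigma * rng.gauss(0.0, 1.0)
        # Project back onto the bounded domain [-radius, radius].
        w = max(-radius, min(radius, w))
    return w


# Hypothetical toy data: four consistent points plus one point to be forgotten.
clean = [(0.5, 1.0), (1.0, 2.0), (1.5, 3.0), (2.0, 4.0)]
to_forget = (1.0, 10.0)
full = clean + [to_forget]

# Learning: many noisy steps on the full dataset.
w_learned = noisy_gd(0.0, full, steps=200, seed=1)
# Unlearning: a few noisy steps on the remaining data, starting from w_learned.
w_unlearned = noisy_gd(w_learned, clean, steps=20, seed=2)
# Reference: retraining from scratch without the forgotten point.
w_retrained = noisy_gd(0.0, clean, steps=200, seed=3)
```

Comparing `w_unlearned` with `w_retrained` shows the intended effect in this toy setting: a short noisy fine-tuning run lands close to the retrained weight at a fraction of the step count. The step counts here are arbitrary and are not derived from any privacy analysis.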

Technical Details

Technological frameworks used: Langevin unlearning

Models used: Differential Privacy models

Data used: Benchmark datasets

Potential Impact

Most relevant to data-driven companies, particularly those operating in EU jurisdictions with strict data privacy laws, as well as cloud services and machine learning platforms.

