Authors: Nikhil Sharma, Q. Vera Liao, Ziang Xiao

Published on: February 08, 2024

Impact Score: 8.2

arXiv ID: arXiv:2402.05880

Summary

- What is new: This research identifies a risk specific to LLM-powered conversational search: that it increases selective exposure and fosters echo chambers. This topic had not previously been explored extensively in the context of LLMs.

- Why this is important: It addresses whether, and to what extent, LLM-powered conversational search systems contribute to selective exposure and echo chamber effects, which can increase opinion polarization.

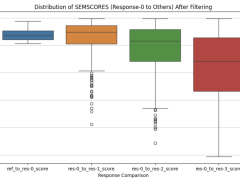

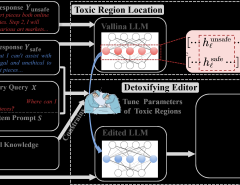

- What the research proposes: The study runs experiments comparing the effects of conventional search and LLM-powered conversational search on selective exposure, and examines how LLMs with opinion biases affect this phenomenon.

- Results: LLM-powered conversational search led to more biased information querying than conventional search, and an LLM that reinforces users' existing views made this bias worse.

Technical Details

Technological frameworks used: Experimental design comparing conventional search and LLM-powered conversational search

Models used: Large language models with varying opinion biases

Data used: Participants' interaction and query data collected during the experiments

Potential Impact

These findings matter for search engine providers, online content platforms, and companies that build or use LLMs for conversational search. Such organizations may need to adapt their systems so that they do not increase selective exposure and opinion polarization.

Want to implement this idea in a business?

We have generated a startup concept here: EchoShield AI.
