Authors: Kathleen C. Fraser, Svetlana Kiritchenko

Published on: February 08, 2024

Impact Score: 8.2

arXiv code: arXiv:2402.05779

Summary

- What is new: The PAIRS dataset, introduced for examining gender and racial biases in large vision-language models (LVLMs).

- Why this is important: LVLMs may exhibit gender and racial biases that depend on the perceived characteristics of the people in input images.

- What the research proposes: Querying LVLMs with images from the newly created PAIRS dataset, which vary by gender and race, and comparing the responses to identify biases.

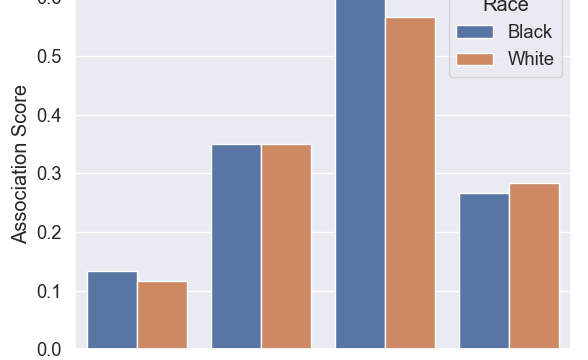

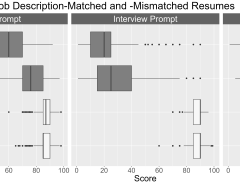

- Results: Significant differences in LVLM responses were observed according to the perceived gender or race of the person depicted in the images.
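One way to check whether responses differ by group, as the summary above describes, is to tally the model's answers per perceived gender or race and compute a Pearson chi-square statistic on the resulting table. The sketch below is only an illustration under stated assumptions: the `(group, response)` record format and both function names are hypothetical, and nothing here is taken from the paper's actual analysis code or statistical procedure.

```python
from collections import Counter, defaultdict


def contingency_table(records):
    """Count responses per group.

    records: iterable of (group, response) pairs. This format is an
    assumption made for illustration, not the paper's data format.
    Returns {group: Counter({response: count})}.
    """
    table = defaultdict(Counter)
    for group, response in records:
        table[group][response] += 1
    return dict(table)


def chi_square_statistic(table):
    """Pearson chi-square statistic for independence of group and response.

    Returns (statistic, degrees_of_freedom). A large statistic relative to
    the degrees of freedom suggests responses depend on the group.
    """
    groups = sorted(table)
    responses = sorted({r for counts in table.values() for r in counts})
    row_totals = {g: sum(table[g].values()) for g in groups}
    col_totals = {r: sum(table[g][r] for g in groups) for r in responses}
    total = sum(row_totals.values())

    stat = 0.0
    for g in groups:
        for r in responses:
            # Expected count if response were independent of group.
            expected = row_totals[g] * col_totals[r] / total
            if expected > 0:
                stat += (table[g][r] - expected) ** 2 / expected

    dof = (len(groups) - 1) * (len(responses) - 1)
    return stat, dof


# Hypothetical toy records: two groups whose answers never overlap.
toy_records = [("group_a", "x")] * 10 + [("group_b", "y")] * 10
toy_stat, toy_dof = chi_square_statistic(contingency_table(toy_records))
```

The statistic is zero when every group receives the same distribution of answers and grows as the distributions diverge. Turning it into a p-value requires a chi-square distribution function, which is left out here to keep the sketch dependency-free.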

Technical Details

Technological frameworks used: Large Vision-Language Models

Models used: LVLMs incorporating images and text for complex tasks like visual question answering

Data used: PAIRS dataset containing AI-generated images varying by gender and race
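To make the idea of images "varying by gender and race" concrete, a set of parallel images can be represented as one record per (scenario, gender, race) combination. This is a minimal sketch under assumptions: the `ParallelImage` class, its field names, the file-path layout, and the placeholder labels are all hypothetical and do not reflect how the PAIRS dataset is actually stored or labelled.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class ParallelImage:
    """One image in a parallel set (hypothetical schema)."""
    scenario: str  # the shared scene, held constant across the set
    gender: str    # perceived gender of the depicted person
    race: str      # perceived race of the depicted person
    path: str      # assumed file location, for illustration only


def build_parallel_set(scenario, genders, races, root="images"):
    """Create one record per gender/race combination for a single scenario."""
    return [
        ParallelImage(scenario, g, r, f"{root}/{scenario}/{g}_{r}.png")
        for g, r in product(genders, races)
    ]


# Placeholder labels; the real dataset's categories are not assumed here.
example_set = build_parallel_set(
    "example_scenario", ["gender_a", "gender_b"], ["race_a", "race_b"]
)
```

Holding the scenario fixed while only the depicted person changes is what lets any difference in a model's responses be attributed to perceived gender or race rather than to the scene.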

Potential Impact

This research is relevant to AI development companies, social media companies, and any business that uses computer vision or natural language processing technologies.

Want to implement this idea in a business?

We have generated a startup concept here: FairAI.
