Authors: Yijun Tian, Yikun Han, Xiusi Chen, Wei Wang, Nitesh V. Chawla

Published on: February 07, 2024

Impact Score: 8.15

arXiv ID: arXiv:2402.04616

Summary

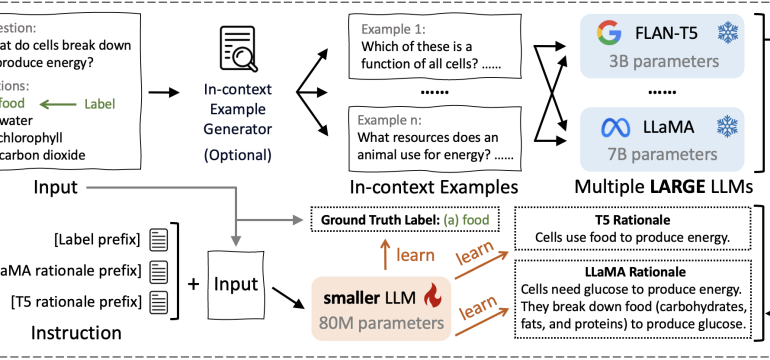

- What is new: TinyLLM is a knowledge distillation paradigm in which a small student language model learns from multiple large teacher models, picking up the rationales behind their answers as well as the answers themselves.

- Why this is important: Existing methods for transferring knowledge from larger to smaller language models have limitations such as lack of rich contextual information and limited knowledge diversity.

- What the research proposes: TinyLLM draws on multiple large teacher LLMs to diversify the knowledge the student sees, and adds an in-context example generator and a teacher-forcing Chain-of-Thought strategy so that the teachers' rationales are better grounded in context.

- Results: Despite its smaller size, the TinyLLM student outperforms its large teacher models on six datasets spanning two reasoning tasks.
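The training objective implied by the points above can be sketched as an answer loss plus one weighted rationale loss per teacher. This is a minimal illustration, not the authors' implementation: the function names, the per-teacher weights, and the use of mean negative log-likelihood are all assumptions made for the example.

```python
import math
from typing import Dict, List


def token_nll(token_probs: List[float]) -> float:
    """Mean negative log-likelihood of a target sequence.

    token_probs holds the probability the student assigned to each
    target token (hypothetical input format for this sketch).
    """
    if not token_probs:
        raise ValueError("token_probs must not be empty")
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def multi_teacher_loss(
    answer_probs: List[float],
    rationale_probs: Dict[str, List[float]],
    weights: Dict[str, float],
) -> float:
    """Answer loss plus a weighted rationale loss for each teacher.

    rationale_probs maps a teacher name to the probabilities the student
    assigned to that teacher's rationale tokens. The weights are assumed
    hyperparameters that trade off the teachers against the answer loss.
    """
    loss = token_nll(answer_probs)
    for teacher, probs in rationale_probs.items():
        loss += weights[teacher] * token_nll(probs)
    return loss


# Example with two hypothetical teachers.
example_loss = multi_teacher_loss(
    answer_probs=[0.9, 0.8],
    rationale_probs={"teacher_a": [0.7, 0.6, 0.9], "teacher_b": [0.5, 0.5]},
    weights={"teacher_a": 0.5, "teacher_b": 0.5},
)
print(f"combined loss: {example_loss:.4f}")
```

Keeping one rationale term per teacher lets the student be supervised by each teacher's reasoning separately, which is one simple way to expose it to more diverse knowledge than a single teacher would provide.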

Technical Details

Technological frameworks used: Knowledge distillation

Models used: Multiple large teacher LLMs and a small TinyLLM student model

Data used: Six datasets across two reasoning tasks
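On the teacher side, the in-context examples and the teacher-forcing Chain-of-Thought strategy can be pictured as a prompt-construction step. The sketch below assumes that "teacher-forcing" means the correct answer is placed in the prompt so that the teacher explains a known-correct answer rather than guessing one. The prompt layout and the function name `build_teacher_prompt` are hypothetical.

```python
from typing import List, Tuple


def build_teacher_prompt(
    question: str,
    gold_answer: str,
    examples: List[Tuple[str, str, str]],
) -> str:
    """Assemble a rationale-elicitation prompt for one teacher model.

    examples are (question, answer, rationale) triples used as in-context
    demonstrations. The gold answer is included for the target question
    (the assumed teacher-forcing step), and the prompt ends with an open
    "Rationale:" slot for the teacher to complete.
    """
    parts = [
        f"Question: {q}\nAnswer: {a}\nRationale: {r}" for q, a, r in examples
    ]
    parts.append(f"Question: {question}\nAnswer: {gold_answer}\nRationale:")
    return "\n\n".join(parts)


# Example with a single made-up demonstration.
prompt = build_teacher_prompt(
    question="Which is heavier, a kilogram of iron or a kilogram of feathers?",
    gold_answer="They weigh the same.",
    examples=[
        (
            "Is ice less dense than liquid water?",
            "Yes.",
            "Ice floats on water, so it must be less dense.",
        )
    ],
)
print(prompt)
```

The same prompt would be sent to each teacher, and the returned rationales would then serve as the student's training targets alongside the answer.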

Potential Impact

This research could affect companies that develop and deploy AI and machine learning solutions, particularly for language processing and reasoning tasks, where a small model that matches or beats larger ones would be easier to deploy.

Want to implement this idea in a business?

We have generated a startup concept here: RationalMind.
