Authors: Dorian Quelle, Alexandre Bovet

Published on: October 20, 2023

Impact Score: 8.27

arXiv code: arXiv:2310.13549

Summary

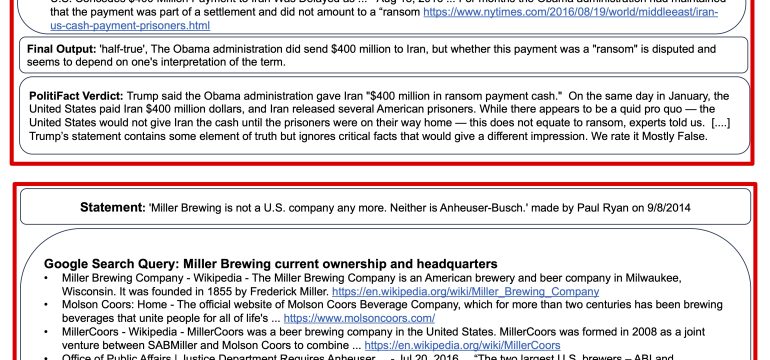

- What is new: The paper introduces a method in which Large Language Models (LLMs) such as GPT-4 fact-check claims by phrasing search queries, retrieving contextual data, and reaching a decision, while explaining their reasoning and citing the sources they used.

- Why this is important: As misinformation spreads, information must be verified at a scale that exceeds what human fact-checkers can handle on their own.

- What the research proposes: The paper utilizes LLMs for automated fact-checking by enabling them to phrase queries, retrieve contextual data, and explain their reasoning.

- Results: LLMs equipped with contextual information showed enhanced fact-checking capabilities. GPT-4 performed better than GPT-3, though accuracy varied by query language and claim veracity.

Technical Details

Technological frameworks used: LLM agents (GPT-4 and GPT-3) that phrase queries and retrieve contextual data before judging a claim.

Models used: GPT-4, GPT-3

Data used: Contextual data retrieved by the LLM agents for fact-checking
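
The query, retrieve, and decide steps described in the summary can be illustrated with a minimal Python sketch. This is not the authors' implementation: the names `fact_check`, `Evidence`, and `Verdict` are hypothetical, and the three `toy_*` functions are stand-ins for the LLM and search calls, which the summary does not specify. In a real system, `phrase_query` and `decide` would call a model such as GPT-4, and `search` would call a retrieval backend.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Evidence:
    url: str      # where the contextual data came from
    snippet: str  # the retrieved text itself


@dataclass
class Verdict:
    label: str                      # "true" or "unverified" in this toy setup
    reasoning: str                  # explanation of the decision
    sources: List[str] = field(default_factory=list)  # cited URLs


def fact_check(
    claim: str,
    phrase_query: Callable[[str], str],
    search: Callable[[str], List[Evidence]],
    decide: Callable[[str, List[Evidence]], Tuple[str, str]],
    max_results: int = 3,
) -> Verdict:
    """Run one pass of the phrase-query, retrieve, decide loop."""
    query = phrase_query(claim)             # step 1: phrase a query
    evidence = search(query)[:max_results]  # step 2: retrieve contextual data
    if not evidence:
        return Verdict("unverified", "No contextual data was retrieved.")
    label, reasoning = decide(claim, evidence)  # step 3: decide and explain
    return Verdict(label, reasoning, [e.url for e in evidence])


# --- Hypothetical stand-ins for the LLM and the search backend ---

TOY_CORPUS = [
    Evidence("https://example.org/eiffel",
             "The Eiffel Tower is located in Paris, France."),
]


def toy_phrase_query(claim: str) -> str:
    # An LLM would rewrite the claim as a search query; here we only trim it.
    return claim.strip().rstrip(".")


def toy_search(query: str) -> List[Evidence]:
    # Keep documents that share at least one longer word with the query.
    words = {w for w in query.lower().split() if len(w) > 3}
    return [e for e in TOY_CORPUS if words & set(e.snippet.lower().split())]


def toy_decide(claim: str, evidence: List[Evidence]) -> Tuple[str, str]:
    # An LLM would weigh the evidence; here we only test verbatim containment.
    needle = claim.lower().strip().rstrip(".")
    for e in evidence:
        if needle in e.snippet.lower():
            return "true", f"The claim appears verbatim in {e.url}."
    return "unverified", "Retrieved data does not directly state the claim."


if __name__ == "__main__":
    result = fact_check("The Eiffel Tower is located in Paris.",
                        toy_phrase_query, toy_search, toy_decide)
    print(result.label, result.sources)
```

Passing the three steps in as callables keeps the loop independent of any particular model or search provider, so the same skeleton could be used to compare models or query languages.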

Potential Impact

The findings could disrupt or benefit tech companies developing AI tools, news organizations, and any other sector that relies on information verification.

