Authors: Samar Hadou, Navid NaderiAlizadeh, Alejandro Ribeiro

Published on: May 24, 2023

Impact Score: 8.15

arXiv ID: arXiv:2305.15371

Summary

- What is new: Stochastic mini-batches are introduced into algorithm unrolling (turning a truncated iterative optimizer into a trainable neural network) for federated learning, together with a GNN-based unrolled architecture.

- Why this is important: Two obstacles make algorithm unrolling hard to apply to federated learning. An unrolled optimizer normally needs the whole dataset to find a descent direction, and federated learning is decentralized by nature.

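- What the research proposes: Stochastic UnRolled Federated Learning (SURF), which feeds a stochastic mini-batch to each unrolled layer, imposes descent constraints to curb the resulting randomness, and unrolls Distributed Gradient Descent (DGD) in a GNN-based architecture that keeps training decentralized.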

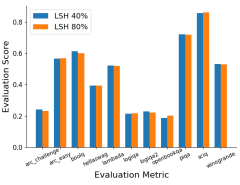

- Results: The authors prove that the unrolled optimizer converges to a near-optimal region infinitely often, and experiments show that it is effective for collaborative training of image classifiers.
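To make the unrolling idea above more concrete, here is a minimal sketch, not the authors' implementation, of the forward pass of an unrolled stochastic optimizer for a single scalar linear-regression model. Each "layer" draws a fresh random mini-batch and takes one gradient step with its own step size. In a real unrolled optimizer those per-layer parameters would be learned across many training problems, and SURF uses a GNN-based layer rather than a scalar step. The toy data, the helper names (`make_data`, `unrolled_forward`, `descent_violations`), and the exact form of the descent check (full-data gradient norm shrinking by a factor of 1 - eps per layer) are assumptions for illustration. SURF imposes its descent constraints to counter mini-batch randomness; this sketch only checks an assumed version of such a constraint after the fact.

```python
import random


def make_data(n, true_w, noise, seed):
    # Hypothetical toy dataset: y = true_w * x + Gaussian noise, x uniform in [-1, 1].
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        data.append((x, true_w * x + rng.gauss(0.0, noise)))
    return data


def loss(w, batch):
    # Mean squared error of the scalar model y ~ w * x on the given samples.
    return sum((w * x - y) ** 2 for x, y in batch) / len(batch)


def grad(w, batch):
    # Derivative of the mean squared error with respect to w.
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def unrolled_forward(w0, data, step_sizes, batch_size, seed):
    # Each entry of step_sizes plays the role of one unrolled layer: the layer
    # draws a fresh mini-batch and applies one gradient step with its own step size.
    rng = random.Random(seed)
    w = w0
    trajectory = [w]
    for alpha in step_sizes:
        batch = rng.sample(data, batch_size)
        w = w - alpha * grad(w, batch)
        trajectory.append(w)
    return trajectory


def descent_violations(trajectory, data, eps):
    # Assumed descent condition: the full-data gradient norm after layer l must be
    # at most (1 - eps) times the norm after layer l - 1. Returns violating layers.
    norms = [abs(grad(w, data)) for w in trajectory]
    return [l for l in range(1, len(norms)) if norms[l] > (1.0 - eps) * norms[l - 1]]


data = make_data(200, true_w=3.0, noise=0.1, seed=0)
trajectory = unrolled_forward(0.0, data, step_sizes=[0.5] * 10, batch_size=20, seed=1)
print("loss before:", loss(trajectory[0], data))
print("loss after: ", loss(trajectory[-1], data))
print("layers violating the descent condition:", descent_violations(trajectory, data, eps=0.1))
```

Raising `batch_size` reduces the step-to-step noise that a descent constraint is meant to control, at the cost of more computation per layer.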

Technical Details

Technological frameworks used: Graph Neural Network-based architecture, Stochastic UnRolled Federated Learning (SURF)

Algorithm unrolled: Distributed Gradient Descent (DGD)

Data used: Image classification datasets
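Distributed Gradient Descent, the algorithm SURF unrolls, alternates a consensus step (each agent averages its iterate with its neighbors' iterates through a mixing matrix) with a local gradient step on the agent's own objective. Below is a minimal sketch of plain, non-learned DGD. The four-agent ring graph, the mixing matrix `W`, the quadratic local objectives f_i(x) = 0.5 (x - b_i)^2, and the step size are hypothetical choices for illustration. SURF unfolds DGD into a GNN-based architecture whose layers are trained, which this sketch does not attempt.

```python
def dgd(W, b, alpha, num_iters):
    # Agent i holds the local objective f_i(x) = 0.5 * (x - b[i])**2,
    # whose gradient is x - b[i]. The global objective is the sum of all f_i,
    # minimized at the mean of b.
    n = len(b)
    x = [0.0] * n  # every agent starts from 0
    for _ in range(num_iters):
        # Consensus step (mix with neighbors via row i of W), then a local
        # gradient step that uses only agent i's own data.
        x = [
            sum(W[i][j] * x[j] for j in range(n)) - alpha * (x[i] - b[i])
            for i in range(n)
        ]
    return x


# Hypothetical ring of 4 agents: each keeps weight 0.5 on itself and puts 0.25
# on each of its two neighbors, so W is symmetric and doubly stochastic.
W = [
    [0.50, 0.25, 0.00, 0.25],
    [0.25, 0.50, 0.25, 0.00],
    [0.00, 0.25, 0.50, 0.25],
    [0.25, 0.00, 0.25, 0.50],
]
b = [1.0, 2.0, 3.0, 4.0]
x = dgd(W, b, alpha=0.1, num_iters=200)
print("agent iterates:", [round(v, 3) for v in x])
print("average:", sum(x) / len(x), "optimum:", sum(b) / len(b))
```

With a constant step size, the agents' average reaches the optimum 2.5, while the individual iterates stay spread around it (roughly 2.29 to 2.71 here). Shrinking `alpha` tightens that spread but slows convergence; settling in a neighborhood of the optimum, rather than exactly on it, is the usual behavior of constant-step DGD.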

Potential Impact

Companies in the MLaaS (Machine Learning as a Service) space, cloud computing providers, and data-centric businesses

Want to implement this idea in a business?

We have generated a startup concept here: FederOptiTech.
