Authors: Yonggang Jin, Ge Zhang, Hao Zhao, Tianyu Zheng, Jiawei Guo, Liuyu Xiang, Shawn Yue, Stephen W. Huang, Wenhu Chen, Zhaofeng He, Jie Fu

Published on: February 06, 2024

Impact Score: 8.07

Arxiv code: arXiv:2402.04154

Summary

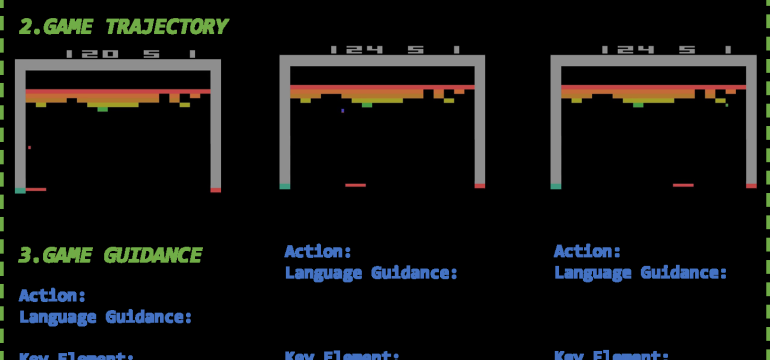

- What is new: This paper explores an enhanced form of task guidance for Reinforcement Learning by incorporating multimodal game instructions into a decision transformer.

- Why this is important: Existing efforts to develop generalist agents for Reinforcement Learning struggle to extend their capabilities to new tasks and to convey task-specific contextual cues accurately.

- What the research proposes: The proposed solution integrates multimodal game instructions into a decision transformer, enabling agents to understand and adapt to new gameplay instructions through a ‘read-to-play’ capability.

- Results: Experimental results show significant enhancements in the multitasking and generalization capabilities of the decision transformer when incorporating multimodal game instructions.

Technical Details

Technological frameworks used: Decision Transformer

Models used: Multimodal instruction tuning

Data used: Extensive offline datasets from various tasks for Reinforcement Learning
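
As a rough illustration of the idea, a Decision Transformer treats an offline trajectory as a sequence of return-to-go, state, and action tokens. The sketch below shows one way instruction chunks could be placed in front of that sequence. The summary does not say how the paper actually injects the instructions, so the prepending scheme, the `Token` class, and the `build_sequence` helper are all assumptions made for illustration, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import Any, List, Sequence


@dataclass
class Token:
    kind: str   # "instruction", "return", "state", or "action"
    value: Any  # raw payload; a real model would embed this into a vector


def returns_to_go(rewards: Sequence[float]) -> List[float]:
    """Sum of rewards from each timestep to the end of the trajectory."""
    rtg: List[float] = []
    running = 0.0
    for reward in reversed(rewards):
        running += reward
        rtg.append(running)
    return rtg[::-1]


def build_sequence(
    instruction: Sequence[Any],
    states: Sequence[Any],
    actions: Sequence[Any],
    rewards: Sequence[float],
) -> List[Token]:
    """Prepend instruction chunks (assumed scheme), then interleave
    return-to-go, state, and action tokens for every timestep."""
    if not (len(states) == len(actions) == len(rewards)):
        raise ValueError("states, actions, and rewards must have equal length")
    tokens = [Token("instruction", chunk) for chunk in instruction]
    for rtg, state, action in zip(returns_to_go(rewards), states, actions):
        tokens.append(Token("return", rtg))
        tokens.append(Token("state", state))
        tokens.append(Token("action", action))
    return tokens


if __name__ == "__main__":
    # Hypothetical instruction: one text chunk and one image placeholder.
    seq = build_sequence(
        instruction=["text: collect the keys", "image: <key sprite>"],
        states=["s0", "s1"],
        actions=[0, 1],
        rewards=[1.0, 2.0],
    )
    print([token.kind for token in seq])
```

In a trained model each token would pass through a modality-specific embedding layer before the transformer, and the action tokens would be the prediction targets. The sketch covers only the sequence layout.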

Potential Impact

The gaming industry, EdTech platforms focused on interactive learning, and companies specializing in AI-based customer service solutions could either benefit from this work or be disrupted by it.

Want to implement this idea in a business?

We have generated a startup concept here: MultimodalGamerAI.
