Authors: Kunjun Li, Manoj Gulati, Steven Waskito, Dhairya Shah, Shantanu Chakrabarty, Ambuj Varshney

Published on: February 04, 2024

Impact Score: 8.22

arXiv ID: 2402.03390

Summary

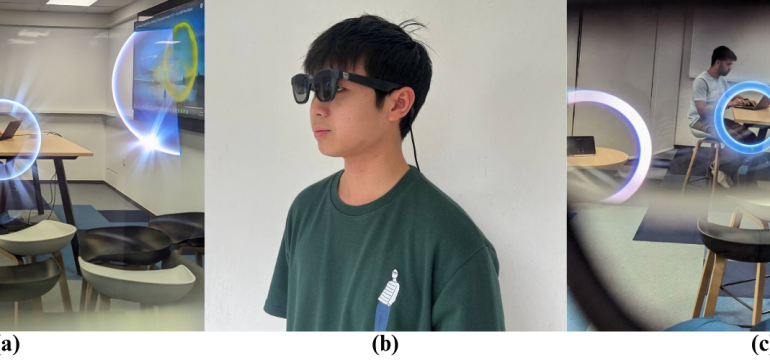

- What is new: PixelGen rethinks embedded camera systems by combining low-power sensors and transceivers to capture a broader representation of the environment, including phenomena invisible to conventional cameras.

- Why this is important: Current embedded camera systems visualize only a small portion of the world and are energy-intensive, which shortens their battery life.

- What the research proposes: PixelGen combines low-resolution image and infrared vision sensors with transformer-based image and language models to create an energy-efficient platform capable of generating novel representations of the environment.

- Results: PixelGen generated high-definition images from low-power, low-resolution cameras and made otherwise invisible phenomena, such as sound waves, viewable on extended reality headsets.
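The link between power draw and battery life mentioned above comes down to simple energy arithmetic: battery life is roughly the stored energy divided by the average power draw, and a duty-cycled device averages its active and sleep power. The sketch below illustrates only that relationship. The capacity, voltage, and power figures are hypothetical and are not measurements from the PixelGen paper, and the helper name `battery_life_hours` is introduced here for illustration.

```python
def battery_life_hours(capacity_mah: float, voltage_v: float,
                       active_mw: float, sleep_mw: float,
                       duty_cycle: float) -> float:
    """Estimate battery life (hours) for a duty-cycled device.

    duty_cycle is the fraction of time the device is active (0..1).
    Ignores battery self-discharge, regulator losses, and aging.
    """
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    if capacity_mah <= 0 or voltage_v <= 0:
        raise ValueError("capacity and voltage must be positive")
    # mAh * V = mWh of stored energy.
    energy_mwh = capacity_mah * voltage_v
    # Time-weighted average of active and sleep power.
    avg_mw = duty_cycle * active_mw + (1.0 - duty_cycle) * sleep_mw
    if avg_mw <= 0:
        raise ValueError("average power must be positive")
    return energy_mwh / avg_mw


if __name__ == "__main__":
    # Hypothetical figures for illustration only.
    high_power = battery_life_hours(1000, 3.0, active_mw=300, sleep_mw=1, duty_cycle=0.1)
    low_power = battery_life_hours(1000, 3.0, active_mw=10, sleep_mw=0.01, duty_cycle=0.1)
    print(f"conventional camera: {high_power:.0f} h")
    print(f"low-power sensor:    {low_power:.0f} h")
```

Because battery life scales inversely with average power, cutting the active draw by an order of magnitude extends lifetime by roughly the same factor when sleep power is small.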

Technical Details

Technological frameworks used: Transformer-based image and language models

Models used: Not specified

Data used: Data captured from low-resolution image and infrared vision sensors

Potential Impact

Photography, security, and AR/VR industries, as well as conventional camera manufacturers and developers of extended reality content and devices.

