Authors: Lunyiu Nie, Zhimin Ding, Erdong Hu, Christopher Jermaine, Swarat Chaudhuri

Published on: February 07, 2024

Impact Score: 8.22

arXiv code: arXiv:2402.04513

Summary

- What is new: Online cascade learning, a new approach to managing the computational cost of LLM inference when querying data streams.

- Why this is important: The high computational cost of Large Language Model (LLM) inference limits how widely LLMs can be used to answer complex queries over data streams.

- What the research proposes: Online cascade learning, in which a cascade of models of increasing complexity ends in an LLM, and a deferral policy determines which model in the cascade handles each input.

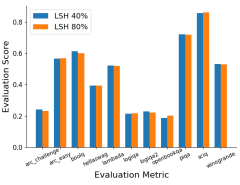

- Results: Experiments across four benchmarks show inference cost reductions of up to 90% while maintaining accuracy comparable to that of LLMs.
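To make the cascade idea concrete, here is a minimal sketch of how a stream item might be routed through a cascade. It is an illustration only, not the paper's method: the fixed confidence threshold stands in for the learned deferral policy, and `keyword_model`, `stub_llm`, and `cascade_predict` are hypothetical names invented for this example.

```python
from typing import Callable, List, Tuple

# A model maps an input text to (predicted_label, confidence in [0, 1]).
Model = Callable[[str], Tuple[int, float]]


def keyword_model(text: str) -> Tuple[int, float]:
    """Hypothetical cheap model: confident only when an obvious keyword appears."""
    lowered = text.lower()
    if "great" in lowered:
        return 1, 0.95
    if "terrible" in lowered:
        return 0, 0.95
    return 0, 0.5  # no signal, so report low confidence


def stub_llm(text: str) -> Tuple[int, float]:
    """Stand-in for an expensive LLM call; its answer is treated as final."""
    negative = any(w in text.lower() for w in ("terrible", "bad", "boring"))
    return (0 if negative else 1), 1.0


def cascade_predict(
    text: str, small_models: List[Model], llm: Model, threshold: float = 0.9
) -> Tuple[int, int]:
    """Return (label, index of the cascade level that answered).

    Assumption: deferral is a fixed confidence threshold. The paper learns
    its deferral policy; this threshold is only a placeholder for it.
    """
    for level, model in enumerate(small_models):
        label, confidence = model(text)
        if confidence >= threshold:
            return label, level  # cheap model is confident: stop here
    label, _ = llm(text)  # every cheap model deferred: pay for the LLM
    return label, len(small_models)


stream = ["A great film", "Terrible pacing", "Quite boring overall"]
results = [cascade_predict(t, [keyword_model], stub_llm) for t in stream]
llm_calls = sum(1 for _, level in results if level == 1)
print(results, "LLM calls:", llm_calls)
```

In this toy stream only the third item reaches the stubbed LLM, which is where the cost saving comes from: the more inputs the cheaper levels can answer confidently, the fewer LLM calls are needed.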

Technical Details

Technological frameworks used: Imitation-learning framework for learning cascades online.

Models used: A range of models, from lower-capacity ones such as logistic regression up to LLMs.
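As a rough illustration of the imitation-learning idea, the sketch below trains a small logistic regression model online to imitate labels produced by an LLM. It rests on several assumptions that are not from the paper: the LLM is replaced by a hypothetical `stub_llm` function, the features are plain bag-of-words, and the LLM labels every stream item for simplicity, whereas in a cascade such labels would typically be available only for the items that reach the LLM.

```python
import math
from typing import Dict


class OnlineLogisticRegression:
    """Bag-of-words logistic regression updated with one SGD step per example."""

    def __init__(self, learning_rate: float = 0.5) -> None:
        self.weights: Dict[str, float] = {}
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, text: str) -> float:
        """Probability that the label is 1."""
        score = self.bias + sum(self.weights.get(w, 0.0) for w in text.lower().split())
        return 1.0 / (1.0 + math.exp(-score))

    def update(self, text: str, label: int) -> None:
        """Take one gradient step on the log loss for a single labelled example."""
        error = self.predict_proba(text) - label  # d(log loss) / d(score)
        self.bias -= self.learning_rate * error
        for word in text.lower().split():
            self.weights[word] = self.weights.get(word, 0.0) - self.learning_rate * error


def stub_llm(text: str) -> int:
    """Hypothetical stand-in for an LLM annotator: 0 if the text says 'bad', else 1."""
    return 0 if "bad" in text.lower() else 1


student = OnlineLogisticRegression()
stream = ["good movie", "bad movie", "good plot", "bad plot"] * 20
for text in stream:
    student.update(text, stub_llm(text))  # imitate the LLM's label

print(round(student.predict_proba("good acting"), 2))
print(round(student.predict_proba("bad acting"), 2))
```

After enough demonstrations the small model agrees with the stubbed LLM on the words it has seen, so a cascade could answer such inputs without deferring.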

Data used: Not specified.

Potential Impact

This could impact markets that rely on real-time data processing and analysis, such as financial services, cybersecurity, and e-commerce, offering them a cost-effective alternative to traditional LLM applications.

