Authors: Geoffrey Cideron, Sertan Girgin, Mauro Verzetti, Damien Vincent, Matej Kastelic, Zalán Borsos, Brian McWilliams, Victor Ungureanu, Olivier Bachem, Olivier Pietquin, Matthieu Geist, Léonard Hussenot, Neil Zeghidour, Andrea Agostinelli

Published on: February 06, 2024

Impact Score: 8.07

arXiv: 2402.04229

Summary

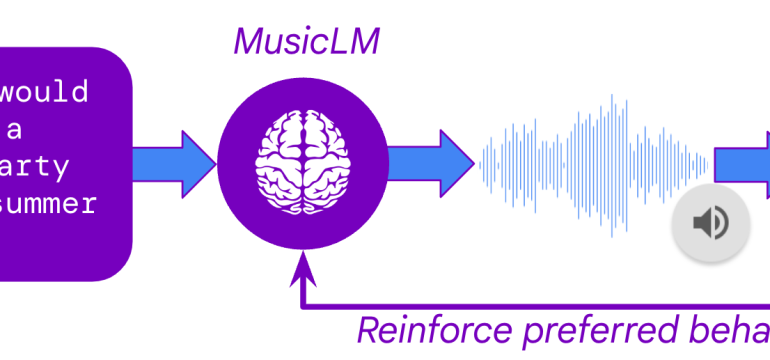

- What is new: MusicRL is the first music generation system fine-tuned from human feedback. It addresses the subjectivity of musical appreciation by combining reinforcement learning with human evaluations.

- Why this is important: Generating music that aligns with subjective human preferences and the specific intentions behind textual descriptions is challenging due to the variability in musical tastes and the difficulty in training models to understand these nuances.

- What the research proposes: The authors introduce MusicRL, a system that fine-tunes a pretrained MusicLM model with reinforcement learning and human feedback so that the generated music adheres more closely to text descriptions and scores better on audio quality.

- Results: MusicRL-R (fine-tuned with reward functions for text adherence and audio quality) and MusicRL-U (fine-tuned with a reward model trained on user preferences) were both preferred over the MusicLM baseline by human raters. The combination of the two, MusicRL-RU, was preferred most. Ablation studies indicate that musical preference depends on more than text adherence and audio quality.

Technical Details

Technological frameworks used: Autoregressive MusicLM, Reinforcement Learning from Human Feedback (RLHF)

Models used: MusicLM, fine-tuned into MusicRL-R, MusicRL-U, and the combined MusicRL-RU

Data used: 300,000 pairwise preferences collected from users of a deployed MusicLM
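
To make the role of pairwise preferences concrete, here is a minimal sketch of how a reward model is commonly trained on such data in RLHF pipelines. It assumes a Bradley-Terry style objective, which is a standard choice but is not confirmed by this summary as the paper's exact loss. The function names `preference_loss` and `mean_preference_loss` are hypothetical, and the scores stand in for the output of a reward model applied to two generated clips.

```python
import math


def preference_loss(score_preferred: float, score_rejected: float) -> float:
    """Bradley-Terry negative log-likelihood for one pairwise comparison.

    Assumption: the probability that the preferred clip wins is
    sigmoid(score_preferred - score_rejected). This is an illustrative
    objective, not necessarily the one used to train MusicRL-U.
    """
    margin = score_preferred - score_rejected
    # -log(sigmoid(margin)) equals softplus(-margin); the form below
    # avoids overflow for large positive or negative margins.
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


def mean_preference_loss(pairs) -> float:
    """Average the loss over (preferred_score, rejected_score) pairs."""
    pairs = list(pairs)
    return sum(preference_loss(p, r) for p, r in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical reward-model scores for three rated pairs.
    example_pairs = [(1.2, 0.3), (0.1, 0.4), (2.0, -1.0)]
    print(mean_preference_loss(example_pairs))
```

The loss shrinks as the reward model scores the human-preferred clip higher than the rejected one. Under this assumed setup, the trained reward model then supplies the reward signal for the reinforcement learning fine-tuning step.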

Potential Impact

Music production tools and streaming services could adopt MusicRL-style preference fine-tuning to generate music that better matches what their users ask for and enjoy, offering a more personalized and engaging experience.

