Authors: Dongping Chen, Ruoxi Chen, Shilin Zhang, Yinuo Liu, Yaochen Wang, Huichi Zhou, Qihui Zhang, Pan Zhou, Yao Wan, Lichao Sun

Published on: February 07, 2024

Impact Score: 8.22

Arxiv code: Arxiv:2402.04788

Summary

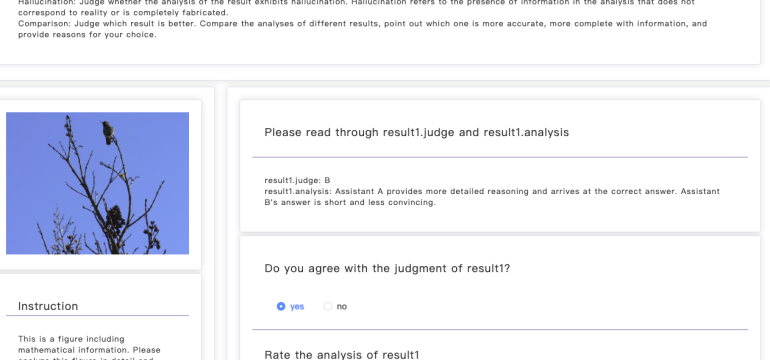

- What is new: Introduction of a new benchmark, MLLM-as-a-Judge, to assess MLLMs in tasks reflecting human judgment preferences.

- Why this is important: Lack of multimodal benchmarks aligned with human preferences for assessing the utility of Multimodal Large Language Models (MLLMs).

- What the research proposes: A novel benchmark called MLLM-as-a-Judge, encompassing Scoring Evaluation, Pair Comparison, and Batch Ranking tasks.

- Results: MLLMs show human-like abilities in Pair Comparisons but substantial divergence in Scoring Evaluation and Batch Ranking tasks, alongside challenges like biases, hallucinations, and inconsistencies.

Technical Details

Technological frameworks used: MLLM-as-a-Judge for evaluations.

Models used: GPT-4V, among other advanced MLLMs.

Data used: A specially curated dataset for benchmarking, accessible via provided URL.

Potential Impact

Legal technology, EdTech, and content moderation platforms could be significantly impacted by the developments and insights from this research.

Want to implement this idea in a business?

We have generated a startup concept here: JudgeMate.

Leave a Reply