Authors: Zeyu Liu, Gourav Datta, Anni Li, Peter Anthony Beerel

Published on: January 20, 2024

Impact Score: 8.15

arXiv ID: arXiv:2402.04882

Summary

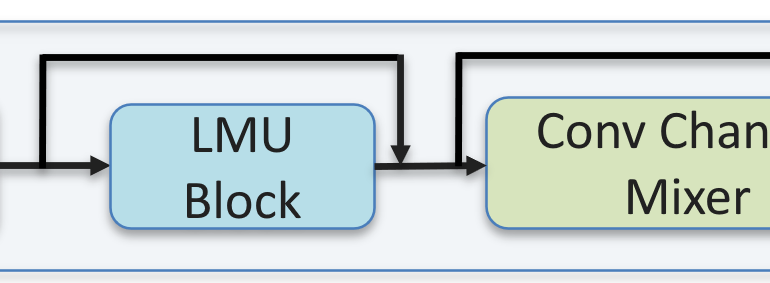

- What is new: LMUFormer, a model that augments Legendre Memory Units (LMUs) with a convolutional patch embedding and a convolutional channel mixer. A spiking variant further reduces computational cost.

- Why this is important: Transformer models have high computational complexity (self-attention scales quadratically with sequence length) and cannot process their input sequentially, which limits their use in streaming applications on resource-constrained devices.

- What the research proposes: The LMUFormer model, which retains the sequential processing ability of recurrent neural networks (RNNs) while approaching the performance of Transformer models at a much lower computational cost.

- Results: On the Speech Commands V2 (SCv2) dataset, LMUFormer achieves performance comparable to state-of-the-art Transformer models with 53 times fewer parameters and 65 times lower computational cost. Because it processes data in real time, it also allows a 32.03% reduction in sequence length with minimal performance loss.
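The core of an LMU is a linear memory that compresses a sliding window of its input onto Legendre polynomials and is updated one step at a time with a fixed-size state; this is what lets an LMU-based model consume streaming data like an RNN. Below is a minimal pure-Python sketch of that memory update only, assuming the continuous-time matrices from the original LMU formulation (Voelker et al., NeurIPS 2019) and a simple forward-Euler discretization with a unit time step. The names `lmu_matrices`, `LegendreMemory`, `order`, and `theta` are hypothetical; the LMU's encoders and hidden state, as well as LMUFormer's convolutional patch embedding and channel mixer, are omitted.

```python
from typing import List, Tuple


def lmu_matrices(order: int, theta: float) -> Tuple[List[List[float]], List[float]]:
    """Legendre memory matrices for theta * dm/dt = A m + B u, returned pre-divided by theta.

    A[i][j] = (2i+1) * (-1 if i < j else (-1)**(i-j+1)),  B[i] = (2i+1) * (-1)**i
    """
    A = [[0.0] * order for _ in range(order)]
    B = [0.0] * order
    for i in range(order):
        B[i] = (2 * i + 1) * (-1) ** i / theta
        for j in range(order):
            sign = -1.0 if i < j else (-1.0) ** (i - j + 1)
            A[i][j] = (2 * i + 1) * sign / theta
    return A, B


class LegendreMemory:
    """Streaming memory: consumes one scalar per step and keeps only `order` floats of state."""

    def __init__(self, order: int = 4, theta: float = 16.0) -> None:
        self.A, self.B = lmu_matrices(order, theta)  # theta = window length in steps
        self.m = [0.0] * order

    def step(self, u: float) -> List[float]:
        # Forward-Euler update with dt = 1 (a simplifying assumption): m <- m + A m + B u
        n = len(self.m)
        self.m = [
            self.m[i] + sum(self.A[i][j] * self.m[j] for j in range(n)) + self.B[i] * u
            for i in range(n)
        ]
        return list(self.m)


mem = LegendreMemory(order=4, theta=16.0)
for t, u in enumerate([1.0, 0.5, -0.25, 0.0]):
    print(t, [round(x, 4) for x in mem.step(u)])
```

Each call to `step` costs O(order²) work no matter how many inputs have already been seen, whereas a Transformer attending over a growing context pays more per new token as the sequence lengthens. The LMU literature typically discretizes with a zero-order hold rather than Euler; Euler is used here only to keep the sketch free of dependencies.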

Technical Details

Technological frameworks used: Not specified

Models used: LMUFormer, Legendre Memory Units (LMU), convolutional patch embedding, convolutional channel mixer, spiking neural network architecture

Data used: Multiple sequence datasets, including Speech Commands V2 (SCv2)
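The spiking neural network architecture listed under "Models used" replaces continuous activations with binary spikes. The summary does not state which neuron model LMUFormer's spiking variant uses, so this sketch assumes a leaky integrate-and-fire (LIF) neuron with a hard reset, a common choice in spiking networks, and illustrates why binary spikes cut compute: a weight layer fed with spikes only has to add the weight columns of inputs that fired, with no multiplications. `lif_neuron`, `spike_driven_linear`, `tau`, `v_threshold`, and `v_reset` are hypothetical names chosen for illustration.

```python
from typing import List


def lif_neuron(inputs: List[float], tau: float = 2.0, v_threshold: float = 1.0,
               v_reset: float = 0.0) -> List[int]:
    """Leaky integrate-and-fire neuron (assumed model): input currents -> 0/1 spike train."""
    v = v_reset
    spikes: List[int] = []
    for x in inputs:
        # Membrane potential leaks toward v_reset while integrating the input
        v = v + (x - (v - v_reset)) / tau
        if v >= v_threshold:
            spikes.append(1)
            v = v_reset  # hard reset after firing
        else:
            spikes.append(0)
    return spikes


def spike_driven_linear(spikes: List[int], weights: List[List[float]]) -> List[float]:
    """Computes weights @ spikes using additions only, since every input is 0 or 1."""
    out = [0.0] * len(weights)
    for j, s in enumerate(spikes):
        if s:  # silent inputs are skipped; a spike on input j adds column j
            for i, row in enumerate(weights):
                out[i] += row[j]
    return out


train = lif_neuron([1.5, 1.5, 1.5, 1.5])
print(train)  # [0, 1, 0, 1]
print(spike_driven_linear([1, 0, 1], [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]))  # [4.0, 10.0]
```

In hardware, the savings come from replacing multiply-accumulates with accumulates and from sparsity (most inputs are 0 at any given step); this scalar sketch only illustrates the arithmetic.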

Potential Impact

Streaming applications, edge-computing device manufacturers, and companies specializing in real-time data analysis and processing could benefit from, or be disrupted by, this work.

Want to implement this idea in a business?

We have generated a startup concept here: StreamTechAI.
